Retrieval augmented generation — a search problem

You have one shot at delivering the right answer and earning customer trust. Ground your LLMs with retrieval augmented generation (RAG) using the Elasticsearch Platform.

Enhance your RAG workflows with Elasticsearch

Elasticsearch powers RAG with speed, scale, and precision. Built on the world’s most trusted context engineering platform, it grounds large language models (LLMs) in your enterprise data, retrieves multimodal context instantly, and delivers explainable results.

Build RAG-powered applications that retrieve the right information with Elasticsearch. Engineer context with hybrid retrieval, reranking, and summarization, enabling chatbots and agents to deliver accurate responses. With Elastic Agent Builder, turn that context into agents that reason and act on your data.
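As an illustration of what hybrid retrieval involves, the sketch below fuses a lexical ranking and a vector ranking with reciprocal rank fusion (RRF), one common fusion technique. It is plain Python, not Elasticsearch client code; the function name, the document IDs, and the two hit lists are hypothetical, and `k=60` is only a conventional default.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. k=60 is a conventional default, not a tuned value.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties break on document ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical result lists for a single query (assumed data).
lexical_hits = ["doc-a", "doc-b", "doc-c"]
vector_hits = ["doc-c", "doc-a", "doc-d"]

fused = reciprocal_rank_fusion([lexical_hits, vector_hits])
print(fused)
```

Documents that rank well in both lists rise to the top, which is the property that makes rank fusion a reasonable first choice before adding a dedicated reranking step.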

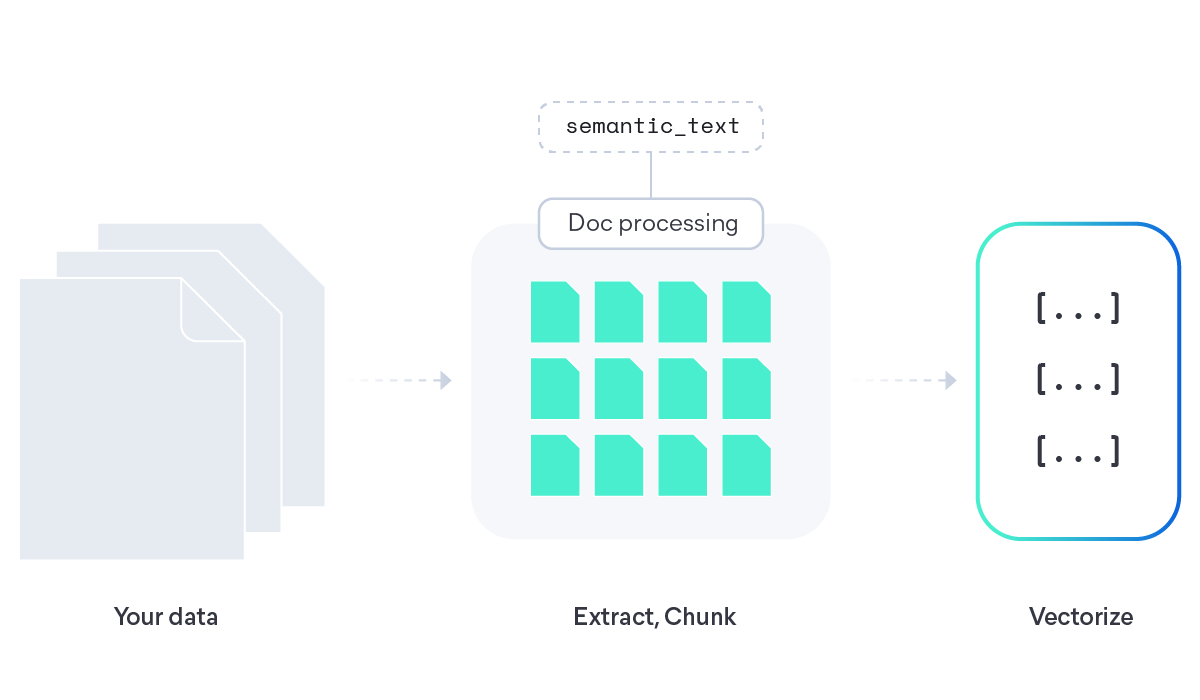

Start with automatic chunking, mapping, and embeddings wired for relevant retrieval, and run ready-to-use models on managed GPUs through Elastic Inference Service to get your RAG chatbot running quickly. Then customize models, quantization, and ranking for your use case.
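To make the chunking step concrete, here is a minimal sketch of overlapping word-window chunking in plain Python. It is an assumption-laden illustration, not the strategy Elasticsearch applies: the function name `chunk_words`, the window size, and the overlap are all hypothetical choices.

```python
def chunk_words(text, max_words=50, overlap=10):
    """Split text into word windows that overlap by `overlap` words.

    The overlap keeps sentences that straddle a boundary retrievable
    from at least one chunk. Sizes here are illustrative assumptions.
    """
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once the current window has reached the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


# Hypothetical 12-word input, chunked into windows of 5 with overlap 2.
sample = " ".join(f"w{i}" for i in range(12))
sample_chunks = chunk_words(sample, max_words=5, overlap=2)
print(sample_chunks)
```

Each chunk would then be embedded and indexed on its own, so retrieval can return the passage that answers a question rather than a whole document.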

Search and serve context across billions of documents spanning structured, unstructured, and vector data with millisecond latency, while keeping private data protected through RBAC and document-level permissions. Scale securely across regions with cross-cluster search for federated enterprise workloads.
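The idea behind document-level permissions in a RAG pipeline can be sketched in a few lines: only documents the requesting user is allowed to see should ever reach the prompt. The example below is a conceptual illustration in plain Python, assuming a hypothetical `Doc` type with an `allowed_roles` field. In Elasticsearch the platform enforces these rules at query time, so this is not how you would implement it there.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    allowed_roles: frozenset  # roles permitted to read this document (assumed field)


def visible_docs(docs, user_roles):
    """Keep only documents that share at least one role with the user."""
    roles = set(user_roles)
    return [d for d in docs if d.allowed_roles & roles]


# Hypothetical documents and roles.
docs = [
    Doc("hr-1", "Salary bands for 2024.", frozenset({"hr"})),
    Doc("kb-1", "How to reset a password.", frozenset({"hr", "support"})),
    Doc("fin-1", "Quarterly forecast.", frozenset({"finance"})),
]

support_view = visible_docs(docs, ["support"])
print([d.doc_id for d in support_view])
```

Filtering before generation, rather than after, means restricted text never enters the model's context and so cannot leak into a response.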

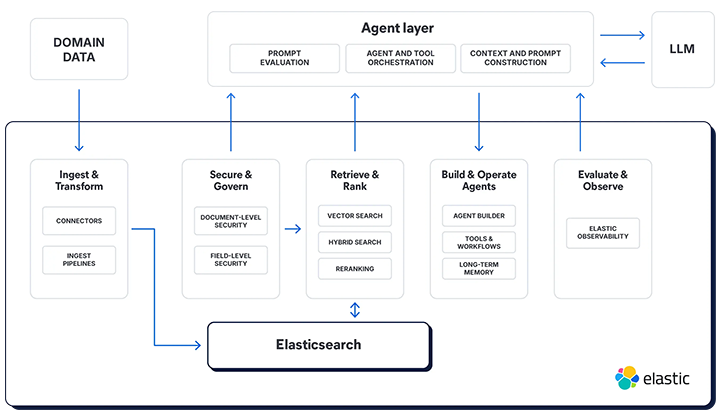

The architecture behind context-aware RAG

Connect your private data with secure hybrid search and managed inference, ground LLM responses with access controls, and deliver fast, observable, production-ready answers at scale.

What are you building?

Build chat grounded in your data and agents guided by context. Explore our full training catalog or follow along with our tutorials on Elasticsearch Labs.

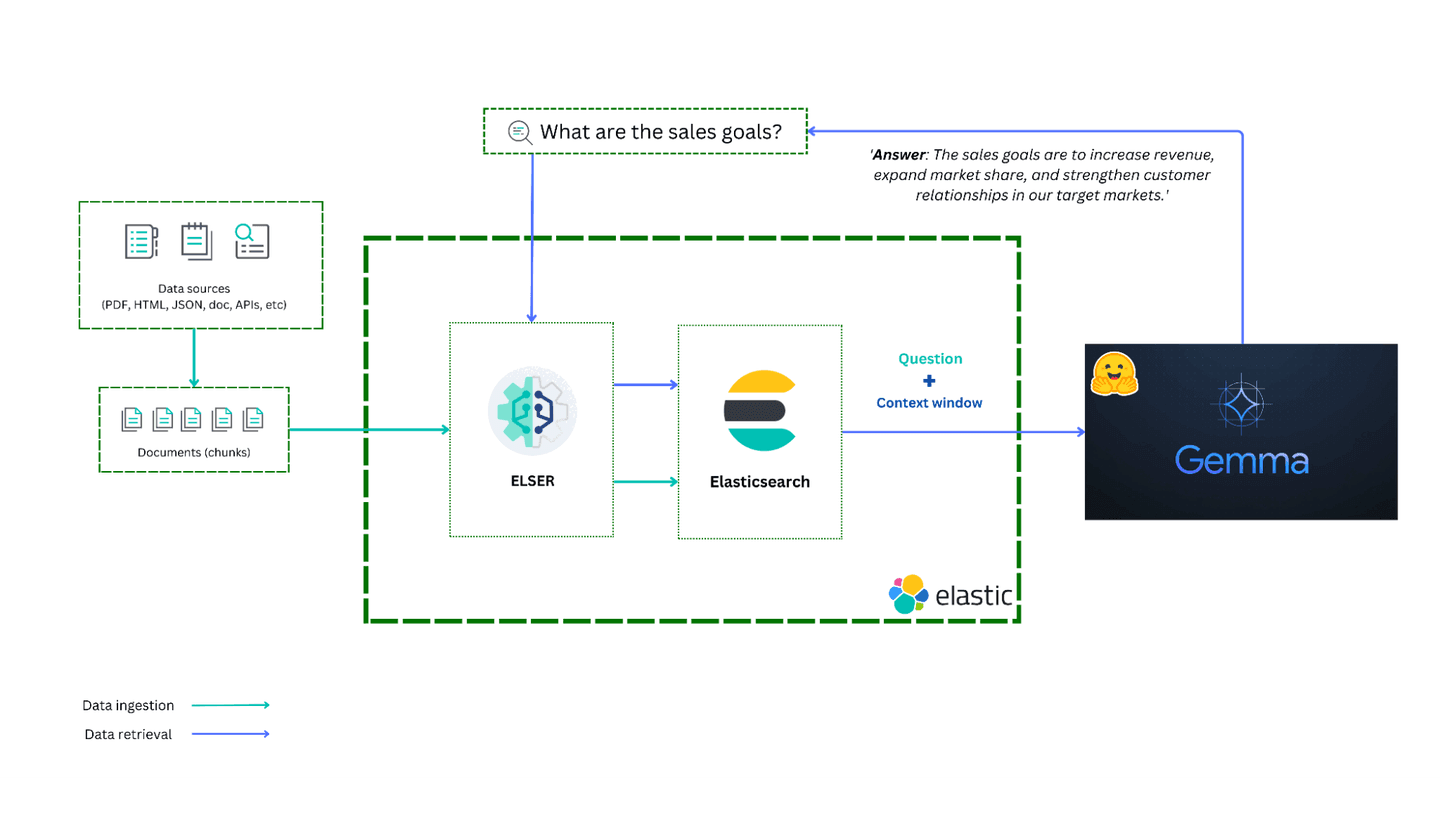

Q&A on your data. Build a RAG system with Gemma, Hugging Face, and Elasticsearch.

Build agentic RAG apps faster with LangGraph and Elasticsearch.

Elastic built a GenAI Support Assistant — explore the architecture, techniques, and best practices to create your own.

Frequently asked questions

Retrieval augmented generation (commonly referred to as RAG) is a natural language processing pattern that enables enterprises to search proprietary data sources and provide context that grounds large language models. This allows for more accurate, real-time responses in generative AI (GenAI) applications.
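The pattern can be sketched end to end without any external service. The example below uses a toy term-overlap retriever and builds a prompt that carries numbered sources, so a model's answer can cite them. Everything here is a hypothetical stand-in: the corpus, the function names, and the scoring are assumptions for illustration, and a real system would replace `retrieve` with a search engine query and send the prompt to an LLM.

```python
def retrieve(query, corpus, top_k=2):
    """Rank documents by how many query terms they share (toy scoring)."""
    q_terms = set(query.lower().split())
    scored = []
    for doc_id, text in corpus.items():
        overlap = len(q_terms & set(text.lower().split()))
        if overlap:
            scored.append((overlap, doc_id))
    # Most overlapping terms first; ties break on document ID.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:top_k]]


def build_prompt(query, corpus, doc_ids):
    """Assemble a grounded prompt with numbered, attributable sources."""
    lines = [
        f"[{i}] ({doc_id}) {corpus[doc_id]}"
        for i, doc_id in enumerate(doc_ids, start=1)
    ]
    context = "\n".join(lines)
    return (
        "Answer using only the sources below and cite them by number.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical knowledge base and question.
corpus = {
    "kb-1": "Reset your password from the account settings page.",
    "kb-2": "Invoices are emailed on the first day of each month.",
    "kb-3": "Contact support to change the account owner.",
}
query = "how do I reset my password"

hits = retrieve(query, corpus)
prompt = build_prompt(query, corpus, hits)
print(prompt)
```

Because the prompt names each source, the application can show users where an answer came from, which is what makes the response checkable.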

When implemented well, RAG provides secure access to relevant, domain-specific proprietary data in real time. It can reduce the incidence of hallucination in generative AI applications and increase the precision of responses.

Elastic makes RAG production-ready by solving the hardest parts out of the box: ingesting and grounding high-quality data, delivering accurate and efficient retrieval at scale, enforcing role- and document-level security, and preserving source attribution for trustworthy responses. With native vector, lexical, and hybrid retrieval; first-party models like ELSER and flexible third-party model integrations across the GenAI ecosystem; and proven performance at enterprise scale, Elastic helps teams build RAG systems that are faster to launch, easier to tune, and reliable in production.

Elasticsearch is built for relevance at scale, which is the foundation of context engineering. It brings together vector, keyword, and structured search with analytics, inference, and observability in a single platform. This makes it easy for developers to store, retrieve, and rank structured and unstructured business data with precision, so agents always get the right context.

With Agent Builder, Elasticsearch takes this further by bringing chat, retrieval, tool creation, and orchestration directly into the platform. Developers can build, test, and scale context-driven agents in minutes using their own data, models, and tools, all supported by Elasticsearch relevance, security, and performance.