For system administrators and SREs, Windows Event Logs are both a goldmine and a graveyard. They contain the critical data needed to diagnose the root cause of a server crash or a security breach, but they are often buried under gigabytes of noise. Traditionally, extracting value from these logs required brittle regex parsers, manual rule creation, and a significant amount of human intuition.

However, the landscape of log management is shifting. By combining the industry-standard ingestion of OpenTelemetry (OTel) with the AI-driven capabilities of Elastic Streams, we can change how we monitor Windows infrastructure. This approach does more than move data: it also uses Large Language Models (LLMs) to understand it.

The Challenge with Traditional Windows Logging

Windows generates a massive variety of logs: System, Security, Application, Setup, and Forwarded Events. Within those categories, you have thousands of Event IDs. Historically, getting this data into an observability platform involved installing proprietary agents and configuring complex pipelines to strip out the XML headers and format the messages.

Once the data was ingested, you still had to figure out what "bad" looked like. You had to know in advance that Event ID 7031 indicated a service crash, and then write a specific alert for it. If you missed a specific Event ID or if the format changed, your monitoring went dark.

Step 1: Ingestion via OpenTelemetry

The first step in modernizing this workflow is adopting OpenTelemetry. The OTel collector has matured significantly and now offers robust support for Windows environments. By installing the collector directly on Windows servers, you can configure receivers to tap into the event log subsystems.

The beauty of this approach is standardization. You aren't locked into a vendor-specific shipping agent. The OTel collector acts as a universal router, grabbing the logs and sending them to your observability backend: in this case, the Elastic logs index, which is designed to handle high-throughput streams.
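As a rough illustration of the receiver side, a minimal sketch might look like the following. It assumes the `windowseventlog` receiver from the Collector's contrib distribution; the labels after the slash (`system`, `security`, `application`) are arbitrary names chosen for this example, and the choice of channels is an assumption rather than the article's exact setup.

```yaml
# Sketch only: one receiver instance per Windows Event Log channel.
receivers:
  windowseventlog/system:
    channel: system        # System channel (e.g. service crashes)
  windowseventlog/security:
    channel: security      # Security channel (audit events)
  windowseventlog/application:
    channel: application   # Application channel
```

Each receiver instance reads a single channel, so adding another channel means adding another named instance and listing it in the logs pipeline.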

The key thing to pay attention to in this configuration is how we add this transform statement:

    transform/logs-streams:
      log_statements:
        - context: resource
          statements:
            - set(attributes["elasticsearch.index"], "logs")

This works with the vanilla OpenTelemetry Collector. When the data arrives in Elastic, the attribute tells Elastic to use the new wired streams feature, which enables all the downstream AI features discussed in later steps.
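To show where the transform statement sits in a complete pipeline, here is a hedged end-to-end sketch. It assumes the contrib `windowseventlog` receiver and `elasticsearch` exporter; the endpoint URL and the `ELASTIC_API_KEY` environment variable are placeholders invented for this example, so substitute the values for your own deployment.

```yaml
# Sketch only: a single logs pipeline from the Windows System channel to Elastic.
receivers:
  windowseventlog/system:
    channel: system

processors:
  transform/logs-streams:
    log_statements:
      - context: resource
        statements:
          # Route everything to the "logs" index so wired streams picks it up.
          - set(attributes["elasticsearch.index"], "logs")

exporters:
  elasticsearch:
    endpoints: ["https://elasticsearch.example.com:9200"]  # placeholder URL
    api_key: ${env:ELASTIC_API_KEY}                        # placeholder variable name

service:
  pipelines:
    logs:
      receivers: [windowseventlog/system]
      processors: [transform/logs-streams]
      exporters: [elasticsearch]
```

The transform processor has to be listed in the pipeline's `processors` for the attribute to be set; defining it without referencing it in `service.pipelines` has no effect.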

Check out my example configuration here

Step 2: AI-Driven Partitioning

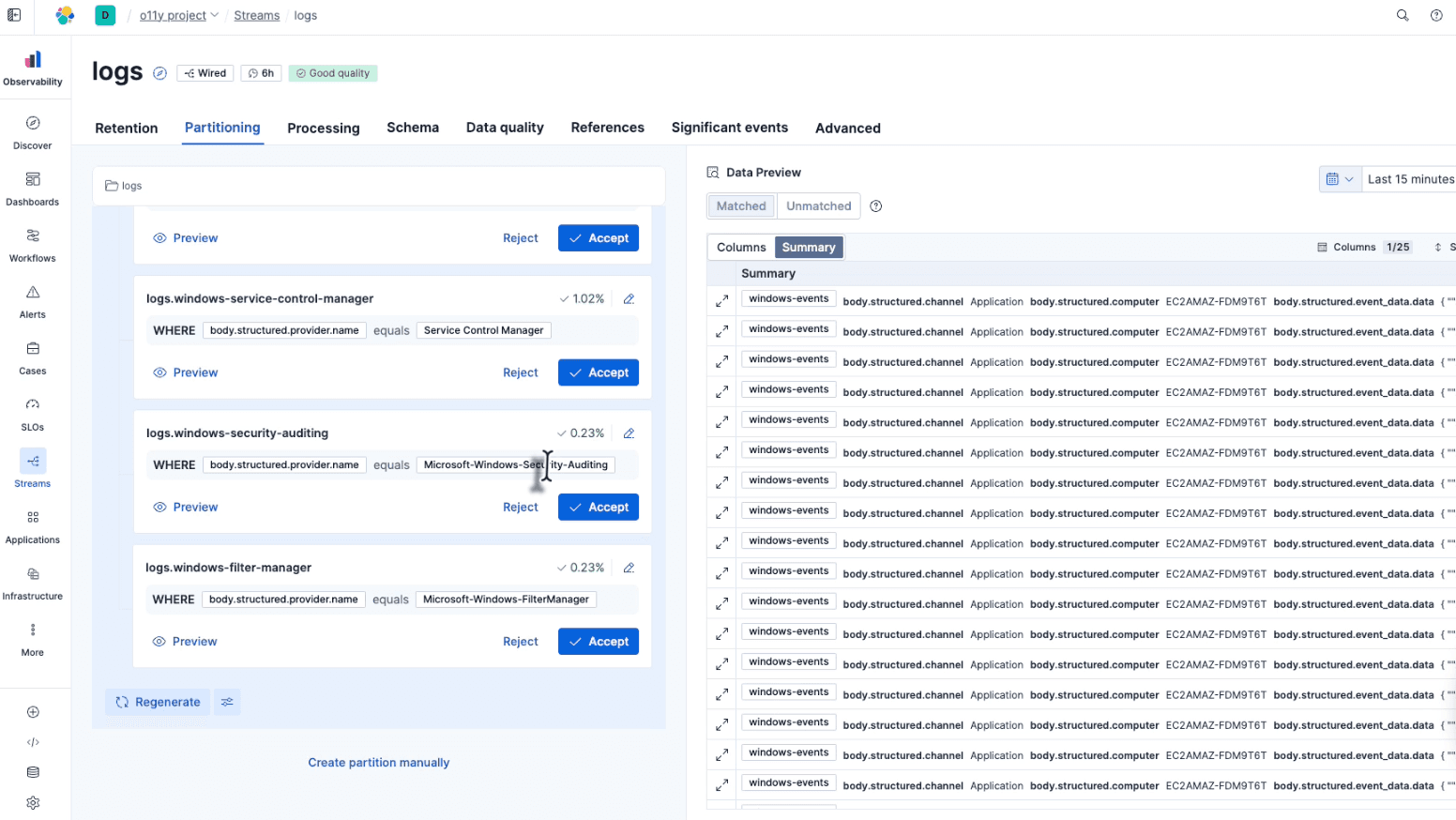

Once the data arrives, the next challenge is organization. Dumping all Windows logs into a single

This process involves analyzing the incoming stream to identify patterns. The system looks at the structure and content of the logs to determine their origin. For example, it can distinguish between a

The result is automatic partitioning. The system creates separate, optimized "buckets" or streams for each data type. You get a clean separation of concerns: Security logs go to one stream and File Manager logs to another, without your having to write a single conditional routing rule. This partitioning is crucial for performance and for the next phase of the process: analysis.

Step 3: Significant Events and LLM Analysis

Once your data is partitioned (e.g., into a dedicated

In a traditional setup, the system sees text strings. In an AI-driven setup, the system understands context. When an LLM analyzes the

Because the model understands the purpose of the log stream, it can generate suggestions for what constitutes a "Significant Event." It doesn't need you to tell it to look for crashes; it knows that for a Service Manager, a crash is a critical failure.

From Passive Storage to Proactive Suggestions

The workflow effectively automates the creation of detection rules. The LLM scans the logs and generates a list of potential problems relevant to that specific dataset, such as:

- Service Crashes: High severity anomalies where background processes terminate unexpectedly.

- Startup/Boot Failures: Critical errors preventing the OS from reaching a stable state.

- Permission Denials: Security-relevant events regarding service interactions.

It bubbles these up as suggested observations. You can review a list of potential issues, see the severity the AI has assigned to them (e.g., Critical, Warning), and with a single click, generate the query required to find those logs.

Conclusion

The combination of OpenTelemetry for standardized ingestion and AI-driven Streams for analysis turns the chaotic flood of Windows logs into a structured, actionable intelligence source. We are moving away from the era of "log everything, look at nothing" to an era where our tools understand our infrastructure as well as we do.

The barrier to effective monitoring is no longer technical complexity. Whether you are tracking security audits or debugging boot loops, leveraging LLMs to partition and analyze your streams is the new standard for observability.