Elasticsearch Query Language (ES|QL) is a new piped query language that lets users chain operations together step by step. It is optimized for data analysis and runs on a new architecture designed to analyze large data volumes with high efficiency.

You can learn more about ES|QL in this article and the documentation.

ES|QL queries can return the response in different formats, such as JSON, CSV, TSV, YAML, Arrow, and binary. Starting in Elasticsearch 8.16, the Node.js client includes helpers to handle some of these formats.

This article covers the newest helpers, toArrowReader and toArrowTable, which add Apache Arrow support to the Elasticsearch Node.js client. For more on helpers, check out this article.

What is Apache Arrow?

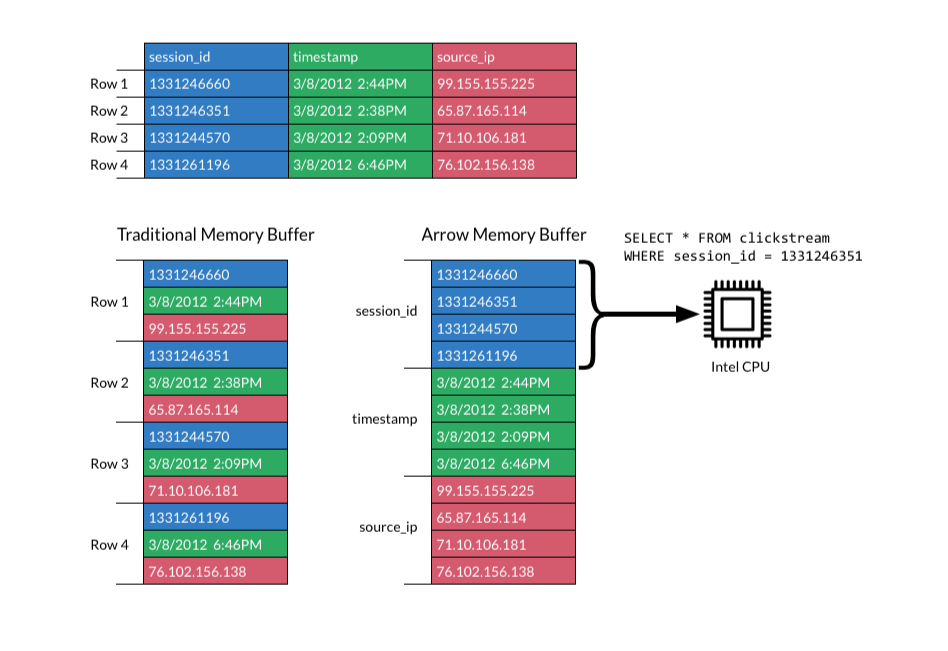

Apache Arrow is a columnar data format for analytics. The format is language-agnostic, so the same data layout can be shared across the programming languages used in modern environments.

One of the primary benefits of the Arrow format is that its binary, columnar format is optimized for very fast reads, enabling high-performance analytics calculations.
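To make the columnar idea concrete, here is a minimal sketch in plain JavaScript that does not use the Arrow library at all. It only contrasts a row-oriented layout with a column-oriented one built from typed arrays. The field names and the third row are illustrative assumptions, and `sumColumn` is a hypothetical helper, not part of any Elastic or Arrow API.

```javascript
// Row-oriented layout: one object per record (illustrative data).
const rows = [
  { response: 200, bytes: 2603 },
  { response: 200, bytes: 6335 },
  { response: 404, bytes: 0 },
];

// Column-oriented layout: one contiguous typed array per field.
// This mirrors the general idea behind a columnar format, not Arrow's actual encoding.
const columns = {
  response: Int32Array.from(rows, (r) => r.response),
  bytes: Int32Array.from(rows, (r) => r.bytes),
};

// Hypothetical helper: an aggregate over one field scans a single buffer
// and never touches the other fields.
function sumColumn(column) {
  let total = 0;
  for (let i = 0; i < column.length; i++) total += column[i];
  return total;
}

const totalBytes = sumColumn(columns.bytes);
console.log(totalBytes); // 8938
```

Analytics queries typically read a few fields across many records, which is the access pattern this layout favors.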

Read more about how to leverage Arrow with ES|QL in this article.

ES|QL Apache Arrow helpers

For the examples, we are going to use Elastic’s Web logs sample dataset. You can ingest it by following this documentation.

Elasticsearch client

Set up the Elasticsearch client by specifying your Elasticsearch endpoint URL and API Key.

const { Client } = require("@elastic/elasticsearch");

const esClient = new Client({
  node: "ELASTICSEARCH_ENDPOINT",
  auth: { apiKey: "ELASTICSEARCH_API_KEY" },
});

toArrowReader

The toArrowReader helper reduces memory usage by streaming the result set in batches instead of loading it all into memory at once. This makes it possible to perform calculations on very large data sets without exhausting your system's memory.
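As a rough sketch of why batching helps, the snippet below keeps only a running aggregate while iterating over batches, so memory use does not grow with the number of rows. It runs without a cluster: `fakeReader` is a hypothetical stand-in for the async iterable returned by toArrowReader, and plain objects stand in for Arrow rows (with real rows you might call `record.toJSON()` first, as in the article's example). `countByResponse` is also an illustrative name, not a client API.

```javascript
// Hypothetical stand-in for the reader returned by toArrowReader():
// an async iterable that yields one batch of rows at a time.
async function* fakeReader(batches) {
  for (const batch of batches) yield batch;
}

// Count rows per response code while holding only the current batch
// and the running totals in memory.
async function countByResponse(reader) {
  const counts = {};
  for await (const recordBatch of reader) {
    for (const record of recordBatch) {
      counts[record.response] = (counts[record.response] || 0) + 1;
    }
  }
  return counts;
}

// Two small batches of illustrative data.
const demo = countByResponse(
  fakeReader([
    [{ response: "200" }, { response: "404" }],
    [{ response: "200" }],
  ])
);

demo.then((counts) => console.log(counts));
```

The same loop shape should apply to a real reader. Only the source of the batches changes.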

The toArrowReader helper lets you process each row as its batch arrives:

const q = `FROM kibana_sample_data_logs
| KEEP message, response, tags, @timestamp, ip, agent
| LIMIT 2 `;

const reader = await esClient.helpers.esql({ query: q }).toArrowReader();

const toArrowReaderResults = [];

for await (const recordBatch of reader) {
  for (const record of recordBatch) {
    const recordData = record.toJSON();
    toArrowReaderResults.push(recordData);
  }
}

console.log(JSON.stringify(toArrowReaderResults, null, 2));

/*
RESULT:
[
  {
    "message": "49.167.60.184 - - [2018-09-16T09:10:01.825Z] \"GET /kibana/kibana-6.3.2-darwin-x86_64.tar.gz HTTP/1.1\" 200 2603 \"-\" \"Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24\"",
    "response": "200",
    "tags": [
      "error",
      "info"
    ],
    "@timestamp": 1749373801825,
    "ip": {
      "0": 49,
      "1": 167,
      "2": 60,
      "3": 184
    },
    "agent": "Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24"
  },
  {
    "message": "225.72.201.213 - - [2018-09-16T09:37:35.555Z] \"GET /elasticsearch/elasticsearch-6.3.2.zip HTTP/1.1\" 200 6335 \"-\" \"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322)\"",
    "response": "200",
    "tags": [
      "success",
      "info"
    ],
    "@timestamp": 1749375455555,
    "ip": {
      "0": 225,
      "1": 72,
      "2": 201,
      "3": 213
    },
    "agent": "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322)"
  }
]
*/

toArrowTable

Use toArrowTable to load all the results into a single Arrow table object once the request completes, instead of streaming them in batches.

This helper is useful if your dataset will easily fit in memory and you still want to leverage Arrow’s zero-copy reads and compact transfer size while keeping the code simple.

toArrowTable is also a good option if the application is already working with Arrow data, since you don’t need to serialize the data. In addition, given that Arrow is language-agnostic, you can use it regardless of the platform and language.
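One reason to stay in Arrow form is that a table can be read column by column. The sketch below assumes the object returned by toArrowTable is an apache-arrow Table exposing `getChild(name)`, which returns an iterable column or null. `countColumnValues` is a hypothetical helper, and `stubTable` is a hand-written stand-in so the snippet runs without a cluster or the Arrow library.

```javascript
// Hypothetical helper: count occurrences of each value in one column.
// Assumes `table.getChild(name)` returns an iterable column or null,
// as apache-arrow tables do.
function countColumnValues(table, columnName) {
  const column = table.getChild(columnName);
  if (column == null) throw new Error(`Unknown column: ${columnName}`);
  const counts = new Map();
  for (const value of column) {
    counts.set(value, (counts.get(value) || 0) + 1);
  }
  return counts;
}

// Hand-written stand-in for an Arrow table with a single "response" column.
const stubTable = {
  getChild: (name) => (name === "response" ? ["200", "200", "404"] : null),
};

const responseCounts = countColumnValues(stubTable, "response");
console.log(responseCounts.get("200")); // 2
```

With a real table you would pass the result of toArrowTable in place of `stubTable`. Reading one column this way avoids converting every row to a JavaScript object first.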

const q = `FROM kibana_sample_data_logs
| KEEP message, response, tags, @timestamp, ip, agent
| LIMIT 2 `;

const toArrowTableResults = await esClient.helpers
  .esql({ query: q })
  .toArrowTable();

const arrayTable = toArrowTableResults.toArray();

console.log(JSON.stringify(arrayTable, null, 2));

/*
RESULT:
[
  {
    "message": "49.167.60.184 - - [2018-09-16T09:10:01.825Z] \"GET /kibana/kibana-6.3.2-darwin-x86_64.tar.gz HTTP/1.1\" 200 2603 \"-\" \"Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24\"",
    "response": "200",
    "tags": [
      "error",
      "info"
    ],
    "@timestamp": 1749373801825,
    "ip": {
      "0": 49,
      "1": 167,
      "2": 60,
      "3": 184
    },
    "agent": "Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24"
  },
  {
    "message": "225.72.201.213 - - [2018-09-16T09:37:35.555Z] \"GET /elasticsearch/elasticsearch-6.3.2.zip HTTP/1.1\" 200 6335 \"-\" \"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322)\"",
    "response": "200",
    "tags": [
      "success",
      "info"
    ],
    "@timestamp": 1749375455555,
    "ip": {
      "0": 225,
      "1": 72,
      "2": 201,
      "3": 213
    },
    "agent": "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322)"
  }
]
*/

Conclusion

The Apache Arrow helpers provided by the Elasticsearch Node.js client simplify day-to-day tasks like analyzing large data sets efficiently and receiving Elasticsearch responses in a compact, language-agnostic format.

In this article, we learned how to use the ES|QL client helpers to parse the Elasticsearch response as an Arrow Reader or an Arrow Table.

