In the world of AI-powered search, efficiently finding the right data within vast datasets is paramount. Traditional keyword-based searches often fall short on queries phrased in natural language, which is where semantic search comes in. However, if you want to combine the power of semantic search with the ability to filter on structured metadata such as dates and numeric values, that’s where self-querying retrievers come into play.

Self-querying retrievers offer a powerful way to leverage metadata for more precise and nuanced searches. Combined with the search and indexing capabilities of Elasticsearch, self-querying becomes even more potent, enabling developers to increase the relevance of their RAG applications. This blog post explores the concept of self-querying retrievers, demonstrates their integration with Elasticsearch using LangChain and Python, and shows how they can make your search even more powerful!

What are self-querying retrievers?

Self-querying retrievers are a feature provided by LangChain that bridges the gap between natural language queries and structured metadata filtering. Instead of relying solely on keyword matching against document content, they use a large language model (LLM) to parse a user's natural language query and extract the relevant metadata filters, and then rely on the vector search capabilities of Elasticsearch to run the search. For example, a user might ask, "Find science fiction movies released after 2000 with a rating above 8." A traditional keyword search engine would struggle to capture the implied meaning, while semantic search alone would understand the context of the query but could not apply the release-year and rating filters needed to get you the best answer. A self-querying retriever, however, analyzes the query, identifies the metadata fields (genre, year, rating), and generates a structured query that Elasticsearch can understand and execute efficiently. This allows for a more intuitive and user-friendly search experience, in which users can express complex search criteria, filters included, in plain English.
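To make the example above concrete, here is a minimal, stand-alone sketch of the kind of structured query an LLM might extract from "Find science fiction movies released after 2000 with a rating above 8", and how those conditions could be translated into an Elasticsearch bool filter. The `Comparison` and `StructuredQuery` classes and the `to_es_filter` helper are hypothetical illustrations, not LangChain's API. The `metadata.` field prefix and the `.keyword` sub-field for exact string matches are assumptions about how the documents are mapped, and LangChain's own Elasticsearch translator may produce a somewhat different query shape.

```python
from dataclasses import dataclass, field
from typing import Any

RANGE_COMPARATORS = {"gt", "gte", "lt", "lte"}


@dataclass
class Comparison:
    """One metadata condition extracted from the user's question (hypothetical type)."""
    attribute: str   # e.g. "year"
    comparator: str  # "eq", "gt", "gte", "lt" or "lte"
    value: Any


@dataclass
class StructuredQuery:
    """Hypothetical container: text for semantic search plus metadata filters."""
    query: str
    filters: list[Comparison] = field(default_factory=list)


def to_es_clause(c: Comparison) -> dict:
    """Translate one comparison into an Elasticsearch query clause.

    Assumes metadata lives under a `metadata.` prefix and that string fields
    have a `.keyword` sub-field for exact matching.
    """
    es_field = f"metadata.{c.attribute}"
    if c.comparator in RANGE_COMPARATORS:
        return {"range": {es_field: {c.comparator: c.value}}}
    if c.comparator == "eq":
        if isinstance(c.value, str):
            es_field += ".keyword"
        return {"term": {es_field: c.value}}
    raise ValueError(f"unsupported comparator: {c.comparator}")


def to_es_filter(sq: StructuredQuery) -> dict:
    """AND all comparisons together in a non-scoring bool filter."""
    return {"bool": {"filter": [to_es_clause(c) for c in sq.filters]}}


# What an LLM *might* extract from:
# "Find science fiction movies released after 2000 with a rating above 8."
parsed = StructuredQuery(
    query="science fiction movies",
    filters=[
        Comparison("genre", "eq", "science fiction"),
        Comparison("year", "gt", 2000),
        Comparison("rating", "gt", 8),
    ],
)

print(parsed.query)
print(to_es_filter(parsed))
```

The semantic part of the question stays as free text for vector search, while each extracted condition becomes an exact `term` or `range` clause; that split is what lets the retriever honor both the meaning and the hard constraints of the question.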

This all works through an LLM chain: the LLM parses the natural language query to extract filters, and the resulting structured filters are then applied in Elasticsearch against our documents, which contain both embeddings and metadata.

Source: LangChain
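Conceptually, the last step of the chain is a filtered vector search: the metadata filters narrow the set of candidate documents, and the remaining documents are ranked by how similar their embeddings are to the query's embedding. The in-memory sketch below illustrates that idea with cosine similarity over hypothetical toy 3-dimensional vectors (real text-embedding-ada-002 embeddings have 1,536 dimensions), reusing metadata values from the movie examples in this post. Elasticsearch does this at scale with approximate nearest-neighbour search, so treat this as an illustration of the idea rather than of how Elasticsearch implements it.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def filtered_search(query_vector, docs, predicate, k=3):
    """Pre-filter documents on metadata, then rank the survivors by similarity."""
    candidates = [d for d in docs if predicate(d["metadata"])]
    candidates.sort(key=lambda d: cosine_similarity(query_vector, d["vector"]), reverse=True)
    return candidates[:k]


# Hypothetical toy vectors; metadata values come from the movie examples in this post.
movies = [
    {"title": "Prometheus", "vector": [0.2, 0.3, 0.8],
     "metadata": {"year": 2012, "rating": 7.7, "genre": "science fiction"}},
    {"title": "The Godfather", "vector": [0.0, 0.2, 0.9],
     "metadata": {"year": 1972, "rating": 9.2, "genre": "crime"}},
    {"title": "Stalker", "vector": [0.6, 0.6, 0.1],
     "metadata": {"year": 1979, "rating": 9.9, "genre": "thriller"}},
]


def from_the_1970s(metadata: dict) -> bool:
    """A filter an LLM might derive from "a movie from the 1970s" (hypothetical)."""
    return 1970 <= metadata["year"] <= 1979


query_vector = [0.1, 0.3, 0.8]  # stand-in for the embedded query text
for movie in filtered_search(query_vector, movies, from_the_1970s):
    print(movie["title"])
```

With these toy vectors, Prometheus is actually the closest match to the query vector, but the year filter removes it before ranking, leaving The Godfather and Stalker. That is exactly the behavior semantic search alone cannot provide.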

Implementing self-querying retrievers

Integrating self-querying retrievers with Elasticsearch involves a few key steps. In our Python example we’ll use LangChain’s AzureChatOpenAI and AzureOpenAIEmbeddings, plus ElasticsearchStore to store and search our documents in Elasticsearch. We begin by importing the LangChain libraries and setting up our LLM, as well as the embedding model we’ll use to create vectors:

from langchain_openai import AzureOpenAIEmbeddings, AzureChatOpenAI
from langchain_elasticsearch import ElasticsearchStore
from langchain.chains.query_constructor.base import AttributeInfo
from langchain.retrievers.self_query.base import SelfQueryRetriever
from langchain.docstore.document import Document
import os

# LLM that turns natural language queries into structured queries
llm = AzureChatOpenAI(
    azure_endpoint=os.environ["AZURE_ENDPOINT"],
    deployment_name=os.environ["AZURE_OPENAI_DEPLOYMENT_NAME"],
    model_name="gpt-4",
    api_version="2024-02-15-preview",
)

# Embedding model used to vectorize the documents and the queries
embeddings = AzureOpenAIEmbeddings(
    azure_endpoint=os.environ["AZURE_ENDPOINT"],
    model="text-embedding-ada-002",
)

In my example I’m using Azure OpenAI for both the LLM (gpt-4) and the embedding model (text-embedding-ada-002). However, this should work with any cloud-based LLM, or with a local one such as Llama 3, and the same goes for the embedding model; I’m using OpenAI embeddings here for convenience since we’re already using gpt-4.
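The setup above reads its configuration with `os.environ[...]`, which raises a `KeyError` on the first missing variable. If you want a clearer failure, a small helper like the hypothetical `require_env` below (standard library only, not part of LangChain) checks all required variables up front and reports every missing one at once. Depending on your setup you will likely need additional variables, such as an API key for Azure OpenAI.

```python
import os


def require_env(*names: str) -> dict[str, str]:
    """Return the named environment variables, raising one error that lists
    every missing (or empty) variable instead of failing on the first."""
    missing = [name for name in names if not os.environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: os.environ[name] for name in names}
```

For example, you could call `require_env("AZURE_ENDPOINT", "AZURE_OPENAI_DEPLOYMENT_NAME")` before constructing the `AzureChatOpenAI` client and pass the returned values in place of the direct `os.environ` lookups.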

Next, we define the metadata attributes the retriever can filter on, along with our documents and their metadata:

# --- Define Metadata Attributes ---
metadata_field_info = [
    AttributeInfo(
        name="year",
        description="The year the movie was released",
        type="integer",
    ),
    AttributeInfo(
        name="rating",
        description="The rating of the movie (out of 10)",
        type="float",
    ),
    AttributeInfo(
        name="genre",
        description="The genre of the movie",
        type="string",
    ),
    AttributeInfo(
        name="director",
        description="The director of the movie",
        type="string",
    ),
    AttributeInfo(
        name="title",
        description="The title of the movie",
        type="string",
    ),
]

# --- Define the documents, each with its metadata ---
docs = [
    Document(
        page_content="Following clues to the origin of mankind, a team finds a structure on a distant moon, but they soon realize they are not alone.",
        metadata={"year": 2012, "rating": 7.7, "genre": "science fiction", "title": "Prometheus"},
    ),
    # ...more documents
]

Next up, we add the documents to an Elasticsearch index. The es_store.add_embeddings function adds them to the index named in the ELASTIC_INDEX_NAME variable; if no index with that name exists in the cluster, one is created. In my example I’m using an Elastic Cloud deployment, but this also works with a self-managed cluster:

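# ELASTIC_CLOUD_ID, ELASTIC_USERNAME, ELASTIC_PASSWORD and ELASTIC_INDEX_NAME are assumed to be defined earlier
es_store = ElasticsearchStore(
    es_cloud_id=ELASTIC_CLOUD_ID,
    es_user=ELASTIC_USERNAME,
    es_password=ELASTIC_PASSWORD,
    index_name=ELASTIC_INDEX_NAME,
    embedding=embeddings,
)

# Split the documents into texts and metadata, then embed the texts
texts = [doc.page_content for doc in docs]
metadatas = [doc.metadata for doc in docs]
doc_embeddings = embeddings.embed_documents(texts)

es_store.add_embeddings(text_embeddings=list(zip(texts, doc_embeddings)), metadatas=metadatas)

Next, we create the self-querying retriever. It takes a user's query, uses the LLM (Azure OpenAI, as set up earlier) to interpret it, and constructs an Elasticsearch query that combines semantic search with metadata filters. All of this is executed by docs = retriever.invoke(query):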

# --- Create the self-querying Retriever (Using your LLM) ---
retriever = SelfQueryRetriever.from_llm(
    llm,
    es_store,
    "Search for movies",  # description of the document contents
    metadata_field_info,
    verbose=True,
)

while True:
    # Prompt the user for a query
    query = input("\nEnter your search query (or type 'exit' to quit): ")
    if query.lower() == 'exit':
        break

    # Execute the query and print the results
    print(f"\nQuery: {query}")
    docs = retriever.invoke(query)
    print(f"Found {len(docs)} documents:")
    for doc in docs:
        print(doc.page_content)
        print(doc.metadata)
        print("-" * 20)

And there we have it! The query is executed against the Elasticsearch index, returning the most relevant documents that match both the content and the metadata criteria. This lets users make natural language queries such as the one in our example below:

Query: What is a highly rated movie from the 1970s?
Found 3 documents:
The aging patriarch of an organized crime dynasty transfers control of his clandestine empire to his reluctant son.
{'year': 1972, 'rating': 9.2, 'genre': 'crime', 'title': 'The Godfather'}
--------------------
Three men walk into the Zone, three men walk out of the Zone
{'year': 1979, 'director': 'Andrei Tarkovsky', 'genre': 'thriller', 'rating': 9.9, 'title': 'Stalker'}
--------------------
Four armed men hijack a New York City subway car and demand a ransom for the passengers
{'year': 1974, 'rating': 7.6, 'director': 'Joseph Sargent', 'genre': 'action', 'title': 'The Taking of Pelham One Two Three'}

Conclusion

While self-querying retrievers offer some significant advantages, it's important to consider their limitations:

- Self-querying retrievers rely on the accuracy of the LLM in interpreting the user's query and extracting the correct metadata filters. If the query is excessively ambiguous or if the metadata is poorly defined, the retriever may produce incorrect or incomplete results.

- Every search requires an LLM call to construct the structured query before Elasticsearch runs it, which adds latency. For extremely large datasets or very complex queries, the LLM processing and query construction can add further overhead.

- The cost of using LLMs for query interpretation should be considered, especially for high-traffic applications.
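One way to soften the first limitation is to check the filters the LLM produces against the metadata schema before running the search, dropping any condition that references an unknown attribute or has the wrong value type. The sketch below is a hypothetical, stand-alone helper, independent of whatever validation LangChain performs internally. Its `SCHEMA` mirrors the `metadata_field_info` defined earlier, and filters are represented as plain (attribute, comparator, value) tuples for simplicity.

```python
from typing import Any

# Hypothetical schema mirroring metadata_field_info: attribute -> accepted Python types.
SCHEMA: dict[str, tuple[type, ...]] = {
    "year": (int,),
    "rating": (int, float),
    "genre": (str,),
    "director": (str,),
    "title": (str,),
}
RANGE_COMPARATORS = {"gt", "gte", "lt", "lte"}

Filter = tuple[str, str, Any]  # (attribute, comparator, value)


def is_valid(attribute: str, comparator: str, value: Any) -> bool:
    """Check one LLM-produced condition against the schema."""
    expected = SCHEMA.get(attribute)
    # Reject unknown attributes and wrong value types (bool is a subclass of int).
    if expected is None or isinstance(value, bool) or not isinstance(value, expected):
        return False
    if comparator == "eq":
        return True
    # In this sketch, range comparisons are only allowed on numeric attributes.
    return comparator in RANGE_COMPARATORS and not isinstance(value, str)


def split_filters(filters: list[Filter]) -> tuple[list[Filter], list[Filter]]:
    """Separate conditions that are safe to send to Elasticsearch from rejected ones."""
    valid = [f for f in filters if is_valid(*f)]
    rejected = [f for f in filters if not is_valid(*f)]
    return valid, rejected


# A hypothetical LLM output for "a highly rated movie from the 1970s" with two mistakes.
llm_filters = [
    ("year", "gte", 1970),
    ("year", "lt", 1980),
    ("rating", "gt", "high"),       # wrong type: rating must be numeric
    ("studio", "eq", "Paramount"),  # unknown attribute
]
valid, rejected = split_filters(llm_filters)
print("valid:", valid)
print("rejected:", rejected)
```

If every condition is rejected, the search simply runs without metadata filters, falling back to plain semantic search. To address the cost concern, a wrapper along these lines could also memoize parsed queries, for example with `functools.lru_cache` keyed on the query string, so that repeated questions do not trigger another LLM call.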

Despite these considerations, self-querying retrievers represent a powerful augmentation to information retrieval, especially when combined with the scalability and power of Elasticsearch, offering a compelling solution for building Search AI applications.

Interested in trying it out yourself? Start a free cloud trial and check out the sample code here. Interested in other retrievers you can use within the Elastic Stack such as Reciprocal Rank Fusion? See our documentation here to learn more.


Related content

February 26, 2025

Embeddings and reranking with Alibaba Cloud AI Service

Using Alibaba Cloud AI Service features with Elastic.

February 14, 2025

Using Ollama with the Inference API

The Ollama API is compatible with the OpenAI API so it's very easy to integrate Ollama with Elasticsearch.

February 21, 2025

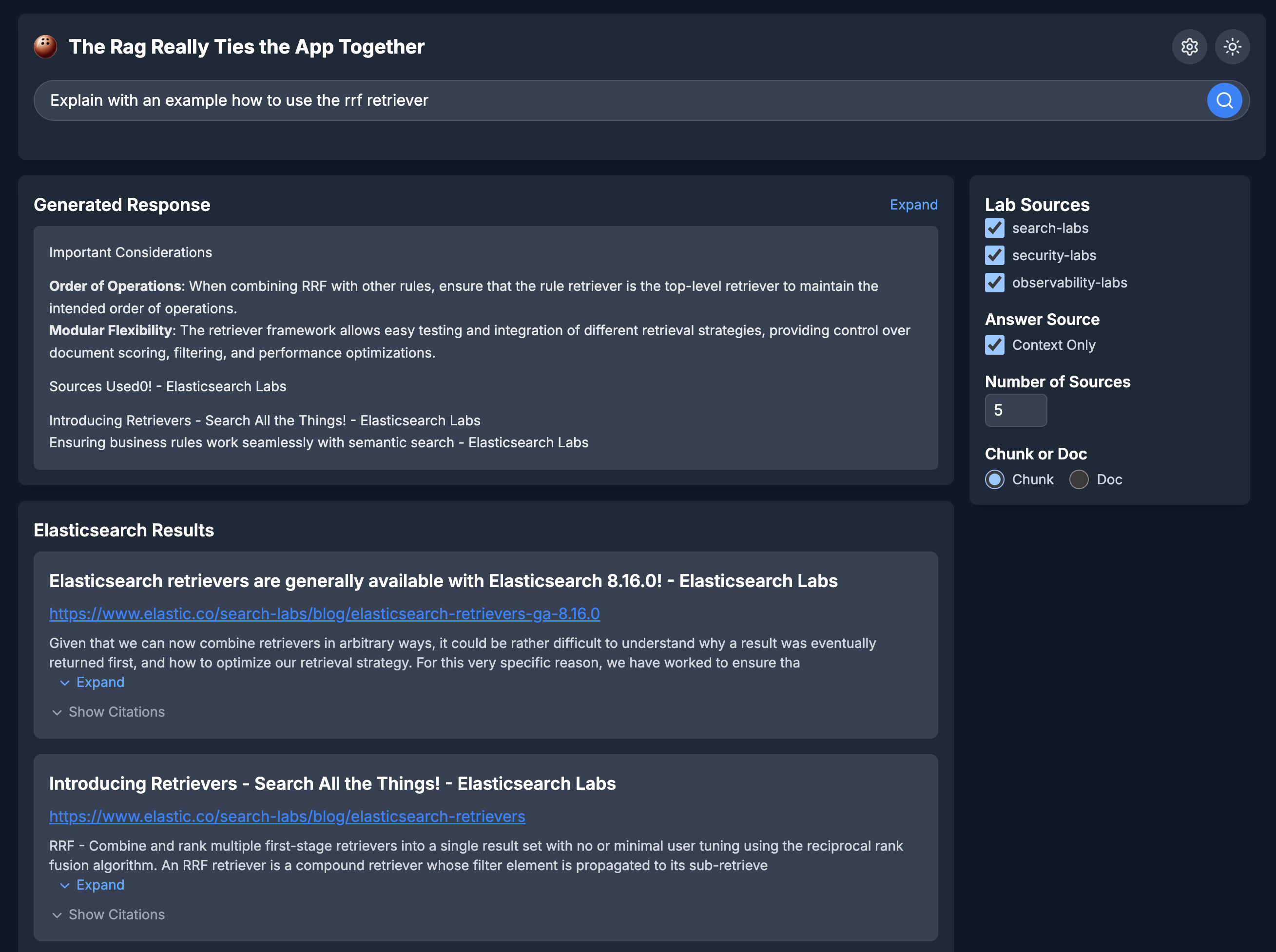

ChatGPT and Elasticsearch revisited: Part 2 - The UI Abides

This blog expands on Part 1 by introducing a fully functional web UI for our RAG-based search system. By the end, you'll have a working interface that ties the retrieval, search, and generation process together—while keeping things easy to tweak and explore.

February 11, 2025

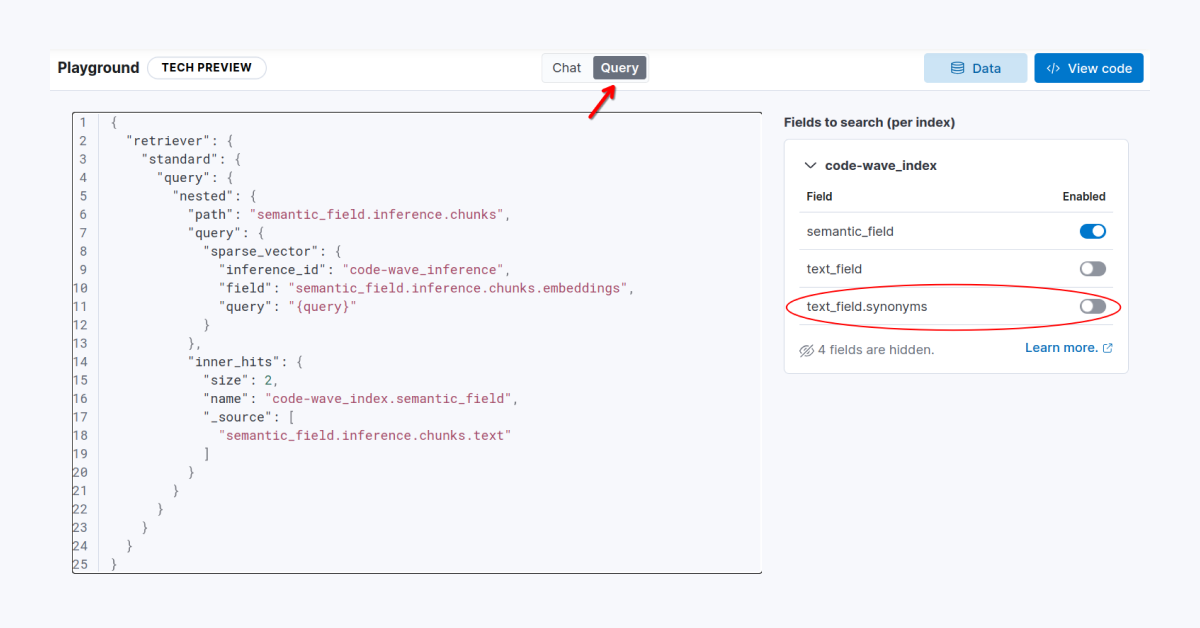

Are synonyms important in RAG?

Exploring the functionality of Elasticsearch synonyms in a RAG application.

January 30, 2025

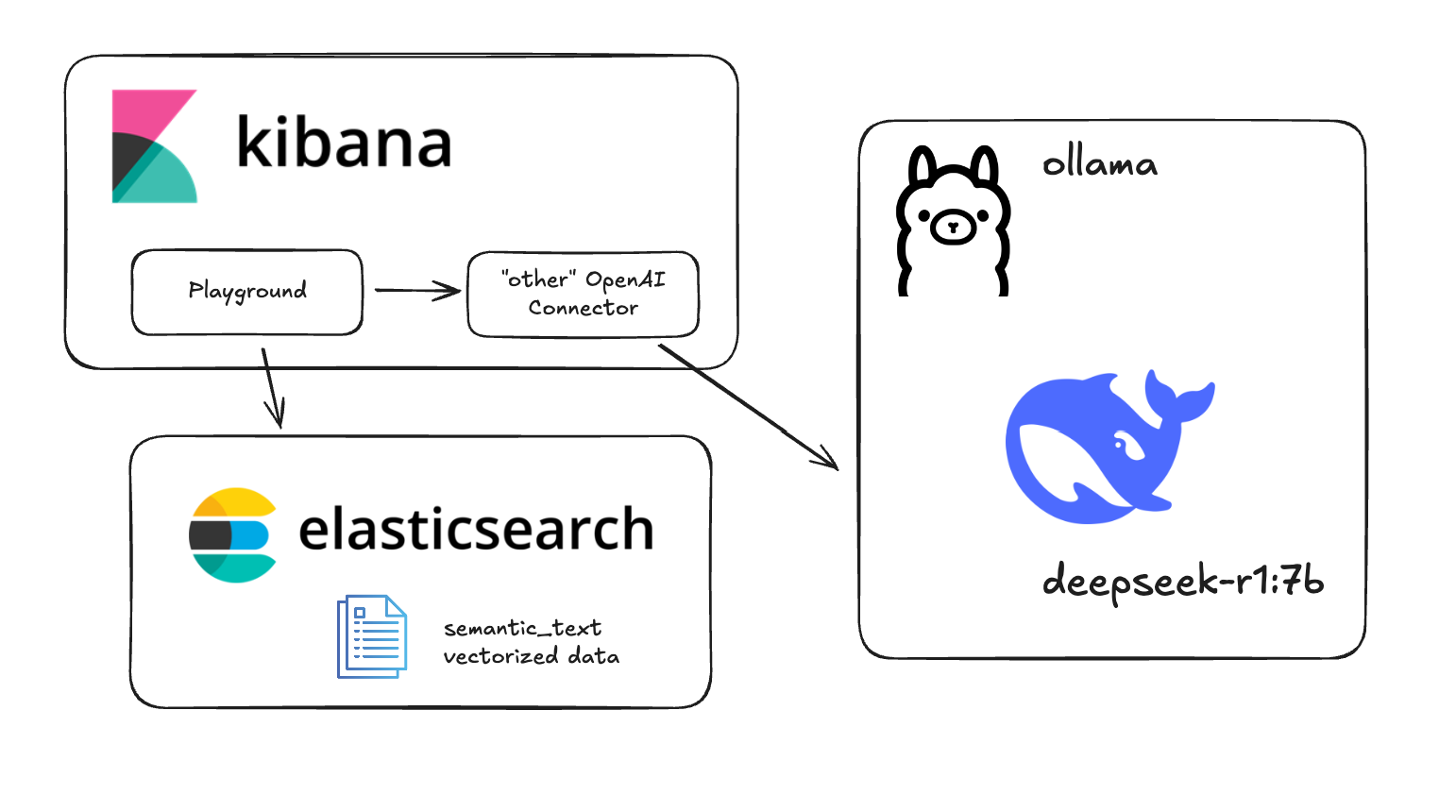

Testing DeepSeek R1 locally for RAG with Ollama and Kibana

Learn how to run a local instance of DeepSeek and connect to it from within Kibana.