Case deflection is a Customer Service (CS) strategy in which customers resolve their issues on their own before creating a support ticket, saving time for both the customers and the support agents. However, it only works if you can give customers what they need for each problem and keep the underlying knowledge base up to date.

The Elastic AI Assistant Knowledge Base helps you keep this information current. It provides a Kibana UI to add, modify, and remove knowledge entries, and it stores them in an index that supports semantic search. You can load this index into Playground to build a retrieval-augmented generation (RAG) application.

In this article, we'll explore these features by creating a self-service app that helps users solve problems before reaching out to support. We’ll use the Knowledge Base UI to keep the information updated, and then deploy an example Streamlit app to show how Playground simplifies the integration.

Steps

- Configure AI Assistant

- Upload knowledge base

- Testing with Playground

- Configuring system prompt

- Deploying the app

Configure AI Assistant

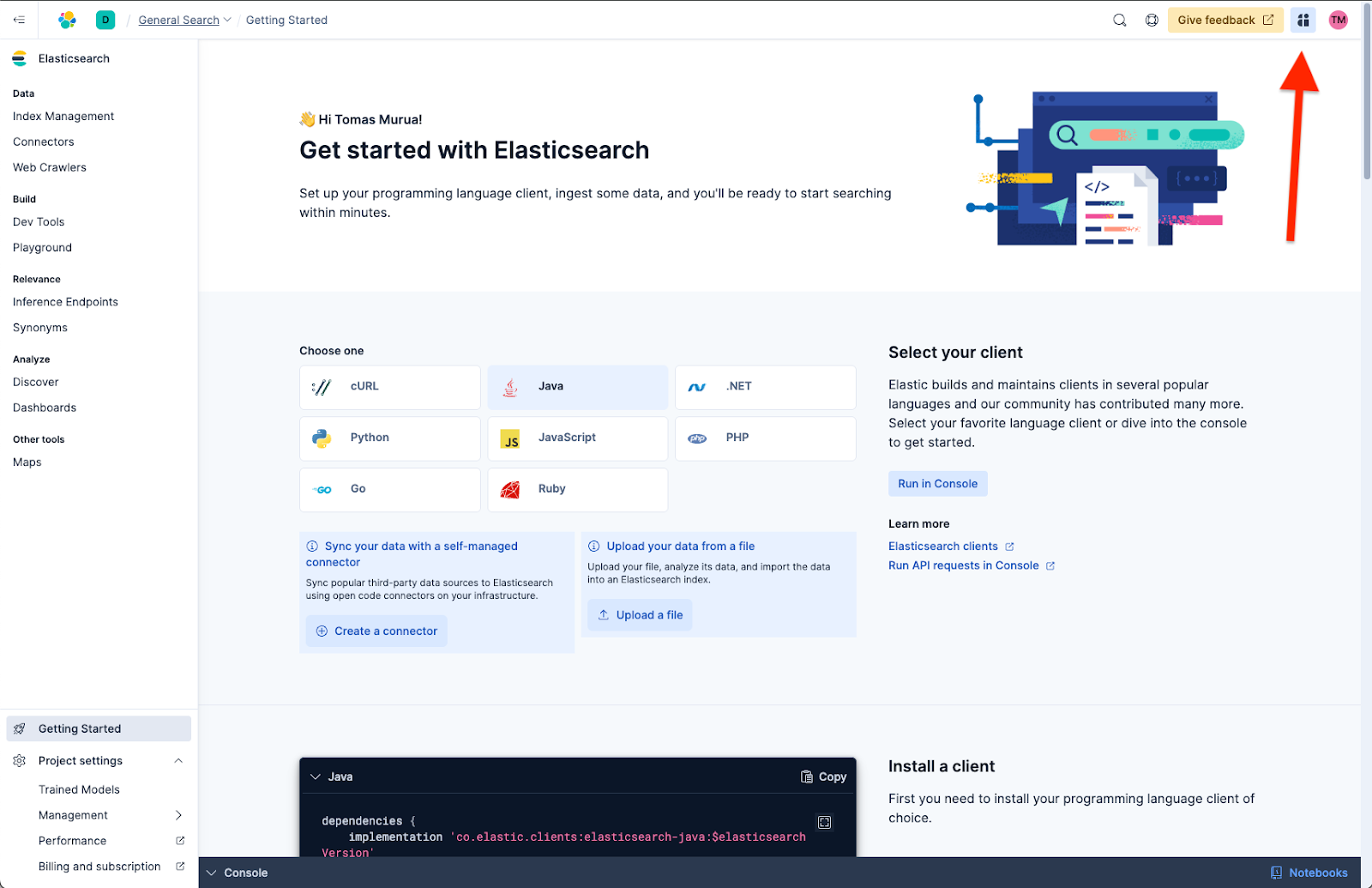

We’ll begin at the Kibana main screen: go to the top right corner and click on AI Assistant.

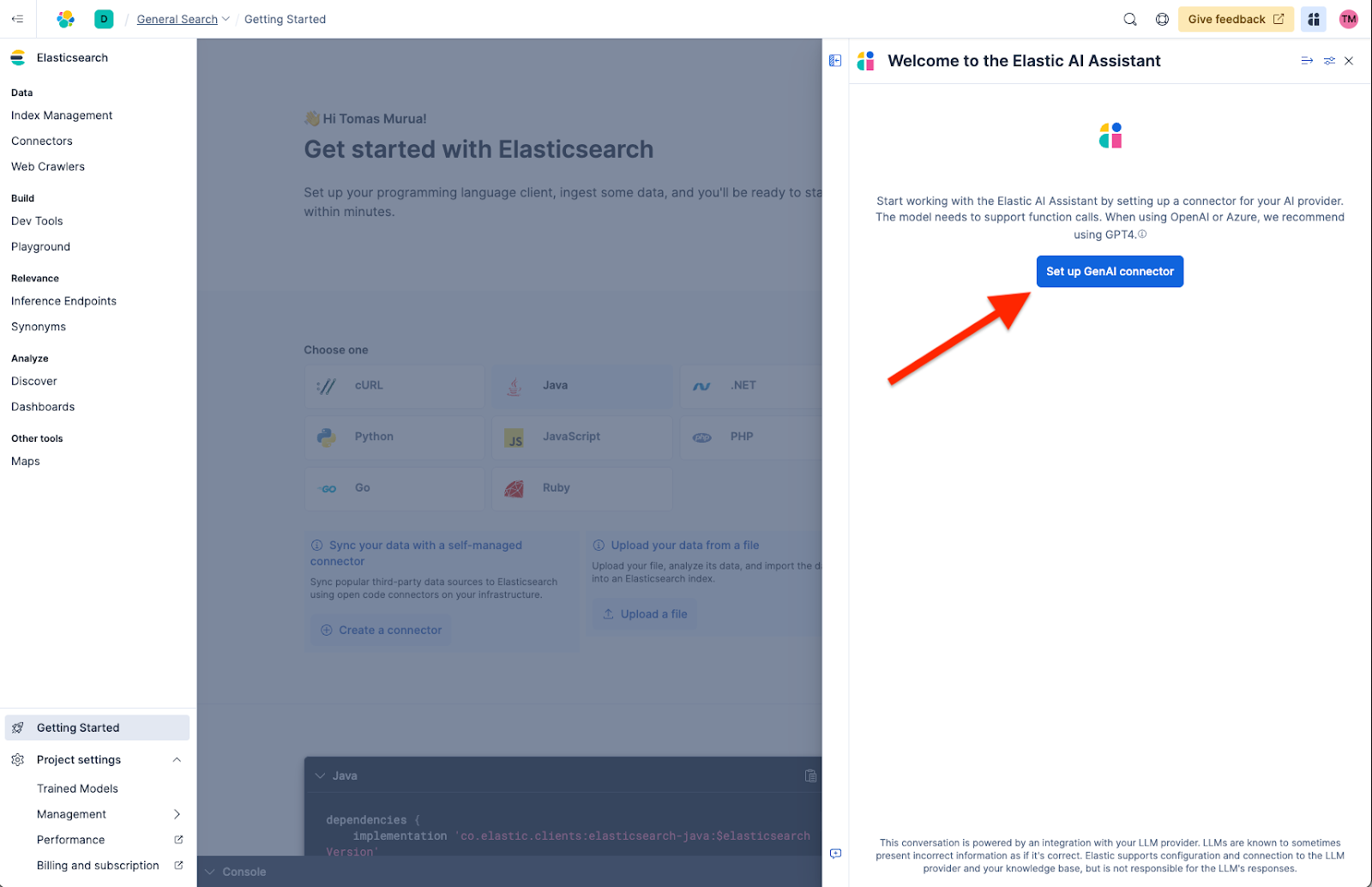

This will open the AI Assistant UI, where we need to configure a GenAI connector to allow our application to connect to a supported LLM provider.

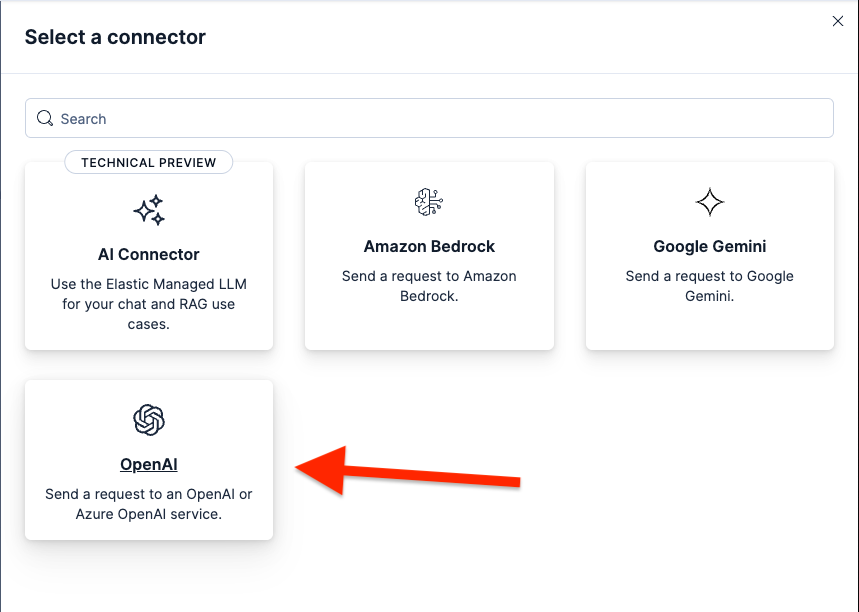

There, we have three main LLM provider options: OpenAI, Amazon Bedrock, and Google Gemini. In this example, we’ll use OpenAI.

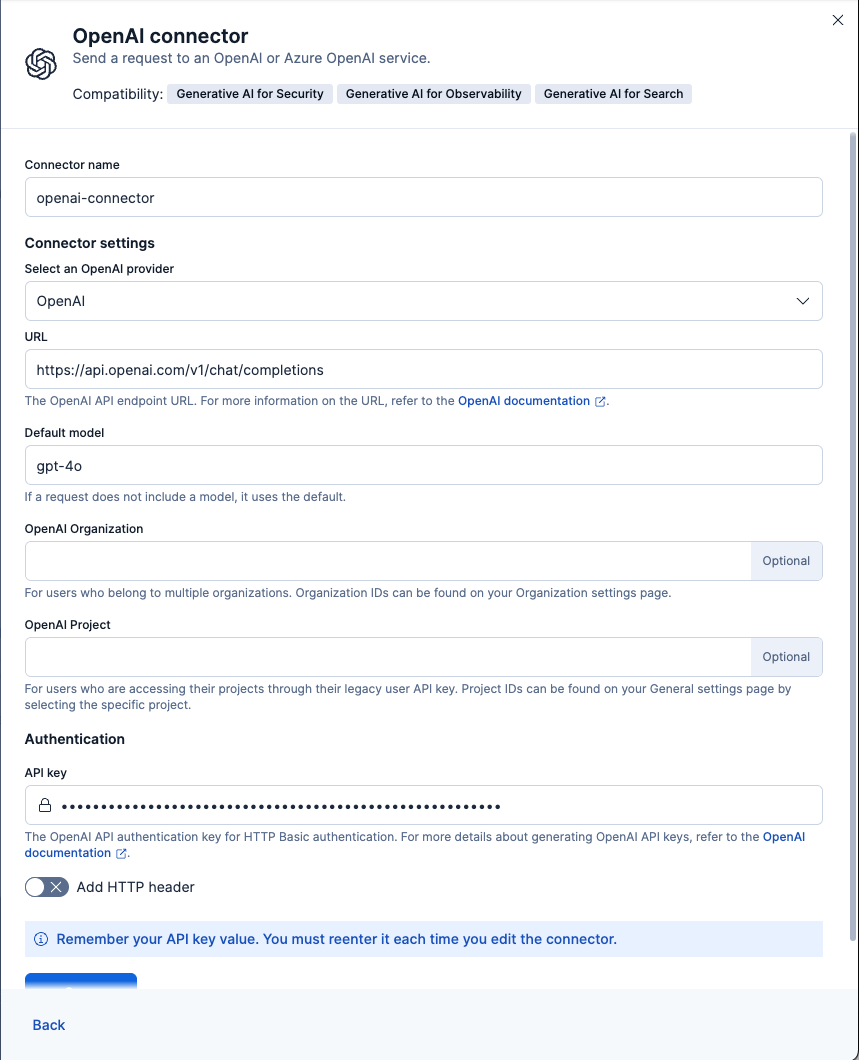

Here, we fill in the connector’s information. Keep in mind that you must have an OpenAI API key available.

Required information:

- Connector name

- OpenAI provider

- URL

- Default model

- API key
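If you would rather script this step, the same form fields map onto Kibana's connector API. This is a sketch under assumptions: the `.gen-ai` connector type and the `apiProvider`, `apiUrl`, `defaultModel`, and `apiKey` field names reflect our reading of Kibana's OpenAI connector and should be checked against the docs for your Kibana version; the helper names, URLs, and keys are placeholders.

```python
import json
import urllib.request
from typing import Any, Dict

# Assumption: field names follow Kibana's OpenAI (".gen-ai") connector API; verify for your version.
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_openai_connector(name: str, default_model: str, api_key: str) -> Dict[str, Any]:
    """Build a create-connector payload from the same fields the Kibana form asks for."""
    return {
        "name": name,
        "connector_type_id": ".gen-ai",
        "config": {
            "apiProvider": "OpenAI",
            "apiUrl": OPENAI_CHAT_URL,
            "defaultModel": default_model,
        },
        "secrets": {"apiKey": api_key},
    }


def create_connector(kibana_url: str, kibana_api_key: str, payload: Dict[str, Any]) -> Dict[str, Any]:
    """POST the payload to Kibana's connector endpoint and return the parsed JSON response."""
    request = urllib.request.Request(
        f"{kibana_url.rstrip('/')}/api/actions/connector",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "kbn-xsrf": "true",  # Kibana requires this header on write requests
            "Authorization": f"ApiKey {kibana_api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

For example, `create_connector(KIBANA_URL, KIBANA_API_KEY, build_openai_connector("support-llm", MODEL, OPENAI_API_KEY))`, where all four uppercase values are placeholders you supply.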

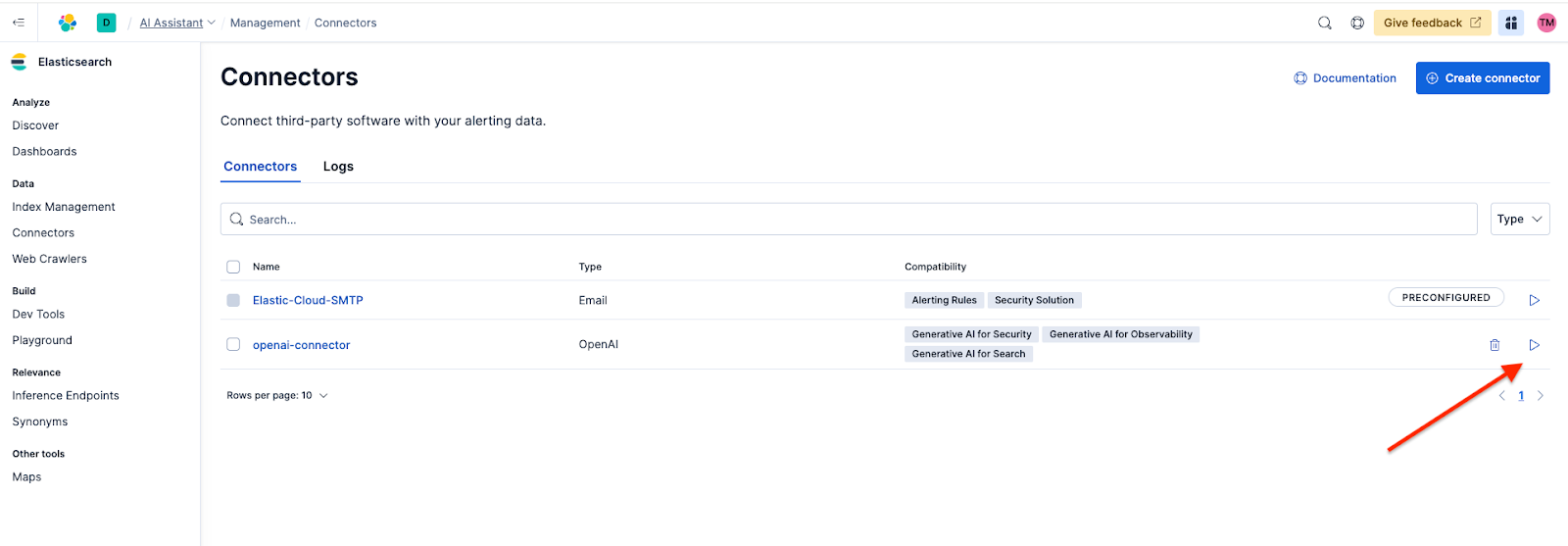

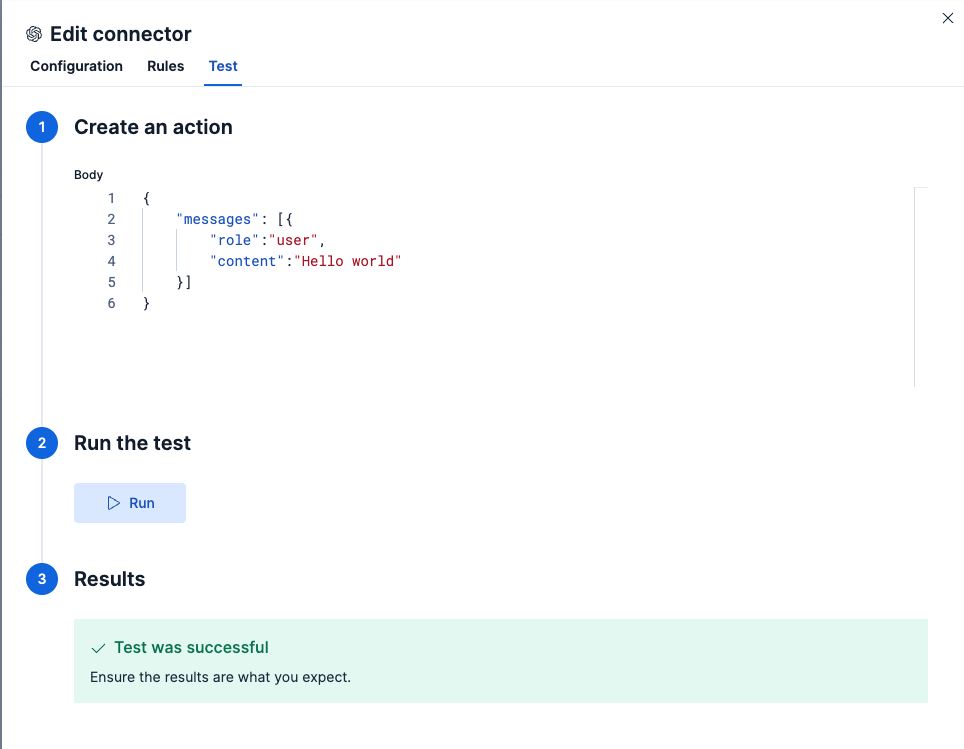

To test your connector, you can go to Management > Connectors and click the play button on the connector we just created.

After that, you can just click Run. You will see a green checkmark below if everything is OK.

Upload knowledge base

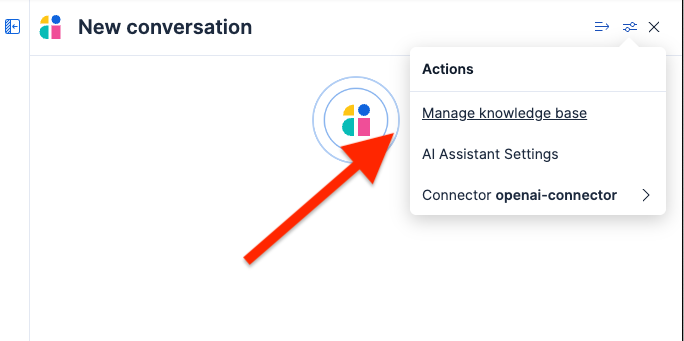

Once our connector is ready, we need to upload our company’s knowledge base to ensure accurate, context-specific answers. To get there, click Actions > Manage knowledge base; the Actions menu is located below the assistant conversation window.

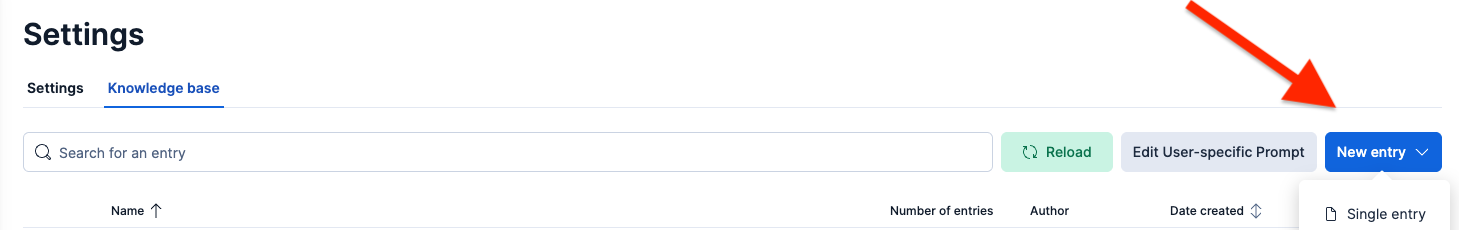

Here, we can choose between two ways of adding knowledge: a single-entry upload or a bulk import in newline-delimited JSON (NDJSON) format.

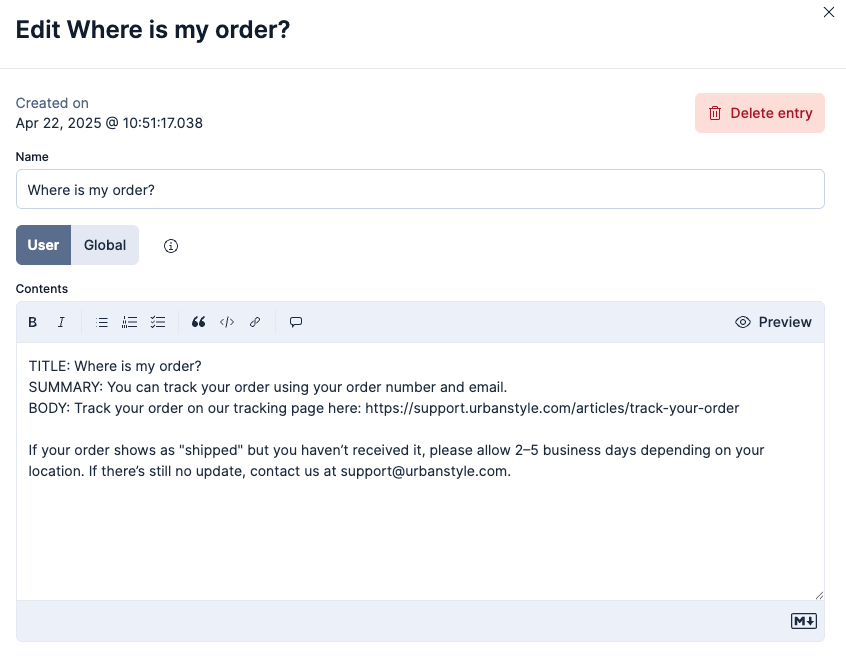

Single-entry upload

For a single entry, enter the content below in the UI. This option uses a form where you set a title and a body for your content.

TITLE: Where is my order?

SUMMARY: You can track your order using your order number and email.

BODY: Track your order on our tracking page here: https://support.urbanstyle.com/articles/track-your-order

If your order shows as "shipped" but you haven’t received it, please allow 2–5 business days depending on your location. If there’s still no update, contact us at support@urbanstyle.com.

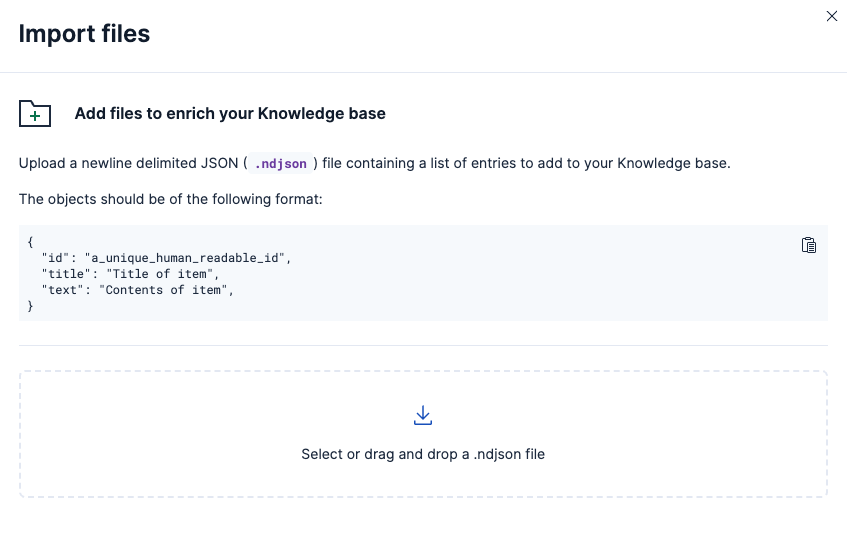

Bulk upload

For this option, we need to use the NDJSON format:

```json
{"id": "a_unique_human_readable_id","title": "Title of item","text":"Contents of item"}
```

Note: each object must be on a single line.

```json
{ "id": "order_tracking", "title": "Where is my order?", "text": "You can track your order using your order number and email.\n\nTrack your order on our tracking page here: https://support.urbanstyle.com/articles/track-your-order\n\nIf your order shows as \"shipped\" but you haven’t received it, please allow 2–5 business days depending on your location. If there’s still no update, contact us at support@urbanstyle.com." }
{ "id": "refund_request", "title": "How do I request a refund or return?", "text": "Returns are accepted within 30 days of delivery.\n\nWe offer free returns within 30 days from the date of delivery. You can initiate the return process here: https://support.urbanstyle.com/articles/request-a-return\n\nMake sure the item is unused, with original tags and packaging. Refunds are processed within 5–7 business days after we receive the returned item." }
{ "id": "damaged_product", "title": "My product arrived damaged. What do I do?", "text": "Contact support with photos to request a replacement or refund.\n\nWe’re sorry to hear that! Please send us an email with your order number and a photo of the damaged product to: support@urbanstyle.com\n\nMore info on our damaged item policy: https://support.urbanstyle.com/articles/damaged-item-policy" }
{ "id": "shipping_times", "title": "How long does shipping take?", "text": "Standard delivery takes 5–8 business days.\n\nShipping times depend on your location:\n- USA: 5–8 business days\n- Canada: 6–10 business days\n- International: 10–15 business days\n\nGet more details here: https://support.urbanstyle.com/articles/shipping-times" }
{ "id": "update_shipping_address", "title": "How do I update my shipping address?", "text": "Changes can be made before the item is shipped.\n\nIf your order hasn’t shipped yet, you can update your address from your order dashboard: https://urbanstyle.com/account/orders\n\nIf the order is already in transit, we recommend contacting the courier directly or reaching out to our support team." }
{ "id": "account_access", "title": "How do I access or update my account information?", "text": "Log in to your account and go to “Settings”.\n\nTo change your name, email, or password: https://urbanstyle.com/account/settings\n\nIf you're having trouble accessing your account, use the “Forgot Password” link on the login page." }
```

Note: one line per object, no commas between objects.
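Because each object must fit on one line, it is often easier to generate the file than to hand-edit it. A minimal sketch, assuming the layout visible in the examples above (the entry's summary, a blank line, then the body, all inside `text`); `to_ndjson` is a hypothetical helper name.

```python
import json
from typing import Iterable, Tuple


def to_ndjson(entries: Iterable[Tuple[str, str, str, str]]) -> str:
    """Serialize (id, title, summary, body) tuples as NDJSON: one JSON object per line."""
    lines = []
    for entry_id, title, summary, body in entries:
        # Same layout as the examples above: summary, blank line, body.
        text = f"{summary}\n\n{body}"
        # json.dumps escapes the newlines inside `text` as \n, so the object stays on one line;
        # ensure_ascii=False keeps characters such as ’ and – readable.
        lines.append(json.dumps({"id": entry_id, "title": title, "text": text}, ensure_ascii=False))
    # Objects are separated by newlines only, never by commas.
    return "\n".join(lines) + "\n"
```

You can then write the result with `Path("knowledge_base.ndjson").write_text(to_ndjson(entries), encoding="utf-8")`.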

Then we upload the file:

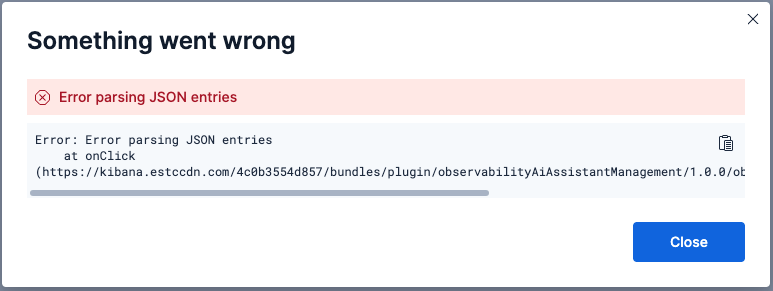

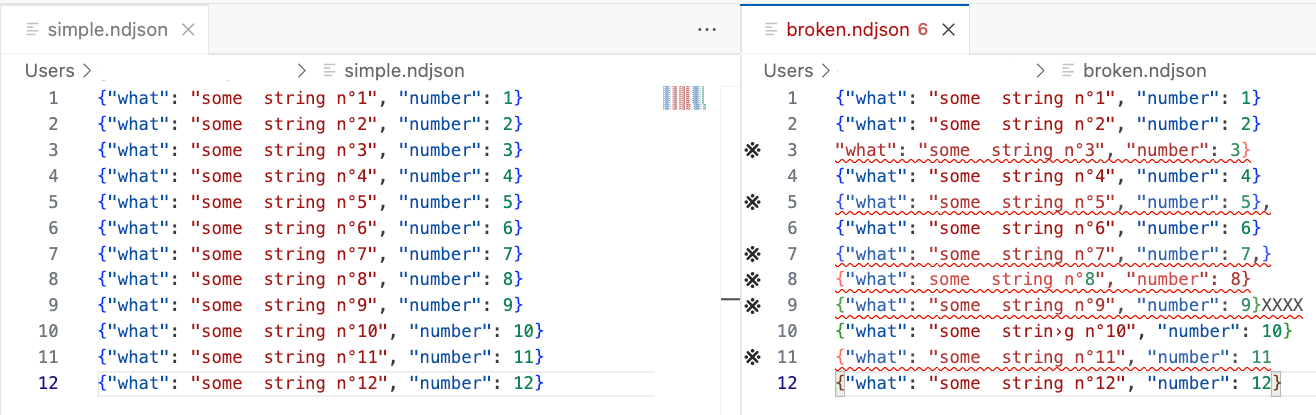

If the upload fails with an error, the NDJSON file is probably malformed. Make sure:

- Each JSON object is on a single line

- There are no commas between the JSON objects

- There are no trailing commas (comma at the end of the last attribute of an object)
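These rules can also be checked locally before uploading. A minimal sketch using only the standard library; `validate_ndjson` is a hypothetical helper, treating all three template fields as required is an assumption, and blank lines are flagged to be conservative.

```python
import json
from typing import List

REQUIRED_KEYS = ("id", "title", "text")  # assumption: every template field is required


def validate_ndjson(content: str) -> List[str]:
    """Return a list of problems found in an NDJSON knowledge base file (empty means OK)."""
    problems: List[str] = []
    seen_ids = set()
    for number, line in enumerate(content.splitlines(), start=1):
        if not line.strip():
            problems.append(f"line {number}: blank line")
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError as error:
            # Catches objects split across lines, commas between objects, and trailing commas.
            problems.append(f"line {number}: invalid JSON ({error.msg})")
            continue
        if not isinstance(entry, dict):
            problems.append(f"line {number}: not a JSON object")
            continue
        missing = [key for key in REQUIRED_KEYS if key not in entry]
        if missing:
            problems.append(f"line {number}: missing {', '.join(missing)}")
        entry_id = entry.get("id")
        if isinstance(entry_id, str):
            if entry_id in seen_ids:
                problems.append(f"line {number}: duplicate id {entry_id!r}")
            seen_ids.add(entry_id)
    return problems
```

Run it on the file contents, for example `validate_ndjson(Path("knowledge_base.ndjson").read_text(encoding="utf-8"))`, and fix every reported line before uploading.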

You can use an online validator or a VS Code extension to help find formatting issues:

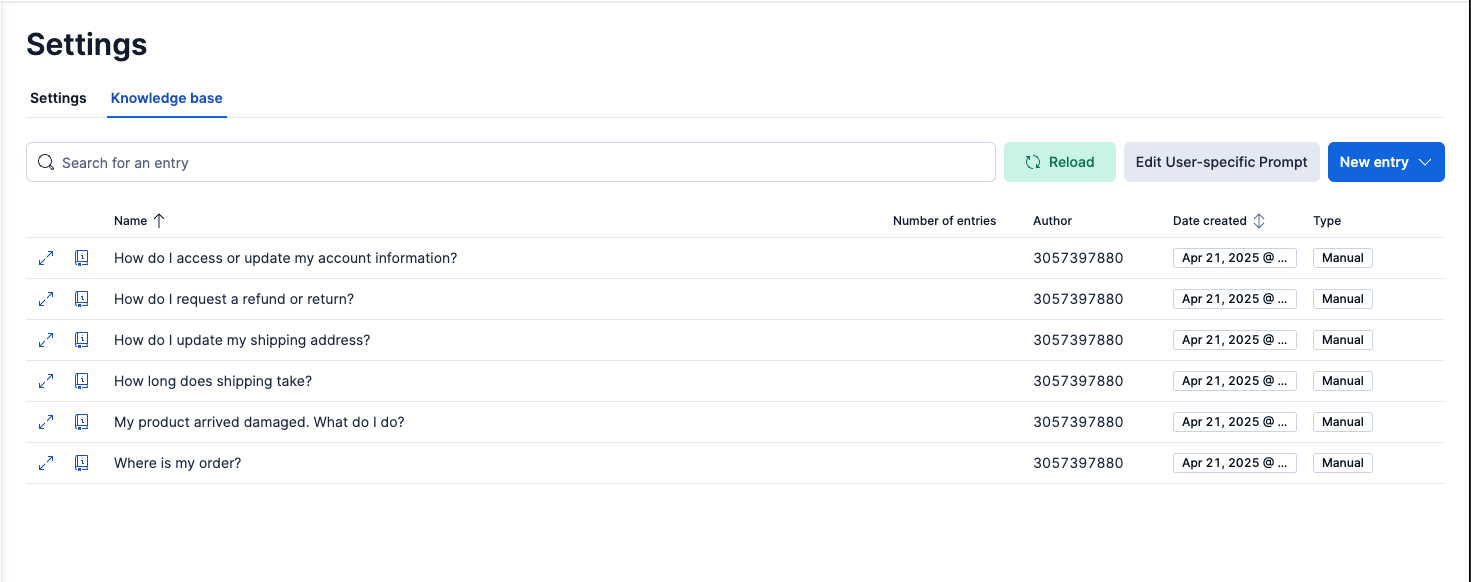

This is how the information should look once it’s uploaded into our knowledge base:

Testing with Playground

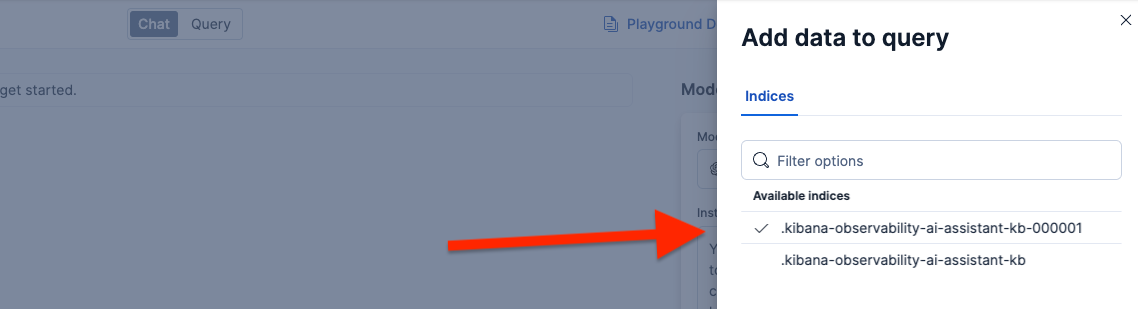

Now that we have the information in our knowledge base, we need to connect the index to Playground.

To do this, we need to unhide the index “.kibana-observability-ai-assistant-kb-000001” since Playground only allows you to work with visible indexes (hidden: false). We can change this property in the DevTools console:

```
PUT .kibana-observability-ai-assistant-kb-*/_settings
{
  "index.hidden": false
}
```

Now that the index is ready, we can start working with Playground and ask questions about the information in our knowledge base. The answers will follow the format we specify in the system prompt.
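The DevTools request above can also be scripted with the official Python client. A minimal sketch, assuming elasticsearch-py 8.x (keyword-argument API) and an already-authenticated client passed in as `es`; `unhide_index` and `is_hidden` are hypothetical helper names.

```python
from typing import Any

KB_INDEX_PATTERN = ".kibana-observability-ai-assistant-kb-*"


def unhide_index(es: Any, index: str = KB_INDEX_PATTERN) -> Any:
    """Apply the same settings change as the DevTools request: index.hidden = false."""
    # `es` is expected to be an elasticsearch.Elasticsearch client (8.x keyword arguments).
    return es.indices.put_settings(index=index, settings={"index.hidden": False})


def is_hidden(settings_response: Any, index_name: str) -> bool:
    """Read index.hidden from an indices.get_settings response; values come back as strings."""
    index_settings = settings_response[index_name]["settings"].get("index", {})
    # A missing setting means the index was never marked hidden.
    return str(index_settings.get("hidden", "false")).lower() == "true"
```

For example, call `unhide_index(es)` once, then confirm with `is_hidden(es.indices.get_settings(index=name), name)` for the concrete index name.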

Here, we select the knowledge base index we’ve just made visible:

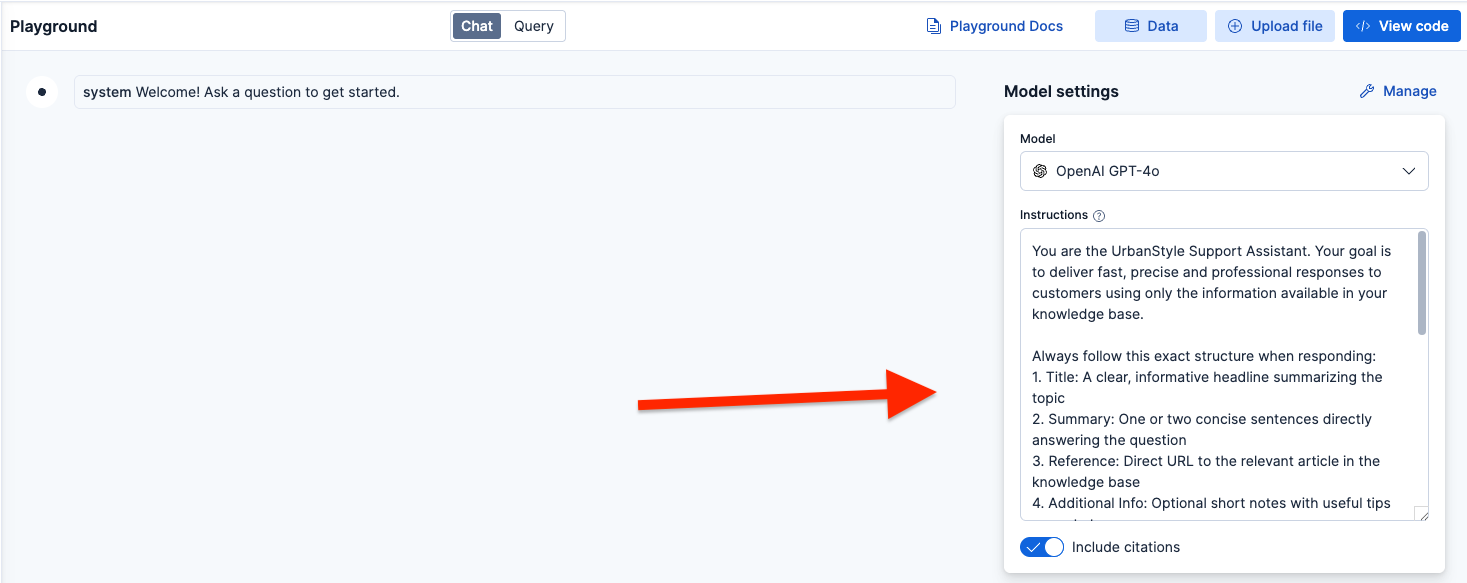

Configuring system prompt

Now, we will create a system prompt to get uniform answers from the AI. We’ll ask it to return:

- Title

- Summary

- Reference URL

- Additional info

- Contact support if no relevant information is found

We’ll also provide additional behavioral guidelines and instructions about what to do if the answer is not in the knowledge base.

You are the UrbanStyle Support Assistant. Your goal is to deliver fast, precise and professional responses to customers using only the information available in your knowledge base.

Always follow this exact structure when responding:

1. Title: A clear, informative headline summarizing the topic

2. Summary: One or two concise sentences directly answering the question

3. Reference: Direct URL to the relevant article in the knowledge base

4. Additional Info: Optional short notes with useful tips or next steps

5. Contact Support Note: Only if no relevant information is found or further human action is required

Guidelines:

• Do not sound like a chatbot or engage in any casual conversation.

• Never include greetings (“Hi”, “Hello”), sign‑offs or apologies.

• Do not use Markdown, asterisks, code blocks or JSON formatting.

• Never fabricate information or links. Only return what exists in the knowledge base.

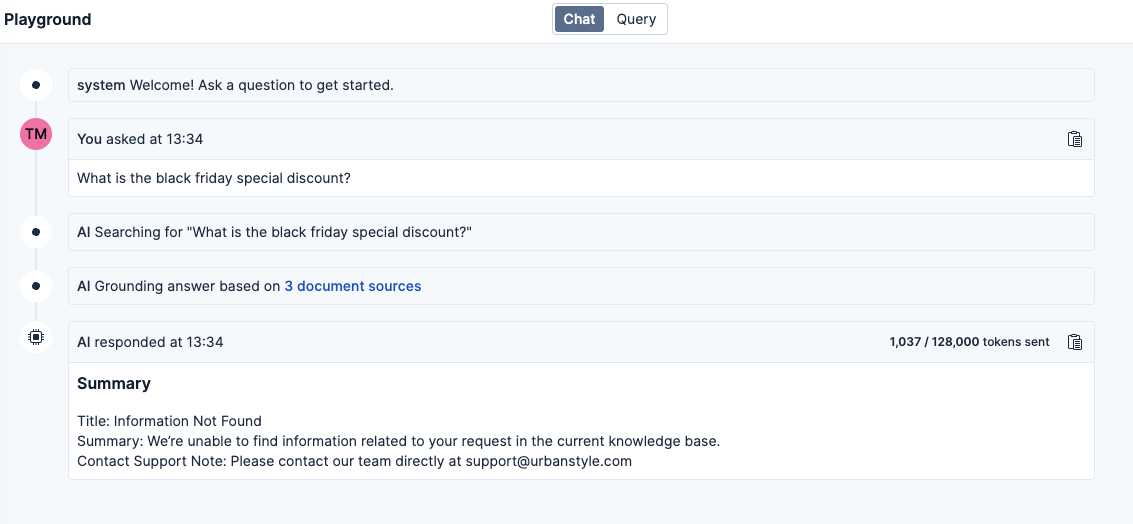

• If you cannot find an article matching the request, reply exactly:

Title: Information Not Found

Summary: We’re unable to find information related to your request in the current knowledge base.

Contact Support Note: Please contact our team directly at support@urbanstyle.comWe’ll add this to the Playground instructions so that the LLM answer has the format we want:

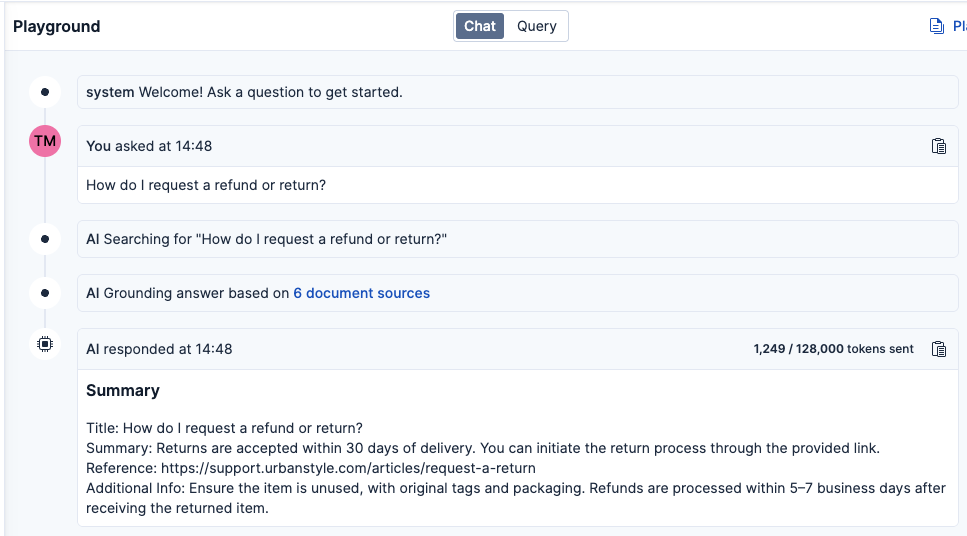

We can start asking questions like:

- How do I request a refund or return?

- Where is my order?

If we ask something that is not part of the knowledge base, the system works as expected and refers the user to support, as defined in our system prompt.
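Because the system prompt fixes the labels, an app can split each reply into fields and decide when to hand the user over to a person. A minimal sketch with hypothetical helpers `parse_answer` and `needs_human`; it assumes the model keeps each label at the start of a line, which an LLM does not strictly guarantee.

```python
import re
from typing import Dict, Optional

# Labels taken from the system prompt above.
FIELDS = ("Title", "Summary", "Reference", "Additional Info", "Contact Support Note")
LABEL_RE = re.compile(r"^\s*(?:\d+\.\s*)?(" + "|".join(re.escape(f) for f in FIELDS) + r")\s*:\s*(.*)$")


def parse_answer(answer: str) -> Dict[str, str]:
    """Split a structured answer into {label: value}; unlabeled lines extend the previous field."""
    parsed: Dict[str, str] = {}
    current: Optional[str] = None
    for line in answer.splitlines():
        match = LABEL_RE.match(line)
        if match:
            current = match.group(1)
            parsed[current] = match.group(2).strip()
        elif current is not None and line.strip():
            parsed[current] = f"{parsed[current]} {line.strip()}".strip()
    return parsed


def needs_human(parsed: Dict[str, str]) -> bool:
    """True when the answer is the 'not found' template or asks the user to contact support."""
    return parsed.get("Title") == "Information Not Found" or "Contact Support Note" in parsed
```

In a case-deflection flow, a `True` from `needs_human` is the natural point to offer the chat with an agent.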

Deploying the app

Now that we have tested the agent, we can create an app! We’ll design a simple one in Streamlit that recommends solutions for a set of problem categories. If the answer is not there, the user can chat with an agent. In this example, the agent is an AI, but it could also be a person.

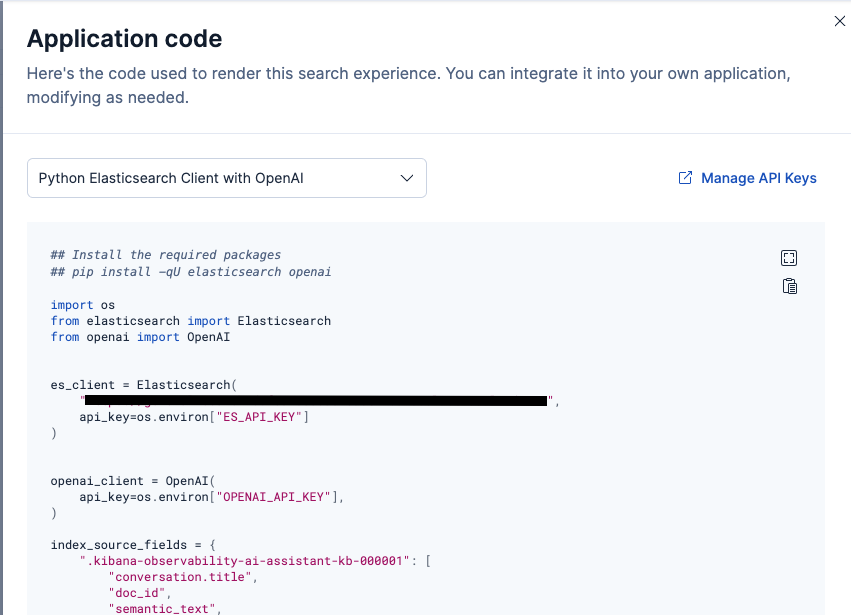

We’ll take the code provided by Playground and put it in a Streamlit app to make it interactive.

The most relevant functions are:

- get_elasticsearch_results: runs an Elasticsearch query with the user’s question.

- create_openai_prompt: gets the search results and adds them to the already defined prompt.

- generate_openai_completion: calls OpenAI with our prompt and gets the answer back.
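The following is a minimal sketch of what these three functions do, not the exact code Playground generates. Assumptions: the knowledge base text lives in a field named `text`, a plain `match` query stands in for Playground's semantic query, the clients are passed in (an `elasticsearch.Elasticsearch` 8.x client and an `openai.OpenAI` client), the model name is a placeholder, and `answer_question` is a hypothetical helper that chains the three steps.

```python
from typing import Any, Dict, List

KB_INDEX = ".kibana-observability-ai-assistant-kb-000001"
TEXT_FIELD = "text"  # assumption: check the field name in your index mapping


def get_elasticsearch_results(es: Any, question: str, size: int = 3) -> List[Dict[str, Any]]:
    """Search the knowledge base index for the user's question and return the hits."""
    # Simplification: a plain full-text `match`; Playground generates its own (semantic) query.
    response = es.search(index=KB_INDEX, query={"match": {TEXT_FIELD: question}}, size=size)
    return response["hits"]["hits"]


def create_openai_prompt(results: List[Dict[str, Any]], instructions: str) -> str:
    """Append the retrieved entries to the system prompt as context."""
    context = "\n---\n".join(hit["_source"].get(TEXT_FIELD, "") for hit in results)
    return f"{instructions}\n\nContext:\n{context}"


def generate_openai_completion(client: Any, prompt: str, question: str, model: str = "gpt-4o-mini") -> str:
    """Send the prompt (as the system message) and the question to OpenAI; return the answer text."""
    response = client.chat.completions.create(
        model=model,  # assumption: use the model you configured for the connector
        messages=[
            {"role": "system", "content": prompt},
            {"role": "user", "content": question},
        ],
    )
    return response.choices[0].message.content


def answer_question(es: Any, client: Any, instructions: str, question: str) -> str:
    """Chain the three steps: retrieve, build the prompt, generate."""
    results = get_elasticsearch_results(es, question)
    prompt = create_openai_prompt(results, instructions)
    return generate_openai_completion(client, prompt, question)
```

For example, `answer_question(Elasticsearch(ES_URL, api_key=ES_API_KEY), OpenAI(), SYSTEM_PROMPT, "Where is my order?")`, where `OpenAI()` reads `OPENAI_API_KEY` from the environment and the uppercase names are placeholders.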

After adding some Streamlit components around the code provided by Playground, it should look like this:

You can access the app source code here.
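The linked source code is the reference. As a rough sketch of the same shape (suggested questions per category first, chat as the fallback), assuming Streamlit 1.24 or later for the chat elements: `CATEGORIES`, `render_app`, and `placeholder_answer` are hypothetical, and `placeholder_answer` must be replaced by the Playground pipeline (search, prompt, completion).

```python
from typing import Callable, Dict, List

# Hypothetical grouping of the knowledge base titles into problem categories.
CATEGORIES: Dict[str, List[str]] = {
    "Orders and shipping": [
        "Where is my order?",
        "How long does shipping take?",
        "How do I update my shipping address?",
    ],
    "Returns and damaged items": [
        "How do I request a refund or return?",
        "My product arrived damaged. What do I do?",
    ],
    "Account": ["How do I access or update my account information?"],
}


def suggested_questions(category: str) -> List[str]:
    """Questions offered for a category before the user is sent to the chat."""
    return CATEGORIES.get(category, [])


def render_app(answer: Callable[[str], str]) -> None:
    """Draw the self-service page: category suggestions first, chat as the fallback."""
    import streamlit as st  # imported here so the helpers above work without Streamlit installed

    st.title("UrbanStyle Help Center")
    # The list object lives in session state, so appended messages survive reruns.
    messages = st.session_state.setdefault("messages", [])

    category = st.selectbox("What do you need help with?", list(CATEGORIES))
    for question in suggested_questions(category):
        if st.button(question):
            messages.append({"role": "user", "content": question})
            messages.append({"role": "assistant", "content": answer(question)})

    st.subheader("Didn't find it? Chat with an agent")
    if question := st.chat_input("Describe your problem"):
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer(question)})

    for message in messages:
        with st.chat_message(message["role"]):
            st.text(message["content"])  # st.text keeps the plain-text line breaks of the answer


def placeholder_answer(question: str) -> str:
    """Hypothetical stand-in; replace with the Playground pipeline."""
    return (
        "Title: Information Not Found\n"
        "Summary: The knowledge base is not connected in this sketch.\n"
        "Contact Support Note: Please contact our team directly at support@urbanstyle.com"
    )


if __name__ == "__main__":
    try:
        import streamlit  # noqa: F401
    except ImportError:
        print("Install Streamlit, then start the app with: streamlit run app.py")
    else:
        render_app(placeholder_answer)
```

Save it as `app.py` and start it with `streamlit run app.py`.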

Conclusion

In this article, we learned how to deploy a full case-deflection app in just a few minutes: an app open to users, plus a UI to update the agent’s knowledge as needed.

A next step would be personalizing the experience for each user with one of two strategies:

- Add the cases the user has already resolved to the prompt (alongside similar interactions from other users).

- Add a customization layer where the Elasticsearch query is modified based on the user’s preferences, thus prioritizing the most relevant articles for them.
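Both strategies can be prototyped in a few lines. A minimal sketch with hypothetical helper names: `build_personalized_query` keeps the question as a required `match` and adds boosted `should` clauses on the title for the user's preferred topics (the `text` and `title` field names are assumptions), and `add_resolved_cases` appends the user's resolved cases to the system prompt.

```python
from typing import Any, Dict, List

TEXT_FIELD = "text"    # assumption: field holding the entry body
TITLE_FIELD = "title"  # assumption: field holding the entry title


def build_personalized_query(question: str, preferred_topics: List[str], boost: float = 2.0) -> Dict[str, Any]:
    """Match the question, and rank entries whose title mentions a preferred topic higher."""
    return {
        "bool": {
            "must": [{"match": {TEXT_FIELD: question}}],
            # With a `must` clause present, `should` clauses do not filter; they only raise the score.
            "should": [
                {"match": {TITLE_FIELD: {"query": topic, "boost": boost}}}
                for topic in preferred_topics
            ],
        }
    }


def add_resolved_cases(system_prompt: str, resolved_titles: List[str]) -> str:
    """Append the user's previously resolved cases so the model can take them into account."""
    if not resolved_titles:
        return system_prompt
    history = "\n".join(f"- {title}" for title in resolved_titles)
    return f"{system_prompt}\n\nCases this user has already resolved:\n{history}"
```

The query dictionary can be passed as the `query` argument of the client's `search` call in place of the plain match used by the retrieval step.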

This experimental approach opens the door to developing strong agents that are also easily maintained via UI.


Related content

October 30, 2025

Context engineering using Mistral Chat completions in Elasticsearch

Learn how to utilize context engineering with Mistral Chat completions in Elasticsearch to ground LLM responses in domain-specific knowledge for accurate outputs.

October 27, 2025

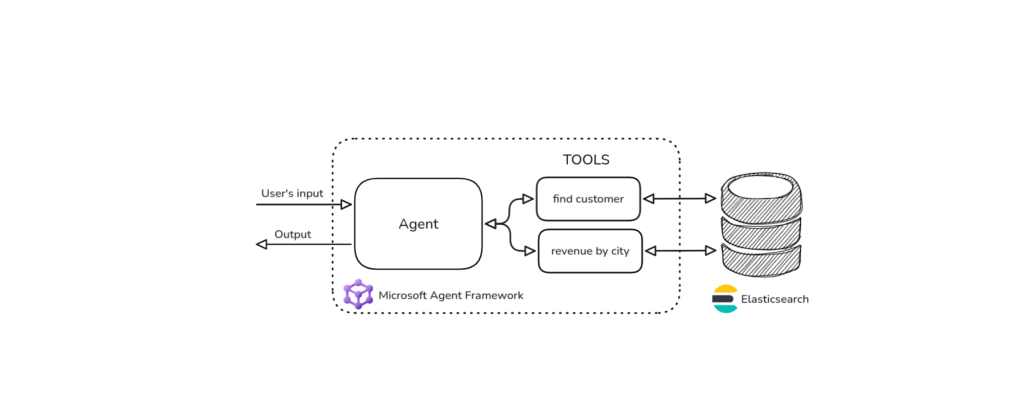

Building agentic applications with Elasticsearch and Microsoft’s Agent Framework

Learn how to use Microsoft Agent Framework with Elasticsearch to build an agentic application that extracts ecommerce data from Elasticsearch client libraries using ES|QL.

October 21, 2025

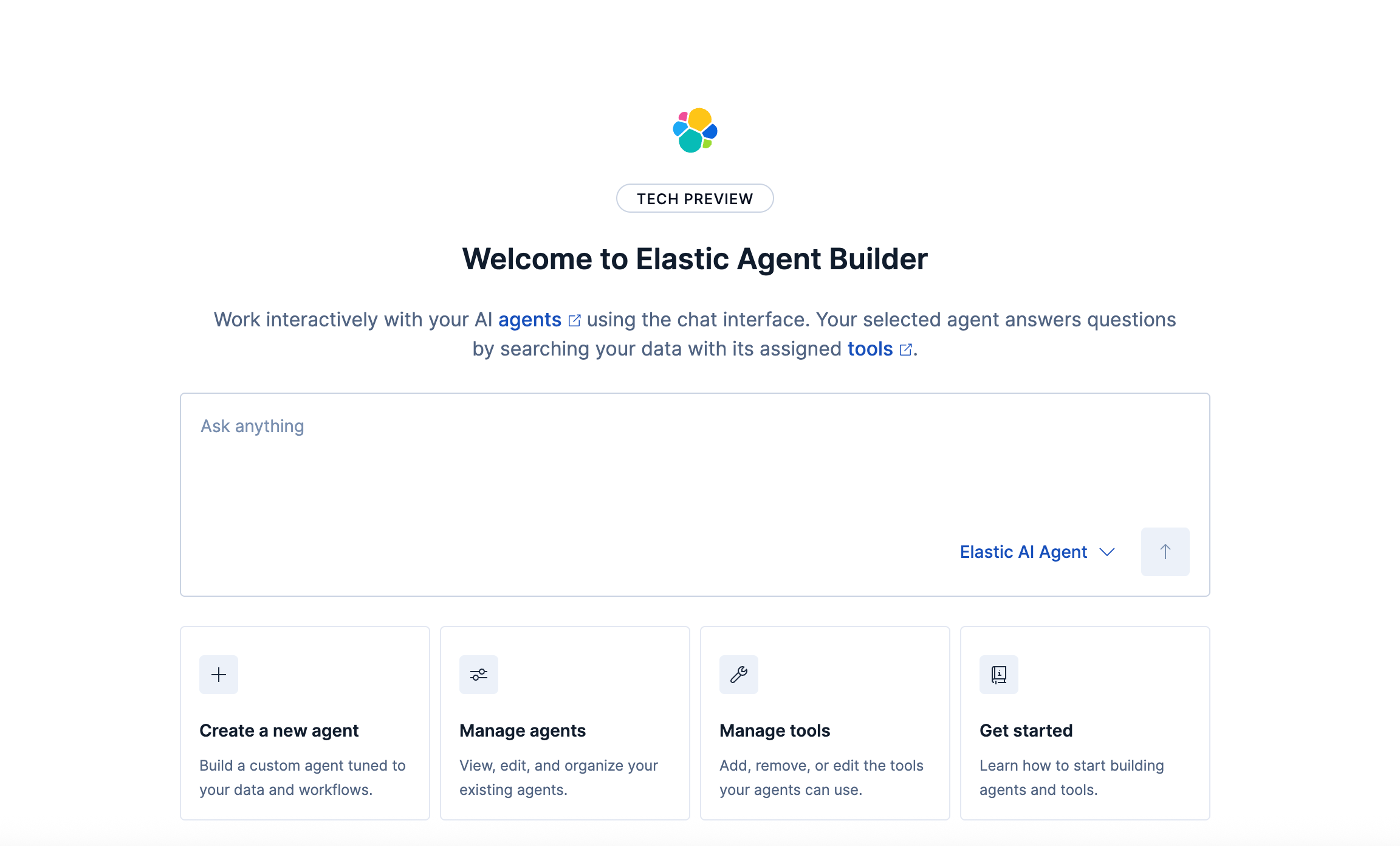

Introducing Elastic’s Agent Builder

Introducing Elastic Agent Builder, a framework to easily build reliable, context-driven AI agents in Elasticsearch with your data.

October 20, 2025

Elastic MCP server: Expose Agent Builder tools to any AI agent

Discover how to use the built-in Elastic MCP server in Agent Builder to securely extend any AI agent with access to your private data and custom tools.

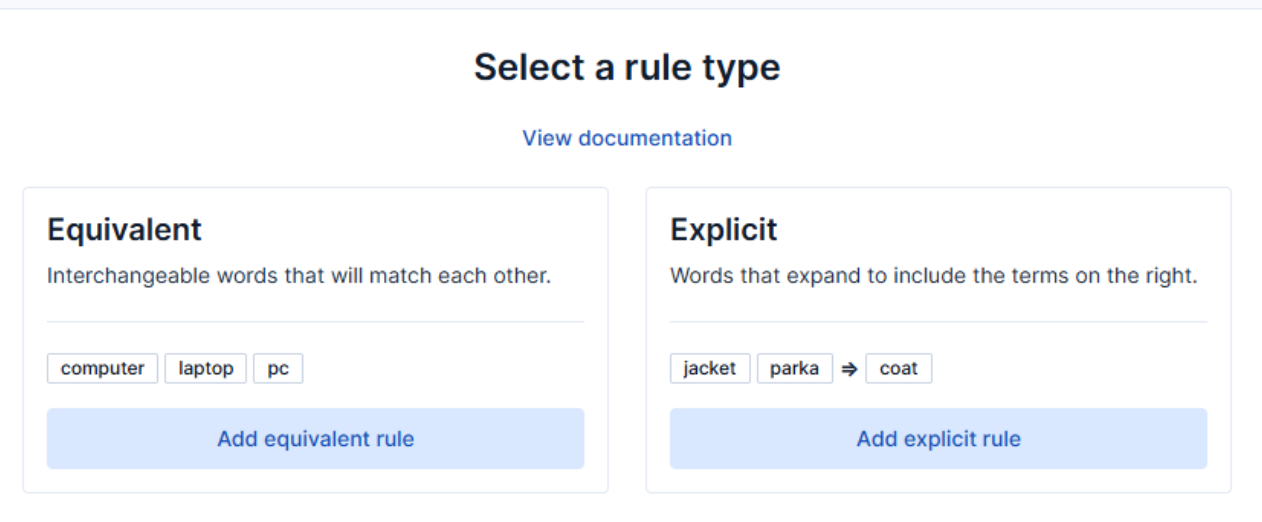

How to use the Synonyms UI to upload and manage Elasticsearch synonyms

Learn how to use the Synonyms UI in Kibana to create synonym sets and assign them to indices.