We are excited to announce our latest addition to the Elasticsearch open inference API: the integration of Google’s Gemini models through Google Cloud’s Vertex AI platform!

The Elasticsearch open inference chat completion API provides a standardized, feature-rich, and familiar way to access large language models. With it, Elasticsearch developers can use large language models, such as Gemini 2.5 Pro, to build GenAI applications for use cases including question answering, summarization, and text generation.

Now, they can use their Google Cloud account for chat completion tasks, store summarizations, utilize Google’s Gemini models in ES|QL or directly through the inference API, and take advantage of Elasticsearch’s comprehensive AI search tooling and proven vector database capabilities.

Using Google’s Gemini models to answer questions and generate tool calls

In this blog, we’ll demonstrate how to use Google’s Gemini models to answer questions about data stored in Elasticsearch using ES|QL. Before we start interacting with Elasticsearch, ensure you have a Google Cloud Vertex AI account and the necessary keys handy. We’ll need them when configuring the Inference Endpoint.

Creating a Vertex AI Service Account and retrieving the keys

1. Go to https://console.cloud.google.com/iam-admin/serviceaccounts

2. Click the button Create service account.

- Enter a name of your choice.

- Click Create and continue.

- Grant the Vertex AI User role.

- Click Add another role and then grant the Service Account Token Creator role. This role allows the service account to generate the necessary access tokens.

- Click Done.

3. Go to https://console.cloud.google.com/iam-admin/serviceaccounts and click the service account you just created.

4. Go to the Keys tab and click Add key -> Create new key -> JSON -> Create.

- If you get the error message "Service account key creation is disabled", your administrator needs to change the organization policy iam.disableServiceAccountKeyCreation or grant an exception.

5. The service account keys should be downloaded automatically.

Now that we’ve downloaded the service account key, let’s format it before we use it to create the Inference Endpoint. The following command escapes the double quotes with sed and removes the newlines with tr.

sed 's/"/\\"/g' <path to your service account file> | tr -d "\n"
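Where sed and tr are not available, the same formatting step can be sketched in Python. This is only an illustration under stated assumptions: the function name `format_service_account_key` is hypothetical and not part of any Elastic or Google tooling. Unlike the sed command, `json.dumps` also escapes backslashes, so the output is not byte-for-byte identical to the command above.

```python
import json


def format_service_account_key(raw_key: str) -> str:
    """Return the key file contents as text that can be pasted between the
    double quotes of the "service_account_json" setting.

    Hypothetical helper for illustration only.
    """
    # Parsing first validates the file and drops newlines and indentation.
    compact = json.dumps(json.loads(raw_key), separators=(",", ":"))
    # Encoding the compact text as a JSON string escapes quotes and
    # backslashes; the slice removes the surrounding double quotes.
    return json.dumps(compact)[1:-1]
```

To use it, read the downloaded key file into a string, pass it to the function, and paste the returned text as the value of service_account_json.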

Setting up the Inference Endpoint and interacting with Google’s Gemini models

We’ll use Kibana’s Console to execute these next steps in Elasticsearch without needing to set up an IDE.

First, we configure an inference endpoint, which will interact with Gemini:

PUT _inference/chat_completion/gemini_chat_completion

{

"service": "googlevertexai",

"service_settings": {

"service_account_json": "<output string from the sed command>",

"model_id": "gemini-2.5-pro",

"location": "us-central1",

"project_id": "<project id>"

}

}

We’ll get back a response similar to the following, with status code 200 OK, on successful inference endpoint creation:

{

"inference_id": "gemini_chat_completion",

"task_type": "chat_completion",

"service": "googlevertexai",

"service_settings": {

"project_id": "<project id>",

"location": "us-central1",

"model_id": "gemini-2.5-pro",

"rate_limit": {

"requests_per_minute": 1000

}

}

}

We can now call the configured endpoint to perform chat completion on any text input and receive the response in real time. Let’s ask Gemini for a short description of ES|QL:

POST _inference/chat_completion/gemini_chat_completion/_stream

{

"messages": [

{

"role": "user",

"content": "What is a short one line description of Elastic ES|QL?"

}

]

}

We should get a streamed response back with status code 200 OK, providing a short description of ES|QL:

event: message

data: {"id":"VvZ3aOSwMpu3nvgPoLfSgQU","choices":[{"delta":{"content":"ES|QL is a powerful, piped query language used","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message

data: {"id":"VvZ3aOSwMpu3nvgPoLfSgQU","choices":[{"delta":{"content":" to search, transform, and aggregate data in Elasticsearch in a single, sequential query.","role":"model"},"finish_reason":"STOP","index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk","usage":{"completion_tokens":28,"prompt_tokens":13,"total_tokens":1469}}

event: message

data: [DONE]

The Inference Chat Completion API supports tool calling, allowing us to interact with Google’s Gemini models hosted on the Vertex AI platform in a robust manner to retrieve information about our data.
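Outside of Kibana, a client has to stitch the streamed chunks back together itself. The following is a minimal sketch of that step, assuming the event stream has already been read into a string; the function name `collect_stream_text` is hypothetical, and the chunk layout is taken from the sample responses in this post.

```python
import json


def collect_stream_text(raw_stream: str) -> str:
    """Concatenate the content deltas from a chat completion event stream."""
    parts = []
    for line in raw_stream.splitlines():
        if not line.startswith("data:"):
            continue  # skip "event: message" lines and blank separators
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            # A delta may carry text content, tool calls, or both;
            # only the text content is collected here.
            parts.append(choice.get("delta", {}).get("content") or "")
    return "".join(parts)
```

Chunks that contain only tool calls contribute an empty string, so the same function can be run over any of the streams shown in this post.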

We’ll ask Gemini to write an ES|QL query to retrieve documents that contain a failure status code in the response field and summarize the results. The response field may not be mapped as an integer, so we’ll need Gemini to retrieve the mapping for us and adjust the query appropriately.

We’ll pretend we have a tool implemented that Gemini can interact with. For this demonstration, we’ll perform the tool call ourselves in the Dev Console.
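For readers who want to picture what such a tool implementation might look like, here is a rough sketch. Everything in it is an assumption made for illustration: the two Python functions are placeholders that return canned values instead of calling Elasticsearch, and `run_tool_call` is a hypothetical dispatcher, not part of the inference API.

```python
import json
from typing import Callable, Dict


def retrieve_index_mapping(index_name: str) -> str:
    # Placeholder: a real tool would call GET <index_name>/_mapping.
    return json.dumps({index_name: {"mappings": {}}})


def execute_esql_query(query: str) -> str:
    # Placeholder: a real tool would send the query to Elasticsearch.
    return json.dumps([])


# Maps the tool names declared in the request to local functions.
TOOLS: Dict[str, Callable[..., str]] = {
    "retrieve_index_mapping": retrieve_index_mapping,
    "execute_esql_query": execute_esql_query,
}


def run_tool_call(tool_call: dict) -> str:
    """Run the function named in a tool call and return its output as text."""
    function = tool_call["function"]
    handler = TOOLS.get(function["name"])
    if handler is None:
        return f"Unknown tool: {function['name']}"
    # The model sends the arguments as a JSON-encoded string.
    arguments = json.loads(function["arguments"])
    try:
        return handler(**arguments)
    except Exception as error:  # errors go back to the model as text
        return f"Tool error: {error}"
```

Returning errors as plain text, rather than raising them, mirrors what we do by hand later in this post when a query fails to parse.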

Initial request:

POST _inference/chat_completion/gemini_chat_completion/_stream

{

"messages": [

{

"role": "user",

"content": "You are an expert in ES|QL, the query language for Elasticsearch. I have added the sample data from https://www.elastic.co/docs/manage-data/ingest/sample-data. The index is called kibana_sample_data_logs. Can you retrieve the mapping, generate and execute an ES|QL query to retrieve the most recent two web logs with a failure status code for the response field? To retrieve the most recent logs please use SORT on the @timestamp field. After retrieving the logs, please summarize the results in a concise manner. The response field may not be mapped as an integer, so be sure to handle it correctly."

}

],

"tools": [

{

"type": "function",

"function": {

"name": "retrieve_index_mapping",

"description": "Retrieves the mapping for an Elasticsearch index.",

"parameters": {

"type": "object",

"properties": {

"index_name": {

"type": "string",

"description": "The name of the index to retrieve the mapping for."

}

},

"required": [

"index_name"

]

}

}

},

{

"type": "function",

"function": {

"name": "execute_esql_query",

"description": "Executes an ES|QL query against an Elasticsearch index.",

"parameters": {

"type": "object",

"properties": {

"query": {

"type": "string",

"description": "The ES|QL query to execute."

}

},

"required": [

"query"

]

}

}

}

],

"tool_choice": "auto",

"temperature": 0.7

}

Gemini responds with a tool call to retrieve the mapping of the index kibana_sample_data_logs:

event: message

data: {"id":"agZ4aP6OGZapnvgPtbCBkQU","choices":[{"delta":{"role":"model","tool_calls":[{"index":0,"id":"retrieve_index_mapping","function":{"arguments":"{\"index_name\":\"kibana_sample_data_logs\"}","name":"retrieve_index_mapping"},"type":"function"}]},"finish_reason":"STOP","index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk","usage":{"completion_tokens":16,"prompt_tokens":182,"total_tokens":663}}

event: message

data: [DONE]

To simulate the tool call, we’ll retrieve the mapping using the following command:

GET kibana_sample_data_logs/_mapping

To finish simulating the tool call, we’ll include the response in the next request to Gemini:

Request with mapping result:

POST _inference/chat_completion/gemini_chat_completion/_stream

{

"messages": [

{

"role": "user",

"content": "You are an expert in ES|QL, the query language for Elasticsearch. I have added the sample data from https://www.elastic.co/docs/manage-data/ingest/sample-data. The index is called kibana_sample_data_logs. Can you retrieve the mapping, generate and execute an ES|QL query to retrieve the most recent two web logs with a failure status code for the response field? To retrieve the most recent logs please use SORT on the @timestamp field. After retrieving the logs, please summarize the results in a concise manner. The response field may not be mapped as an integer, so be sure to handle it correctly."

},

{

"role": "assistant",

"content": "",

"tool_calls": [

{

"function": {

"name": "retrieve_index_mapping",

"arguments": "{\"index_name\":\"kibana_sample_data_logs\"}"

},

"id": "1",

"type": "function"

}

]

},

{

"role": "tool",

"content": "{\".ds-kibana_sample_data_logs-2025.07.16-000001\":{\"mappings\":{\"_data_stream_timestamp\":{\"enabled\":true},\"properties\":{\"@timestamp\":{\"type\":\"date\"},\"agent\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"bytes\":{\"type\":\"long\"},\"bytes_counter\":{\"type\":\"long\",\"time_series_metric\":\"counter\"},\"bytes_gauge\":{\"type\":\"long\",\"time_series_metric\":\"gauge\"},\"clientip\":{\"type\":\"ip\"},\"event\":{\"properties\":{\"dataset\":{\"type\":\"keyword\"}}},\"extension\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"geo\":{\"properties\":{\"coordinates\":{\"type\":\"geo_point\"},\"dest\":{\"type\":\"keyword\"},\"src\":{\"type\":\"keyword\"},\"srcdest\":{\"type\":\"keyword\"}}},\"host\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"index\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"ip\":{\"type\":\"ip\"},\"ip_range\":{\"type\":\"ip_range\"},\"machine\":{\"properties\":{\"os\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"ram\":{\"type\":\"long\"}}},\"memory\":{\"type\":\"double\"},\"message\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"phpmemory\":{\"type\":\"long\"},\"referer\":{\"type\":\"keyword\"},\"request\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"time_series_dimension\":true}}},\"response\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"tags\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"timestamp\":{\"type\":\"alias\",\"path\":\"@timestamp\"},\"timestamp_range\":{\"type\":\"date_range\"},\"url\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"utc_time\":{\"type\":\"date\"}}}}}",

"tool_call_id": "1"

}

],

"tools": [

{

"type": "function",

"function": {

"name": "retrieve_index_mapping",

"description": "Retrieves the mapping for an Elasticsearch index.",

"parameters": {

"type": "object",

"properties": {

"index_name": {

"type": "string",

"description": "The name of the index to retrieve the mapping for."

}

},

"required": [

"index_name"

]

}

}

},

{

"type": "function",

"function": {

"name": "execute_esql_query",

"description": "Executes an ES|QL query against an Elasticsearch index.",

"parameters": {

"type": "object",

"properties": {

"query": {

"type": "string",

"description": "The ES|QL query to execute."

}

},

"required": [

"query"

]

}

}

}

],

"tool_choice": "auto",

"temperature": 0.7

}

Now that Gemini has the mapping, it responds with a tool call to execute a query to retrieve the last two web logs:

event: message

data: {"id":"3AZ4aMfXLJapnvgPtbCBkQU","choices":[{"delta":{"content":"\n","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message

data: {"id":"3AZ4aMfXLJapnvgPtbCBkQU","choices":[{"delta":{"role":"model","tool_calls":[{"index":0,"id":"execute_esql_query","function":{"arguments":"{\"query\":\"FROM kibana_sample_data_logs WHERE TO_INT(response) >= 400 ORDER BY \\\"@timestamp\\\" DESC LIMIT 2\"}","name":"execute_esql_query"},"type":"function"}]},"finish_reason":"STOP","index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk","usage":{"completion_tokens":38,"prompt_tokens":665,"total_tokens":1652}}

event: message

data: [DONE]

If you look closely, you’ll notice that the syntax is missing pipe characters before the different clauses. Our pretend tool call will simulate Kibana and return an error, allowing us to see how Gemini handles the issue. Kibana’s ES|QL query console would respond with this error:

[esql] > Couldn't parse Elasticsearch ES|QL query. Check your query and try again. Error: line 1:30: mismatched input 'WHERE' expecting {<EOF>, '|', ',', 'metadata'}

Let’s include that in the response for the execute_esql_query tool call.
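Each follow-up request in this walkthrough repeats the whole conversation and appends two messages: the assistant’s tool call and a tool message that carries the result under the same ID. A small sketch of that bookkeeping is shown below; the helper name `append_tool_exchange` is hypothetical, and the message layout is copied from the requests in this post.

```python
import json


def append_tool_exchange(messages, call_id, name, arguments, result):
    """Return a new message list with one tool call and its result appended."""
    return messages + [
        {
            "role": "assistant",
            "content": "",
            "tool_calls": [
                {
                    # Arguments travel as a JSON-encoded string.
                    "function": {"name": name, "arguments": json.dumps(arguments)},
                    "id": call_id,
                    "type": "function",
                }
            ],
        },
        # The tool message points back at the call through tool_call_id.
        {"role": "tool", "content": result, "tool_call_id": call_id},
    ]
```

The original list is left untouched, which makes it easy to keep earlier turns around while experimenting with different tool results.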

Request with error:

POST _inference/chat_completion/gemini_chat_completion/_stream

{

"messages": [

{

"role": "user",

"content": "You are an expert in ES|QL, the query language for Elasticsearch. I have added the sample data from https://www.elastic.co/docs/manage-data/ingest/sample-data. The index is called kibana_sample_data_logs. Can you retrieve the mapping, generate and execute an ES|QL query to retrieve the most recent two web logs with a failure status code for the response field? To retrieve the most recent logs please use SORT on the @timestamp field. After retrieving the logs, please summarize the results in a concise manner. The response field may not be mapped as an integer, so be sure to handle it correctly."

},

{

"role": "assistant",

"content": "",

"tool_calls": [

{

"function": {

"name": "retrieve_index_mapping",

"arguments": "{\"index_name\":\"kibana_sample_data_logs\"}"

},

"id": "1",

"type": "function"

}

]

},

{

"role": "tool",

"content": "{\".ds-kibana_sample_data_logs-2025.07.16-000001\":{\"mappings\":{\"_data_stream_timestamp\":{\"enabled\":true},\"properties\":{\"@timestamp\":{\"type\":\"date\"},\"agent\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"bytes\":{\"type\":\"long\"},\"bytes_counter\":{\"type\":\"long\",\"time_series_metric\":\"counter\"},\"bytes_gauge\":{\"type\":\"long\",\"time_series_metric\":\"gauge\"},\"clientip\":{\"type\":\"ip\"},\"event\":{\"properties\":{\"dataset\":{\"type\":\"keyword\"}}},\"extension\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"geo\":{\"properties\":{\"coordinates\":{\"type\":\"geo_point\"},\"dest\":{\"type\":\"keyword\"},\"src\":{\"type\":\"keyword\"},\"srcdest\":{\"type\":\"keyword\"}}},\"host\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"index\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"ip\":{\"type\":\"ip\"},\"ip_range\":{\"type\":\"ip_range\"},\"machine\":{\"properties\":{\"os\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"ram\":{\"type\":\"long\"}}},\"memory\":{\"type\":\"double\"},\"message\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"phpmemory\":{\"type\":\"long\"},\"referer\":{\"type\":\"keyword\"},\"request\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"time_series_dimension\":true}}},\"response\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"tags\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"timestamp\":{\"type\":\"alias\",\"path\":\"@timestamp\"},\"timestamp_range\":{\"type\":\"date_range\"},\"url\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"utc_time\":{\"type\":\"date\"}}}}}",

"tool_call_id": "1"

},

{

"role": "assistant",

"content": "",

"tool_calls": [

{

"function": {

"name": "execute_esql_query",

"arguments": "{\"query\":\"FROM kibana_sample_data_logs WHERE TO_INT(response) >= 400 ORDER BY \\\"@timestamp\\\" DESC LIMIT 2\"}"

},

"id": "2",

"type": "function"

}

]

},

{

"role": "tool",

"content": "[esql] > Couldn't parse Elasticsearch ES|QL query. Check your query and try again. Error: line 1:30: mismatched input 'WHERE' expecting {<EOF>, '|', ',', 'metadata'}",

"tool_call_id": "2"

}

],

"tools": [

{

"type": "function",

"function": {

"name": "retrieve_index_mapping",

"description": "Retrieves the mapping for an Elasticsearch index.",

"parameters": {

"type": "object",

"properties": {

"index_name": {

"type": "string",

"description": "The name of the index to retrieve the mapping for."

}

},

"required": [

"index_name"

]

}

}

},

{

"type": "function",

"function": {

"name": "execute_esql_query",

"description": "Executes an ES|QL query against an Elasticsearch index.",

"parameters": {

"type": "object",

"properties": {

"query": {

"type": "string",

"description": "The ES|QL query to execute."

}

},

"required": [

"query"

]

}

}

}

],

"tool_choice": "auto",

"temperature": 0.7

}

Gemini is able to identify the mistake and produce the correct query:

event: message

data: {"id":"7gh4aPLAMvzcnvgP1q6EgQw","choices":[{"delta":{"content":"\n","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message

data: {"id":"7gh4aPLAMvzcnvgP1q6EgQw","choices":[{"delta":{"content":"I","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message

data: {"id":"7gh4aPLAMvzcnvgP1q6EgQw","choices":[{"delta":{"content":"'m sorry, it seems I made a mistake in the previous ES|QL query. Let me correct it and try again. The different parts of the query should be separated by a pipe `|` character.","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message

data: {"id":"7gh4aPLAMvzcnvgP1q6EgQw","choices":[{"delta":{"role":"model","tool_calls":[{"index":0,"id":"execute_esql_query","function":{"arguments":"{\"query\":\"FROM kibana_sample_data_logs | WHERE TO_INT(response) >= 400 | ORDER BY \\\"@timestamp\\\" DESC | LIMIT 2\"}","name":"execute_esql_query"},"type":"function"}]},"finish_reason":"STOP","index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk","usage":{"completion_tokens":85,"prompt_tokens":750,"total_tokens":1620}}

event: message

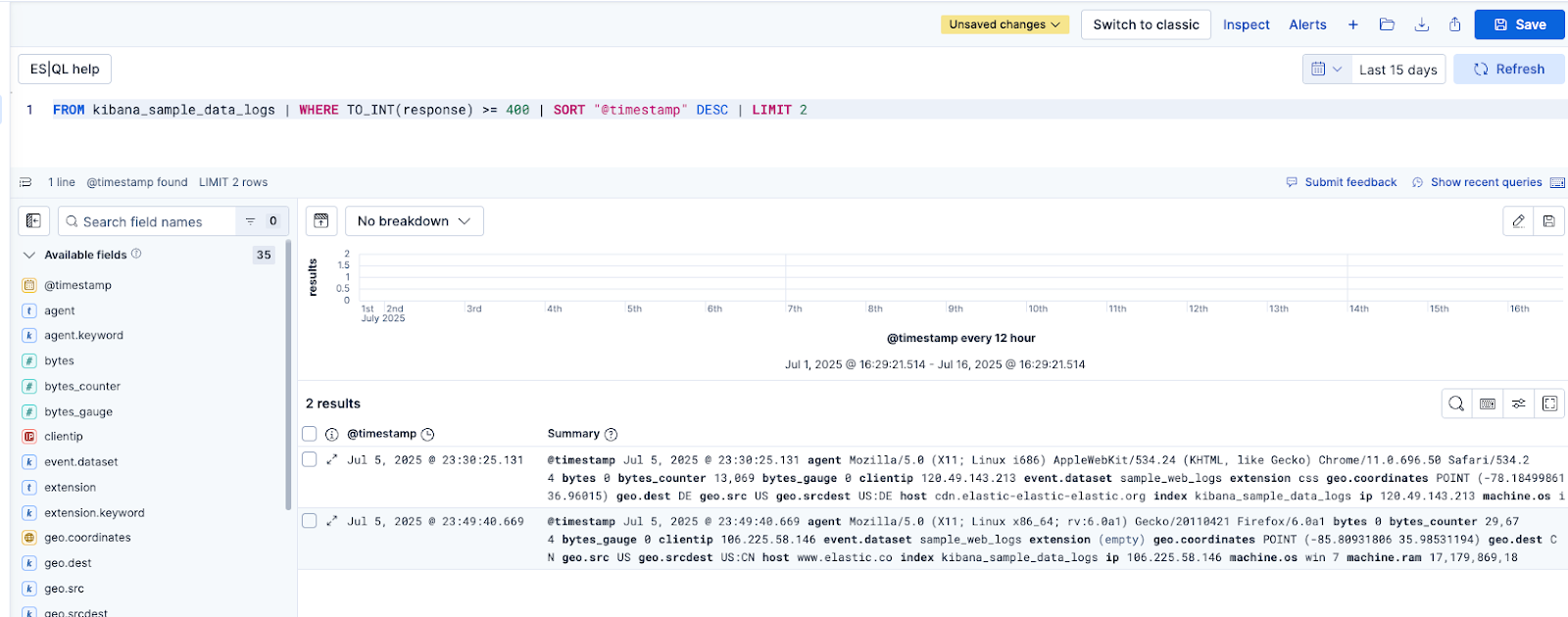

data: [DONE]Our next message will include the results from executing the correctly formatted query in Kibana. To retrieve the documents, we can either execute the query directly in Discover and copy the document contents or execute the query in Dev Tools. Let’s pretend our tool implementation used Discover and retrieved the full source for the documents.
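If the query is run through the ES|QL query API rather than Discover, the JSON response lists the column definitions and the row values separately. The sketch below pairs them back up into one object per document, similar to the tool result used in the next request. The function name `rows_to_documents` is hypothetical, and the sample response is trimmed to two columns for illustration.

```python
def rows_to_documents(esql_response: dict) -> list:
    """Pair each row of values with the column names."""
    names = [column["name"] for column in esql_response["columns"]]
    return [dict(zip(names, row)) for row in esql_response["values"]]


# Trimmed, illustrative response shape with two columns only.
sample_response = {
    "columns": [
        {"name": "@timestamp", "type": "date"},
        {"name": "response", "type": "text"},
    ],
    "values": [
        ["2025-07-06T03:49:40.669Z", "503"],
        ["2025-07-06T03:30:25.131Z", "503"],
    ],
}
documents = rows_to_documents(sample_response)
```

Serializing `documents` with `json.dumps` would give a string suitable for the content of a tool message.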

Request with document results:

POST _inference/chat_completion/gemini_chat_completion/_stream

{

"messages": [

{

"role": "user",

"content": "You are an expert in ES|QL, the query language for Elasticsearch. I have added the sample data from https://www.elastic.co/docs/manage-data/ingest/sample-data. The index is called kibana_sample_data_logs. Can you retrieve the mapping, generate and execute an ES|QL query to retrieve the most recent two web logs with a failure status code for the response field? To retrieve the most recent logs please use SORT on the @timestamp field. After retrieving the logs, please summarize the results in a concise manner. The response field may not be mapped as an integer, so be sure to handle it correctly."

},

{

"role": "assistant",

"content": "",

"tool_calls": [

{

"function": {

"name": "retrieve_index_mapping",

"arguments": "{\"index_name\":\"kibana_sample_data_logs\"}"

},

"id": "1",

"type": "function"

}

]

},

{

"role": "tool",

"content": "{\".ds-kibana_sample_data_logs-2025.07.16-000001\":{\"mappings\":{\"_data_stream_timestamp\":{\"enabled\":true},\"properties\":{\"@timestamp\":{\"type\":\"date\"},\"agent\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"bytes\":{\"type\":\"long\"},\"bytes_counter\":{\"type\":\"long\",\"time_series_metric\":\"counter\"},\"bytes_gauge\":{\"type\":\"long\",\"time_series_metric\":\"gauge\"},\"clientip\":{\"type\":\"ip\"},\"event\":{\"properties\":{\"dataset\":{\"type\":\"keyword\"}}},\"extension\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"geo\":{\"properties\":{\"coordinates\":{\"type\":\"geo_point\"},\"dest\":{\"type\":\"keyword\"},\"src\":{\"type\":\"keyword\"},\"srcdest\":{\"type\":\"keyword\"}}},\"host\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"index\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"ip\":{\"type\":\"ip\"},\"ip_range\":{\"type\":\"ip_range\"},\"machine\":{\"properties\":{\"os\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"ram\":{\"type\":\"long\"}}},\"memory\":{\"type\":\"double\"},\"message\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"phpmemory\":{\"type\":\"long\"},\"referer\":{\"type\":\"keyword\"},\"request\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"time_series_dimension\":true}}},\"response\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"tags\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"timestamp\":{\"type\":\"alias\",\"path\":\"@timestamp\"},\"timestamp_range\":{\"type\":\"date_range\"},\"url\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"utc_time\":{\"type\":\"date\"}}}}}",

"tool_call_id": "1"

},

{

"role": "assistant",

"content": "",

"tool_calls": [

{

"function": {

"name": "execute_esql_query",

"arguments": "{\"query\":\"FROM kibana_sample_data_logs WHERE TO_INT(response) >= 400 SORT \\\"@timestamp\\\" DESC LIMIT 2\"}"

},

"id": "2",

"type": "function"

}

]

},

{

"role": "tool",

"content": "[esql] > Couldn't parse Elasticsearch ES|QL query. Check your query and try again. Error: line 1:30: mismatched input 'WHERE' expecting {<EOF>, '|', ',', 'metadata'}",

"tool_call_id": "2"

},

{

"role": "assistant",

"content": "",

"tool_calls": [

{

"function": {

"name": "execute_esql_query",

"arguments": "{\"query\":\"FROM kibana_sample_data_logs | WHERE TO_INT(response) >= 400 | SORT \\\"@timestamp\\\" DESC | LIMIT 2\"}"

},

"id": "3",

"type": "function"

}

]

},

{

"role": "tool",

"content": "[{\"@timestamp\":\"2025-07-06T03:30:25.131Z\",\"agent\":\"Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24\",\"agent.keyword\":\"Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24\",\"bytes\":0,\"bytes_counter\":13069,\"bytes_gauge\":0,\"clientip\":\"120.49.143.213\",\"event.dataset\":\"sample_web_logs\",\"extension\":\"css\",\"extension.keyword\":\"css\",\"geo.coordinates\":\"POINT (-78.18499861 36.96015)\",\"geo.dest\":\"DE\",\"geo.src\":\"US\",\"geo.srcdest\":\"US:DE\",\"host\":\"cdn.elastic-elastic-elastic.org\",\"host.keyword\":\"cdn.elastic-elastic-elastic.org\",\"index\":\"kibana_sample_data_logs\",\"index.keyword\":\"kibana_sample_data_logs\",\"ip\":\"120.49.143.213\",\"machine.os\":\"ios\",\"machine.os.keyword\":\"ios\",\"machine.ram\":20401094656,\"message\":\"120.49.143.213 - - [2018-07-22T03:30:25.131Z] \\\"GET /styles/main.css_1 HTTP/1.1\\\" 503 0 \\\"-\\\" \\\"Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24\\\"\",\"message.keyword\":\"120.49.143.213 - - [2018-07-22T03:30:25.131Z] \\\"GET /styles/main.css_1 HTTP/1.1\\\" 503 0 \\\"-\\\" \\\"Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24\\\"\",\"referer\":\"http://twitter.com/success/konstantin-feoktistov\",\"request\":\"/styles/main.css\",\"request.keyword\":\"/styles/main.css\",\"response\":\"503\",\"response.keyword\":\"503\",\"tags\":[\"success\",\"login\"],\"tags.keyword\":[\"login\",\"success\"],\"timestamp\":\"2025-07-06T03:30:25.131Z\",\"url\":\"https://cdn.elastic-elastic-elastic.org/styles/main.css_1\",\"url.keyword\":\"https://cdn.elastic-elastic-elastic.org/styles/main.css_1\",\"utc_time\":\"2025-07-06T03:30:25.131Z\"},{\"@timestamp\":\"2025-07-06T03:49:40.669Z\",\"agent\":\"Mozilla/5.0 (X11; Linux x86_64; rv:6.0a1) Gecko/20110421 Firefox/6.0a1\",\"agent.keyword\":\"Mozilla/5.0 (X11; Linux x86_64; rv:6.0a1) Gecko/20110421 Firefox/6.0a1\",\"bytes\":0,\"bytes_counter\":29674,\"bytes_gauge\":0,\"clientip\":\"106.225.58.146\",\"event.dataset\":\"sample_web_logs\",\"extension\":\"\",\"extension.keyword\":\"\",\"geo.coordinates\":\"POINT (-85.80931806 35.98531194)\",\"geo.dest\":\"CN\",\"geo.src\":\"US\",\"geo.srcdest\":\"US:CN\",\"host\":\"www.elastic.co\",\"host.keyword\":\"www.elastic.co\",\"index\":\"kibana_sample_data_logs\",\"index.keyword\":\"kibana_sample_data_logs\",\"ip\":\"106.225.58.146\",\"machine.os\":\"win 7\",\"machine.os.keyword\":\"win 7\",\"machine.ram\":17179869184,\"message\":\"106.225.58.146 - - [2018-07-22T03:49:40.669Z] \\\"GET /apm_1 HTTP/1.1\\\" 503 0 \\\"-\\\" \\\"Mozilla/5.0 (X11; Linux x86_64; rv:6.0a1) Gecko/20110421 Firefox/6.0a1\\\"\",\"message.keyword\":\"106.225.58.146 - - [2018-07-22T03:49:40.669Z] \\\"GET /apm_1 HTTP/1.1\\\" 503 0 \\\"-\\\" \\\"Mozilla/5.0 (X11; Linux x86_64; rv:6.0a1) Gecko/20110421 Firefox/6.0a1\\\"\",\"referer\":\"http://www.elastic-elastic-elastic.com/success/richard-o-covey\",\"request\":\"/apm\",\"request.keyword\":\"/apm\",\"response\":\"503\",\"response.keyword\":\"503\",\"tags\":[\"success\",\"security\"],\"tags.keyword\":[\"security\",\"success\"],\"timestamp\":\"2025-07-06T03:49:40.669Z\",\"url\":\"https://www.elastic.co/downloads/apm_1\",\"url.keyword\":\"https://www.elastic.co/downloads/apm_1\",\"utc_time\":\"2025-07-06T03:49:40.669Z\"}]",

"tool_call_id": "3"

}

],

"tools": [

{

"type": "function",

"function": {

"name": "retrieve_index_mapping",

"description": "Retrieves the mapping for an Elasticsearch index.",

"parameters": {

"type": "object",

"properties": {

"index_name": {

"type": "string",

"description": "The name of the index to retrieve the mapping for."

}

},

"required": [

"index_name"

]

}

}

},

{

"type": "function",

"function": {

"name": "execute_esql_query",

"description": "Executes an ES|QL query against an Elasticsearch index.",

"parameters": {

"type": "object",

"properties": {

"query": {

"type": "string",

"description": "The ES|QL query to execute."

}

},

"required": [

"query"

]

}

}

}

],

"tool_choice": "auto",

"temperature": 0.7

}

Gemini’s last response reiterates what the conversation has covered so far and summarizes the two retrieved documents, just like we asked:

event: message

data: {"id":"5Qx4aKerCZj3ld8PnuzrqAQ","choices":[{"delta":{"content":"\nthought\n1. **Search**: The user wants to query Elasticsearch using ES|QL. The available tool `execute_esql_query` is a perfect match for this.\n\n2. **Parameters**: The `execute_esql_query` tool requires a `query` string.\n\n3. **Query Generation**: The user wants to \"retrieve the most recent two web logs with a failure status code for the response field\". They also mention that the `response` field might not be an integer and that we should use `SORT` on the `@timestamp` field.\n\n - **FROM clause**: The index is specified as `kibana_sample_data_logs`. So, `FROM kibana_sample_data_logs`.\n - **WHERE clause**: A \"failure status code\" is typically a 4xx or 5xx code. The user also mentions the `response` field might not be an integer. The `TO_INT()` function in ES|QL can be used to cast the `response` field to an integer for numerical comparison. So, the condition will be `TO_INT(response) >= 400`.\n - **SORT clause**: The user wants the \"most recent\" logs and explicitly asks to \"use SORT on the @timestamp field\". To get the most recent, we need to sort in descending order. So, `SORT \"@timestamp\" DESC`.\n - **LIMIT clause**: The user wants the \"most recent two\" logs. So, `LIMIT 2`.\n\n4. **Initial Query Construction**: Putting it all together, the initial query is `FROM kibana_sample_data_logs WHERE TO_INT(response) >= 400 SORT \"@timestamp\" DESC LIMIT 2`.\n\n5. **Tool Call**: Call the `execute_esql_query` tool with the generated query.\n\n6. **Execution Analysis**: The first tool call `print(default_api.execute_esql_query(query = \"FROM kibana_sample_data_logs WHERE TO_INT(response) >= 400 SORT \\\"@timestamp\\\" DESC LIMIT 2\"))` fails with a parsing error: `mismatched input 'WHERE' expecting {<EOF>, '|', ',', 'metadata'}`.\n\n7. **Correction**: The error message indicates that the `WHERE` keyword is not expected after the `FROM` clause. In ES|QL, pipes (`|`) are used to separate different stages of the query. The correct syntax is to pipe the output of the `FROM` clause to the `WHERE` clause.\n\n8. **Corrected Query Construction**: The corrected query is `FROM kibana_sample_data_logs | WHERE TO_INT(response) >= 400 | SORT \"@timestamp\" DESC | LIMIT 2`.\n\n9. **Second Tool Call**: Call the `execute_esql_query` tool with the corrected query.\n\n10. **Execution Analysis**: The second tool call is successful and returns a JSON object with two log entries.\n\n11. **Summarization**: The user wants the results to be summarized \"in a concise manner\". I need to extract the key information from the returned JSON and present it to the user.\n\n - The first log has a timestamp of `2025-07-06T03:30:25.131Z`, a response code of `503`, a client IP of `120.49.143.213`, and the request was for `/styles/main.css`.\n - The second log has a timestamp of `2025-07-06T03:49:40.669Z`, a response code of `503`, a client IP of `106.225.58.146`, and the request was for `/apm`.\n\n12. **Final Response**: Combine the corrected action and the summary into a final response for the user. Acknowledge the initial error and explain the correction. Then, present the summarized results.","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message

data: {"id":"5Qx4aKerCZj3ld8PnuzrqAQ","choices":[{"delta":{"content":"I have retrieved the mapping for the `kibana_sample_data_logs` index and executed an ES|QL query to retrieve the two most recent web logs with a failure status code.\n\nI initially made a mistake in the ES|QL syntax,","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message

data: {"id":"5Qx4aKerCZj3ld8PnuzrqAQ","choices":[{"delta":{"content":" but I have corrected it and successfully retrieved the data.\n\nHere is a summary of the results:\n\nThe most recent web log with a failure status code has a timestamp of **2025-07-06T03:49:40.669Z**, a response code of","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message

data: {"id":"5Qx4aKerCZj3ld8PnuzrqAQ","choices":[{"delta":{"content":" **503**, a client IP of **106.225.58.146** and the request was for **/apm**.\n\nThe second most recent web log with a failure status code has a timestamp of **2025-07-06T03:30:","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message

data: {"id":"5Qx4aKerCZj3ld8PnuzrqAQ","choices":[{"delta":{"content":"25.131Z**, a response code of **503**, a client IP of **120.49.143.213** and the request was for **/styles/main.css**.","role":"model"},"finish_reason":"STOP","index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk","usage":{"completion_tokens":1099,"prompt_tokens":2287,"total_tokens":3386}}

event: message

data: [DONE]

Using ES|QL’s Completion command to summarize results

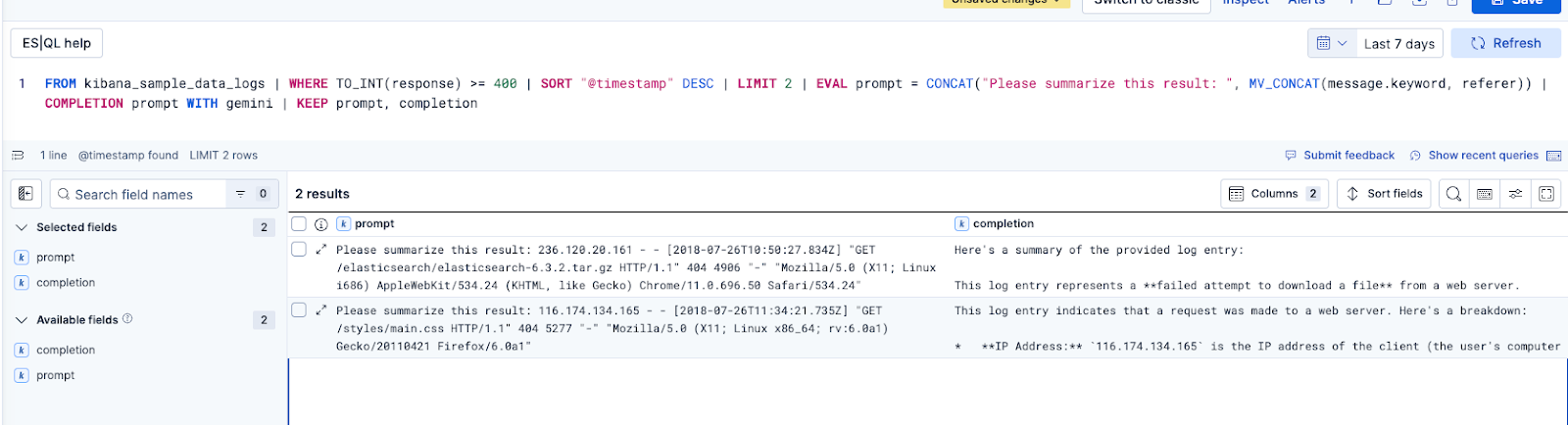

In the previous workflow, we used Google’s Gemini models to summarize the two failure web logs. We can also use ES|QL’s COMPLETION command to summarize the results directly in Kibana. First, we need to create a new inference endpoint that uses the completion task type.

PUT _inference/completion/gemini_completion

{

"service": "googlevertexai",

"service_settings": {

"service_account_json": "<output string from the sed command>",

"model_id": "gemini-2.5-flash-lite",

"location": "us-central1",

"project_id": "<project id>"

}

}

Again, we’ll get back a response similar to the following, with status code 200 OK, on successful inference endpoint creation:

{

"inference_id": "gemini_completion",

"task_type": "completion",

"service": "googlevertexai",

"service_settings": {

"project_id": "<project id>",

"location": "us-central1",

"model_id": "gemini-2.5-flash-lite",

"rate_limit": {

"requests_per_minute": 1000

}

}

}

We can now execute a modified version of the ES|QL query that Gemini generated for us.

FROM kibana_sample_data_logs | WHERE TO_INT(response) >= 400 | SORT @timestamp DESC | LIMIT 2 | EVAL prompt = CONCAT("Please summarize this result: ", message.keyword, " ", referer) | COMPLETION prompt WITH gemini_completion | KEEP prompt, completion

These are the modifications to the query:

- EVAL prompt: This command creates a field that is sent with the text to Gemini to provide specific fields and instructions on what to do with those fields. In our example, it will ask Gemini to summarize the contents of the message and the referer fields.

- COMPLETION: This command will pass each result to the LLM to perform the task we’ve instructed it to do.

- WITH gemini_completion: This is the inference endpoint ID we created earlier.

- KEEP prompt, completion: This instructs ES|QL to generate a table with the prompt we sent to Gemini and the results we received.
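As a rough mental model of those commands, the pipeline behaves like a loop that builds one prompt per row, sends it to the model, and keeps only two columns. The Python sketch below is an analogy under stated assumptions, not how Elasticsearch implements COMPLETION: `summarize_rows` is a hypothetical function, and `llm` stands in for the call to the gemini_completion endpoint.

```python
from typing import Callable, Dict, List


def summarize_rows(
    rows: List[Dict[str, str]], llm: Callable[[str], str]
) -> List[Dict[str, str]]:
    """Mimic EVAL prompt | COMPLETION prompt | KEEP prompt, completion."""
    table = []
    for row in rows:
        # EVAL prompt: instructions plus the fields the model should read.
        prompt = (
            "Please summarize this result: "
            + row["message.keyword"]
            + " "
            + row["referer"]
        )
        # COMPLETION: one model call per row.
        completion = llm(prompt)
        # KEEP: only these two columns remain in the output table.
        table.append({"prompt": prompt, "completion": completion})
    return table
```

Because each row triggers its own model call, keeping the LIMIT small keeps the number of requests to the endpoint small as well.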

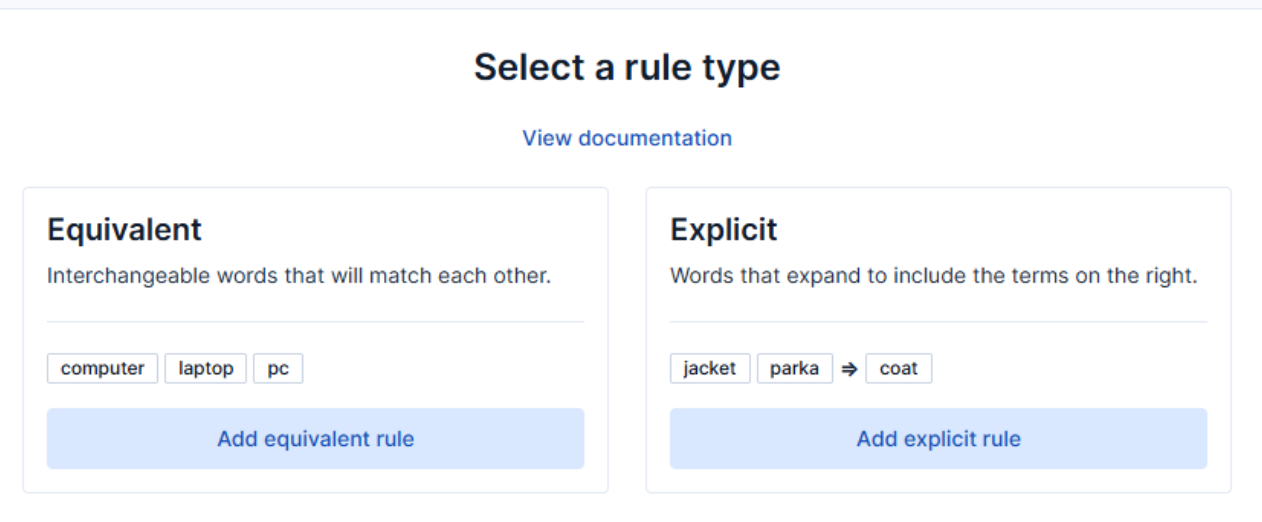

The results should look like the image below.

Wrap-up

We continue to bring state-of-the-art AI tools and providers to Elasticsearch, and we hope that you are as excited as we are about our integration with Google Cloud’s Vertex AI platform and Google’s Gemini models! Combine Google Cloud’s generative AI capabilities with Elasticsearch’s vector database and AI search tooling to deliver high-relevance, production-ready search and analytics experiences.

Visit Google Cloud’s Vertex AI page on Elasticsearch Labs, or try other sample notebooks on Search Labs GitHub.

To run Elasticsearch for local testing, use Docker to install and start both Elasticsearch and Kibana on your local machine with start-local:

curl -fsSL https://elastic.co/start-local | sh

Get started with your free Elastic Cloud trial or by subscribing through Google Cloud Marketplace.

Ready to try this out on your own? Start a free trial.

Want to get Elastic certified? Find out when the next Elasticsearch Engineer training is running!

Related content

October 30, 2025

Context engineering using Mistral Chat completions in Elasticsearch

Learn how to utilize context engineering with Mistral Chat completions in Elasticsearch to ground LLM responses in domain-specific knowledge for accurate outputs.

October 27, 2025

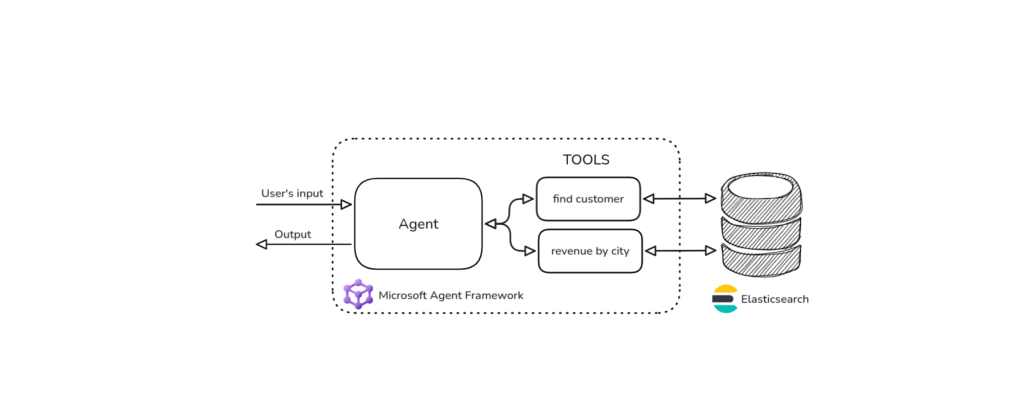

Building agentic applications with Elasticsearch and Microsoft’s Agent Framework

Learn how to use Microsoft Agent Framework with Elasticsearch to build an agentic application that extracts ecommerce data from Elasticsearch client libraries using ES|QL.

October 21, 2025

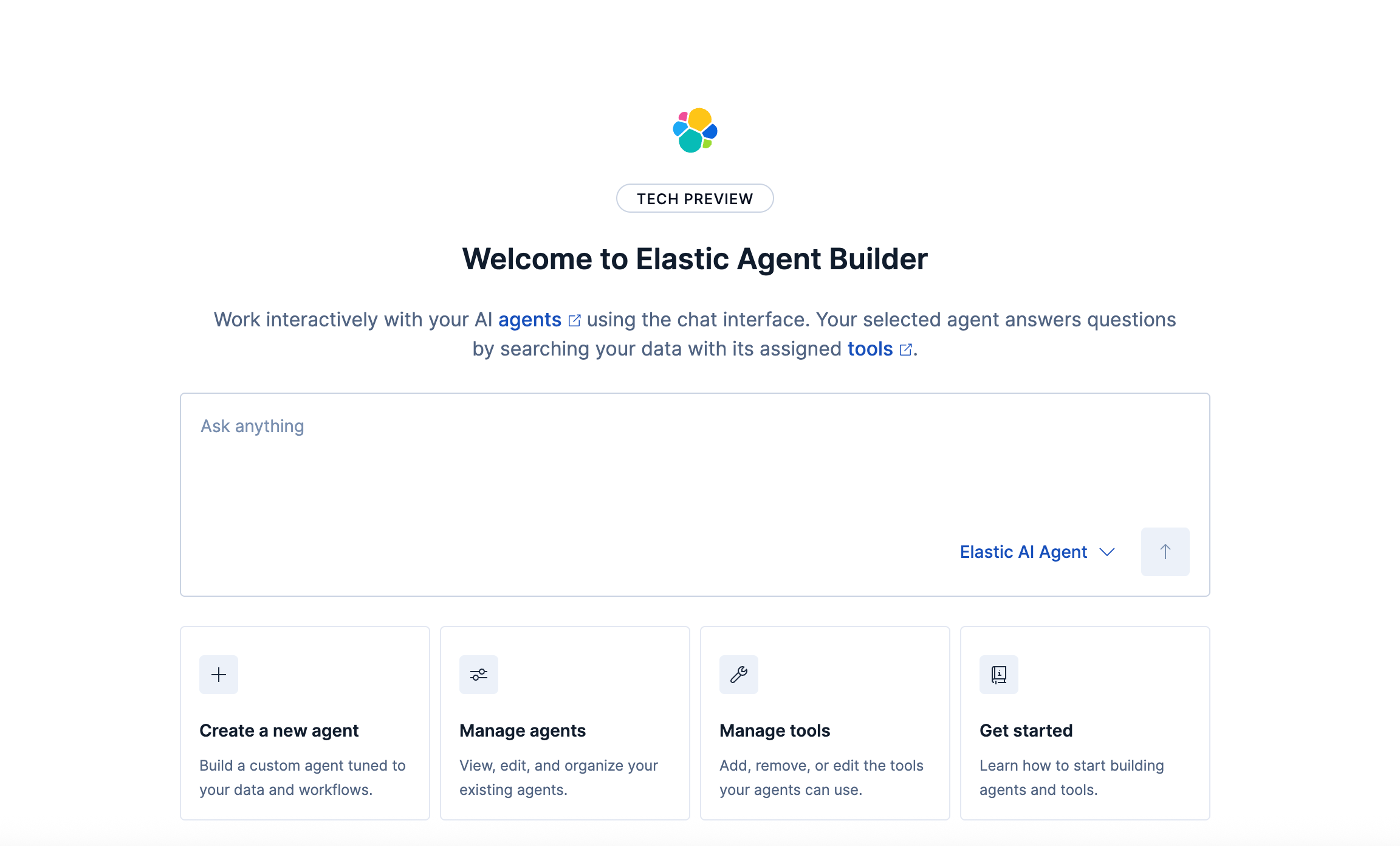

Introducing Elastic’s Agent Builder

Introducing Elastic Agent Builder, a framework to easily build reliable, context-driven AI agents in Elasticsearch with your data.

October 20, 2025

Elastic MCP server: Expose Agent Builder tools to any AI agent

Discover how to use the built-in Elastic MCP server in Agent Builder to securely extend any AI agent with access to your private data and custom tools.

How to use the Synonyms UI to upload and manage Elasticsearch synonyms

Learn how to use the Synonyms UI in Kibana to create synonym sets and assign them to indices.