The heap size is the amount of RAM allocated to the Java Virtual Machine of an Elasticsearch node.

As of version 7.11, Elasticsearch by default automatically sets the JVM heap size based on a node’s roles and total memory. Using the default sizing is recommended for most production environments. However, if you want to set the JVM heap size manually, as a general rule you should set -Xms and -Xmx to the same value, which should be 50% of your total available RAM, subject to a maximum of approximately 31GB. Beyond roughly that point the JVM can no longer use compressed object pointers, so additional heap is used less efficiently.
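The manual sizing rule above can be written down as a small calculation. This is only a sketch: the function names are hypothetical, and the 31GB cap is the approximate figure quoted above rather than a value read from a running JVM.

```python
def recommended_heap_gb(total_ram_gb: float, cap_gb: float = 31.0) -> float:
    """Return a manual heap size: half of total RAM, capped at about 31 GB."""
    if total_ram_gb <= 0:
        raise ValueError("total_ram_gb must be positive")
    return min(total_ram_gb / 2, cap_gb)


def heap_flags(total_ram_gb: float) -> str:
    """Format identical -Xms/-Xmx flags, expressed in whole megabytes."""
    heap_mb = int(recommended_heap_gb(total_ram_gb) * 1024)
    return f"-Xms{heap_mb}m -Xmx{heap_mb}m"


print(heap_flags(16))   # -Xms8192m -Xmx8192m
print(heap_flags(128))  # -Xms31744m -Xmx31744m (capped)
```

Using the same value for both flags means the heap never has to be resized while the node is running.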

A higher heap size will give your node more memory for indexing and search operations. However, your node also requires memory for caching, so using 50% maintains a healthy balance between the two. For this same reason in production you should avoid using other memory intensive processes on the same node as Elasticsearch.

Typically, heap usage follows a sawtooth pattern, oscillating between around 30% and 70% of the maximum heap. This is because heap usage rises steadily until the garbage collection process frees up memory again. High heap usage occurs when garbage collection cannot keep up. An indicator of this is garbage collection failing to bring heap usage back down to around 30%.

In the image above, you can see a normal sawtooth of JVM heap.
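One way to check the sawtooth numerically is to look at the low points that follow each collection. The sketch below assumes you already have a series of heap usage percentages sampled at regular intervals; the function names and the 40% limit (a small margin over the 30% figure mentioned above) are assumptions for illustration.

```python
def post_gc_troughs(heap_pct):
    """Return the local minima of a heap-usage series (points just after a GC)."""
    troughs = []
    for prev, cur, nxt in zip(heap_pct, heap_pct[1:], heap_pct[2:]):
        if cur < prev and cur <= nxt:
            troughs.append(cur)
    return troughs


def gc_keeping_up(heap_pct, trough_limit=40.0):
    """True if every post-GC trough is at or below trough_limit.

    Returns None when the window contains no trough, since a short
    window may simply not include a collection.
    """
    troughs = post_gc_troughs(heap_pct)
    if not troughs:
        return None
    return max(troughs) <= trough_limit


healthy = [30, 45, 60, 70, 32, 48, 65, 71, 31, 50]
pressured = [60, 75, 85, 70, 80, 90, 72, 85]
print(gc_keeping_up(healthy))    # True
print(gc_keeping_up(pressured))  # False
```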

You will also see that there are two types of garbage collection: young GC and old GC.

In a healthy JVM, garbage collection should ideally meet the following conditions:

- Young GC is processed quickly (within 50 ms).

- Young GC is not frequently executed (about once every 10 seconds).

- Old GC is processed quickly (within 1 second).

- Old GC is not frequently executed (no more than once every 10 minutes).
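The four conditions above can be checked from two snapshots of the GC counters. The sketch below assumes each snapshot holds the collection_count and collection_time_in_millis values that the node stats API reports per collector; the function names, the LIMITS table, and the sample numbers are made up for illustration. The result is the average time per collection, which is only an approximation of individual pause times.

```python
# Thresholds taken from the list above.
LIMITS = {
    "young": {"max_avg_ms": 50, "min_interval_s": 10},
    "old": {"max_avg_ms": 1000, "min_interval_s": 600},
}


def gc_summary(before, after, elapsed_s):
    """Compare two GC counter snapshots taken elapsed_s seconds apart."""
    summary = {}
    for name in ("young", "old"):
        runs = after[name]["collection_count"] - before[name]["collection_count"]
        millis = (after[name]["collection_time_in_millis"]
                  - before[name]["collection_time_in_millis"])
        summary[name] = {
            "avg_ms": millis / runs if runs else 0.0,
            "interval_s": elapsed_s / runs if runs else float("inf"),
        }
    return summary


def gc_warnings(summary):
    """List which of the healthy-GC conditions are violated."""
    warnings = []
    for name, limit in LIMITS.items():
        if summary[name]["avg_ms"] > limit["max_avg_ms"]:
            warnings.append(f"{name} GC is slow")
        if summary[name]["interval_s"] < limit["min_interval_s"]:
            warnings.append(f"{name} GC is too frequent")
    return warnings


# Hypothetical counters sampled 10 minutes (600 s) apart.
before = {"young": {"collection_count": 100, "collection_time_in_millis": 3000},
          "old": {"collection_count": 2, "collection_time_in_millis": 900}}
after = {"young": {"collection_count": 130, "collection_time_in_millis": 4200},
         "old": {"collection_count": 8, "collection_time_in_millis": 9900}}
print(gc_warnings(gc_summary(before, after, 600)))
```

In this sample, young GC averages 40 ms every 20 seconds and passes, while old GC averages 1.5 seconds every 100 seconds and fails both checks.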

How to resolve when heap memory usage is too high or when JVM performance is not optimal

There can be a variety of reasons why heap memory usage can increase:

Oversharding

Please see the document on oversharding here.

Large aggregation sizes

In order to avoid large aggregation sizes, keep the number of aggregation buckets (size) in your queries to a minimum.

    GET /_search
    {
      "aggs": {
        "products": {
          "terms": {
            "field": "product",
            "size": 5
          }
        }
      }
    }

You can use slow query logging (slow logs) and implement it on a specific index using the following.

    PUT /my_index/_settings
    {
      "index.search.slowlog.threshold.query.warn": "10s",
      "index.search.slowlog.threshold.query.info": "5s",
      "index.search.slowlog.threshold.query.debug": "2s",
      "index.search.slowlog.threshold.query.trace": "500ms",
      "index.search.slowlog.threshold.fetch.warn": "1s",
      "index.search.slowlog.threshold.fetch.info": "800ms",
      "index.search.slowlog.threshold.fetch.debug": "500ms",
      "index.search.slowlog.threshold.fetch.trace": "200ms",
      "index.search.slowlog.level": "info"
    }

Queries that take a long time to return results are likely to be the resource-intensive ones. Note that the index.search.slowlog.level setting was removed in Elasticsearch 8.0, so omit that line on 8.x clusters.

Excessive bulk index size

If you are sending large requests, then this can be a cause of high heap consumption. Try reducing the size of the bulk index requests.
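A simple way to keep bulk requests small is to split documents into batches by serialized size before sending them. The following is a minimal sketch: chunk_by_bytes is a hypothetical helper, the 300-byte limit is only there to make the example visible, and the size estimate ignores the action metadata line that accompanies each document in a real bulk body.

```python
import json


def chunk_by_bytes(docs, max_bytes):
    """Yield lists of docs whose combined serialized size stays within max_bytes.

    A single document larger than max_bytes is still yielded, alone in its batch.
    """
    batch, batch_bytes = [], 0
    for doc in docs:
        size = len(json.dumps(doc).encode("utf-8")) + 1  # +1 for the trailing newline
        if batch and batch_bytes + size > max_bytes:
            yield batch
            batch, batch_bytes = [], 0
        batch.append(doc)
        batch_bytes += size
    if batch:
        yield batch


docs = [{"id": i, "body": "x" * 100} for i in range(10)]
for batch in chunk_by_bytes(docs, max_bytes=300):
    print(len(batch), "documents in this bulk request")
```

Each batch would then be sent as its own bulk request, so the node only has to hold one small request in heap at a time.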

Mapping issues

In particular, if you use “fielddata: true” then this can be a major user of your JVM heap.
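To find such fields, you can walk an index mapping and list every field that has fielddata enabled. This sketch assumes you pass in the "properties" object of a mapping; the function name and the sample mapping are hypothetical.

```python
def fields_with_fielddata(properties, prefix=""):
    """Return dotted paths of fields mapped with "fielddata": true."""
    found = []
    for name, spec in properties.items():
        path = f"{prefix}{name}"
        if spec.get("fielddata") is True:
            found.append(path)
        # Recurse into object sub-fields ("properties") and multi-fields ("fields").
        for key in ("properties", "fields"):
            if key in spec:
                found.extend(fields_with_fielddata(spec[key], prefix=f"{path}."))
    return found


mapping = {
    "properties": {
        "title": {"type": "text", "fielddata": True},
        "product": {"type": "keyword"},
        "author": {"properties": {"bio": {"type": "text", "fielddata": True}}},
    }
}
print(fields_with_fielddata(mapping["properties"]))  # ['title', 'author.bio']
```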

Heap size incorrectly set

The heap size can be manually defined by:

Setting the environment variable:

    ES_JAVA_OPTS="-Xms2g -Xmx2g"

Editing the jvm.options file in your Elasticsearch configuration directory:

    -Xms2g
    -Xmx2g

The environment variable setting takes priority over the file setting.

It is necessary to restart the node for the setting to be taken into account.
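Before restarting, it can be worth confirming that the two flags really match. The sketch below parses JVM option text (either the value of ES_JAVA_OPTS or the contents of a jvm.options file) and compares -Xms with -Xmx. The helper names are hypothetical, and the parsing is deliberately simple: it skips lines starting with "#" and lets the last occurrence of each flag win.

```python
import re

_UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}
_HEAP_FLAG = re.compile(r"-(Xms|Xmx)(\d+)([kKmMgG]?)")


def heap_settings(options_text):
    """Extract -Xms and -Xmx values, in bytes, from JVM option text."""
    sizes = {}
    for line in options_text.splitlines():
        line = line.strip()
        if line.startswith("#"):
            continue  # skip commented-out options
        for flag, number, unit in _HEAP_FLAG.findall(line):
            sizes[flag] = int(number) * _UNITS.get(unit.lower(), 1)
    return sizes


def heap_is_consistent(options_text):
    """True only if both flags are present and set to the same size."""
    sizes = heap_settings(options_text)
    return "Xms" in sizes and "Xmx" in sizes and sizes["Xms"] == sizes["Xmx"]


print(heap_is_consistent("-Xms2g -Xmx2g"))    # True
print(heap_is_consistent("-Xms1g\n-Xmx2g"))   # False
```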

JVM new ratio incorrectly set

It is generally NOT necessary to set this, since Elasticsearch sets this value by default. This parameter defines the ratio of space available for “new generation” and “old generation” objects in the JVM.
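As a worked example of what the ratio means: in the HotSpot JVM, -XX:NewRatio=N makes the old generation N times the size of the young generation, so the young generation gets 1/(N+1) of the heap. The helper below is a hypothetical illustration of that arithmetic only; it does not read any value from a running JVM.

```python
def generation_sizes(heap_mb, new_ratio):
    """Split a heap into (young_mb, old_mb) for a given -XX:NewRatio value."""
    if new_ratio < 1:
        raise ValueError("new_ratio must be at least 1")
    young_mb = heap_mb / (new_ratio + 1)
    return young_mb, heap_mb - young_mb


young, old = generation_sizes(2048, 3)
print(f"young={young:.0f}MB old={old:.0f}MB")  # young=512MB old=1536MB
```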

If you see that old GC is becoming very frequent, you can try setting this value explicitly in the jvm.options file in your Elasticsearch config directory.

    -XX:NewRatio=3

What are the best practices for managing heap size usage and JVM garbage collection in a large Elasticsearch cluster?

The best practices are to set the heap size to no more than 50% of the available RAM and to make sure the JVM garbage collection settings suit your specific use case. Monitor JVM heap usage, the number of garbage collection cycles, and the time spent in garbage collection, including pause durations. These metrics make it possible to identify problems with the heap size or garbage collection settings and to take corrective action when necessary.

Ready to try this out on your own? Start a free trial.

Want to get Elastic certified? Find out when the next Elasticsearch Engineer training is running!

Related content

July 16, 2025

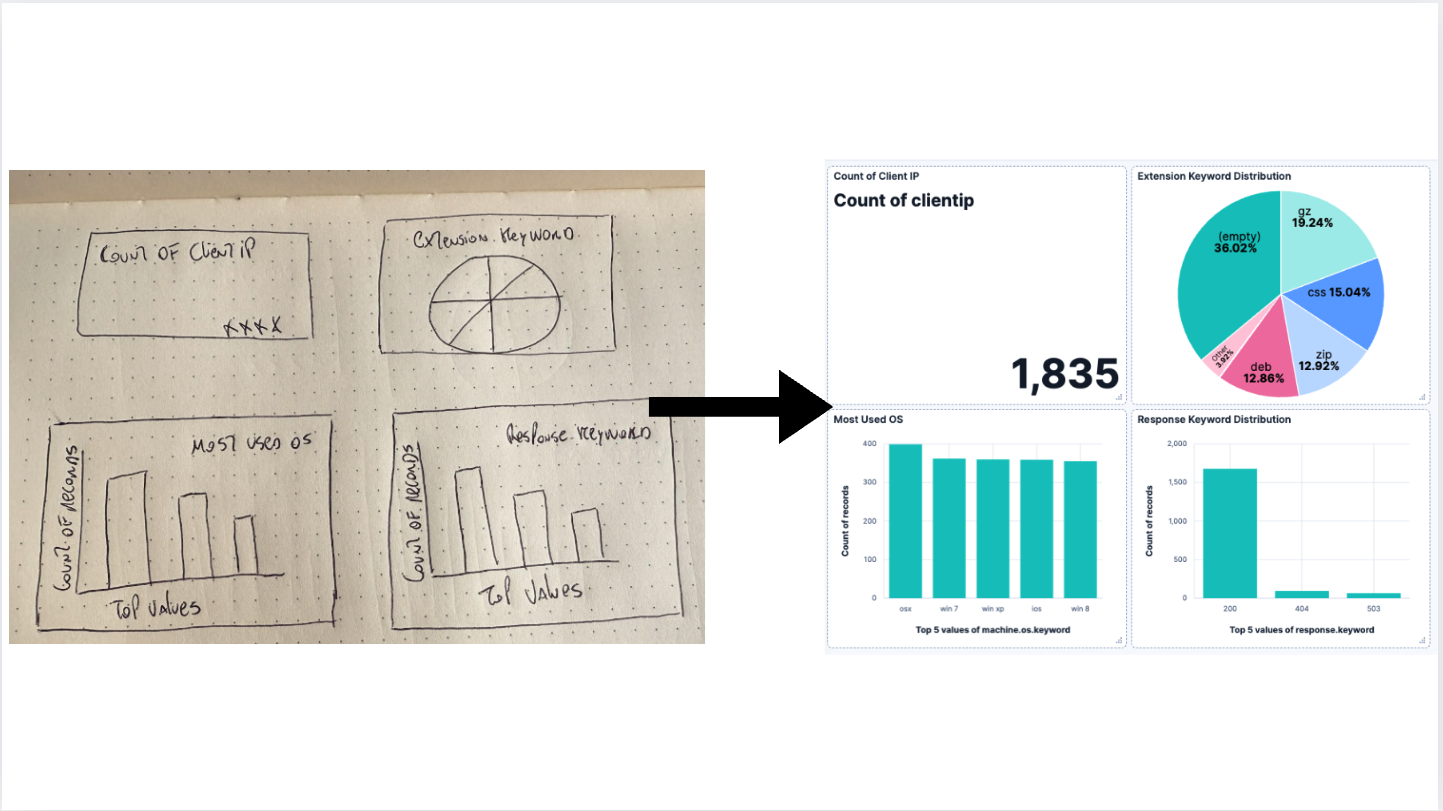

AI-powered dashboards: From a vision to Kibana

Generate a dashboard using an LLM to process an image and turn it into a Kibana Dashboard.

July 15, 2025

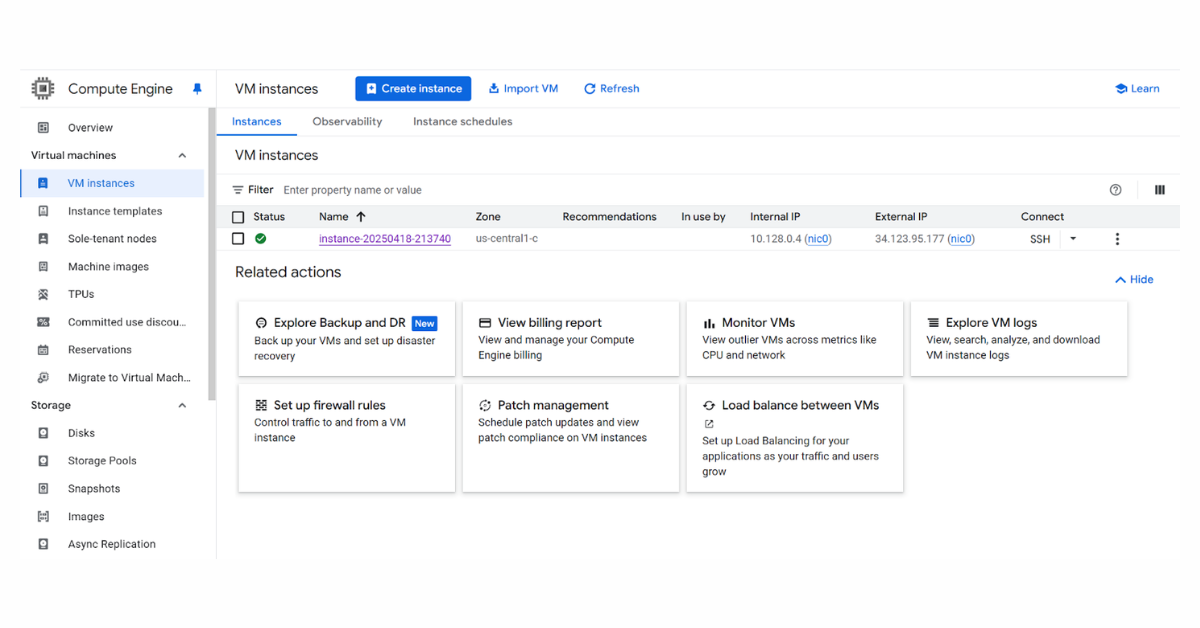

Elasticsearch made simple: GCP Google Compute Engine

Learn how to set up an Elasticsearch deployment on a Google Compute Engine VM instance with Kibana for search capabilities.

July 11, 2025

Longer context ≠ Better: Why RAG still matters

Learn why the RAG strategy is still relevant and gives the most efficient and better results.

July 4, 2025

Efficient pagination with collapse and cardinality in Elasticsearch

Deduplicating product variants in Elasticsearch? Here’s how to determine the correct pagination.

June 26, 2025

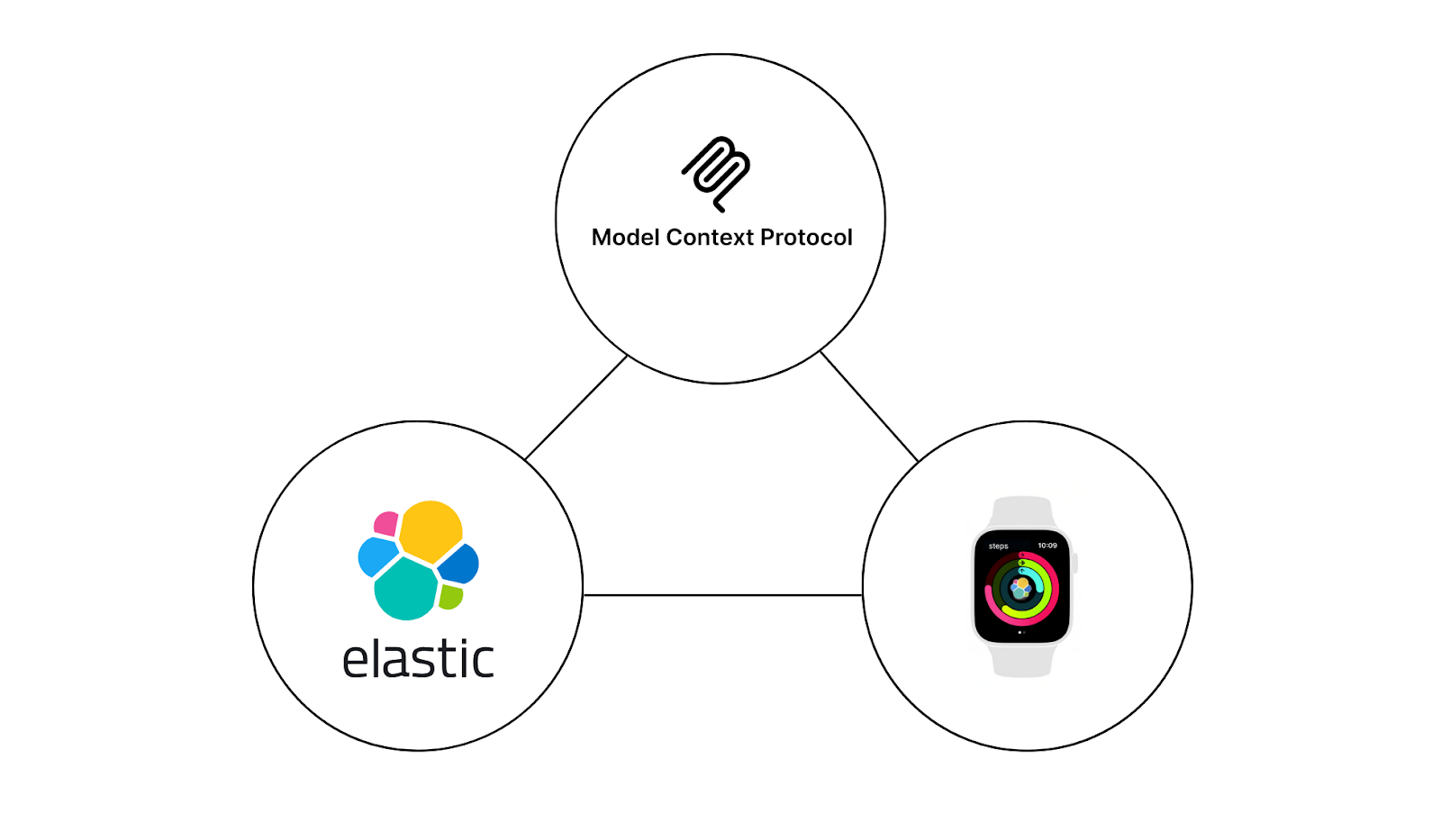

Building an MCP server with Elasticsearch for real health data

Learn learn how to build an MCP server using FastMCP and Elasticsearch to manage and search data.