In this article, we will discuss how to parse JSON fields in Elasticsearch, which is a common requirement when dealing with log data or other structured data formats. We will cover the following topics:

- Ingesting JSON data into Elasticsearch

- Using an Ingest Pipeline to parse JSON fields

- Querying and aggregating JSON fields

1. Ingesting JSON data into Elasticsearch

When ingesting JSON data into Elasticsearch, make sure each document is valid JSON with a consistent structure. Elasticsearch can detect and map JSON fields automatically through dynamic mapping, but defining an explicit mapping is recommended because it gives you control over the type each field is indexed as.
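To see why an explicit mapping can matter, the sketch below approximates in plain Python what the default dynamic mapping rules would choose for a document. It is only an illustration, not Elasticsearch code: the function name `guess_dynamic_mapping` is hypothetical, and date detection and dynamic templates are deliberately ignored.

```python
import json


def guess_dynamic_mapping(value):
    """Approximate the mapping that default dynamic mapping would pick.

    Simplified sketch: date detection on strings and dynamic templates
    are ignored. Returns None when no field would be added.
    """
    if value is None:
        return None  # null values do not create a field
    if isinstance(value, bool):
        # Checked before int because bool is a subclass of int in Python.
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "long"}
    if isinstance(value, float):
        return {"type": "float"}
    if isinstance(value, str):
        # Strings become text with a keyword sub-field by default.
        return {
            "type": "text",
            "fields": {"keyword": {"type": "keyword", "ignore_above": 256}},
        }
    if isinstance(value, list):
        # Arrays take the type of their first non-null element.
        for item in value:
            if item is not None:
                return guess_dynamic_mapping(item)
        return None
    if isinstance(value, dict):
        properties = {}
        for name, child in value.items():
            child_mapping = guess_dynamic_mapping(child)
            if child_mapping is not None:
                properties[name] = child_mapping
        return {"properties": properties}
    raise TypeError(f"unsupported JSON value: {value!r}")


doc = {"json_field": {"field1": "value1", "field2": 42}}
guessed = guess_dynamic_mapping(doc)
print(json.dumps(guessed, indent=2))
```

Under these assumptions, field1 is guessed as text with a keyword sub-field and field2 as long. An explicit mapping lets you choose keyword and integer instead.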

To create an index with a custom mapping, you can use the following API call:

    PUT /my_index
    {
      "mappings": {
        "properties": {
          "message": {
            "type": "keyword"
          },
          "json_field": {
            "properties": {
              "field1": {
                "type": "keyword"
              },
              "field2": {
                "type": "integer"
              }
            }
          }
        }
      }
    }

In this example, we create an index called my_index with a custom mapping for a JSON field named json_field.

2. Using an Ingest Pipeline to parse JSON fields

If your JSON data is stored as a string within a field, you can use the Ingest Pipeline feature in Elasticsearch to parse the JSON string and extract the relevant fields. The Ingest Pipeline provides a set of built-in processors, including the json processor, which can be used to parse JSON data.

To create an ingest pipeline with the json processor, use the following API call:

    PUT _ingest/pipeline/json_parser
    {
      "description": "Parse JSON field",
      "processors": [
        {
          "json": {
            "field": "message",
            "target_field": "json_field"
          }
        }
      ]
    }

In this example, we create an ingest pipeline called json_parser that parses the JSON string stored in the message field and stores the resulting JSON object in a new field called json_field.
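As a rough mental model, the effect of the json processor on a single document can be imitated in a few lines of Python. This is a local simulation only, with a hypothetical helper name (`apply_json_processor`); it does not call Elasticsearch. It assumes that omitting target_field makes the parsed value replace the original field, and that invalid JSON makes the processor fail.

```python
import json


def apply_json_processor(doc, field, target_field=None):
    """Return a copy of doc with the JSON string in `field` parsed.

    The parsed value is written to `target_field`, or back to `field`
    when no target is given. Invalid JSON raises ValueError, standing
    in for a processor failure.
    """
    if field not in doc:
        raise KeyError(f"field [{field}] not present in document")
    parsed = json.loads(doc[field])  # json.JSONDecodeError is a ValueError
    result = dict(doc)  # shallow copy so the input document is left untouched
    result[target_field or field] = parsed
    return result


source = {"message": '{"field1": "value1", "field2": 42}'}
parsed_doc = apply_json_processor(source, field="message", target_field="json_field")
print(json.dumps(parsed_doc, indent=2))
```

The original message string is kept alongside the new json_field object, which matches the pipeline configuration above where target_field is set.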

To index a document using this pipeline, use the following API call:

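    POST /my_index/_doc?pipeline=json_parser
    {
      "message": "{\"field1\": \"value1\", \"field2\": 42}"
    }

The document is indexed with the parsed JSON fields. Retrieving it returns something like the following (the _id shown is illustrative, because this request lets Elasticsearch generate one, and _type is only present in responses from versions before 8.0):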

    {
      "_index": "my_index",
      "_type": "_doc",
      "_id": "1",
      "_source": {
        "message": "{\"field1\": \"value1\", \"field2\": 42}",
        "json_field": {
          "field1": "value1",
          "field2": 42
        }
      }
    }

3. Querying and aggregating JSON fields

Once the JSON fields are indexed, you can query and aggregate them using the Elasticsearch Query DSL. For example, to search for documents with a specific value in the field1 subfield, you can use the following query:

    POST /my_index/_search
    {
      "query": {
        "term": {
          "json_field.field1": "value1"
        }
      }
    }

To aggregate the values of the field2 subfield, you can use the following aggregation:

    POST /my_index/_search
    {
      "size": 0,
      "aggs": {
        "field2_sum": {
          "sum": {
            "field": "json_field.field2"
          }
        }
      }
    }

Bonus: How to deal with unparsed JSON data?

If you have already ingested unparsed JSON data into a text or keyword field, you can extract the JSON without reindexing everything from scratch.

You can leverage the Update by Query API with the ingest pipeline developed in section 2. But before running that update, you’ll first need to update your index mapping similarly to what we did in section 1 to add the json_field mapping, by running the command below:

    PUT /my_index/_mapping
    {
      "properties": {
        "json_field": {
          "properties": {
            "field1": {
              "type": "keyword"
            },
            "field2": {
              "type": "integer"
            }
          }
        }
      }
    }

When done, you can simply run the command below, which will iterate over all documents in your index, extract the JSON from the message field and index the parsed JSON data into the json_field object.

    POST /my_index/_update_by_query?pipeline=json_parser

Conclusion

In conclusion, parsing JSON fields in Elasticsearch can be achieved using custom mappings, the Ingest Pipeline feature, and the Elasticsearch Query DSL. By following these steps, you can efficiently index, query, and aggregate JSON data in your Elasticsearch cluster.

Ready to try this out on your own? Start a free trial.

Want to get Elastic certified? Find out when the next Elasticsearch Engineer training is running!

Related content

October 31, 2025

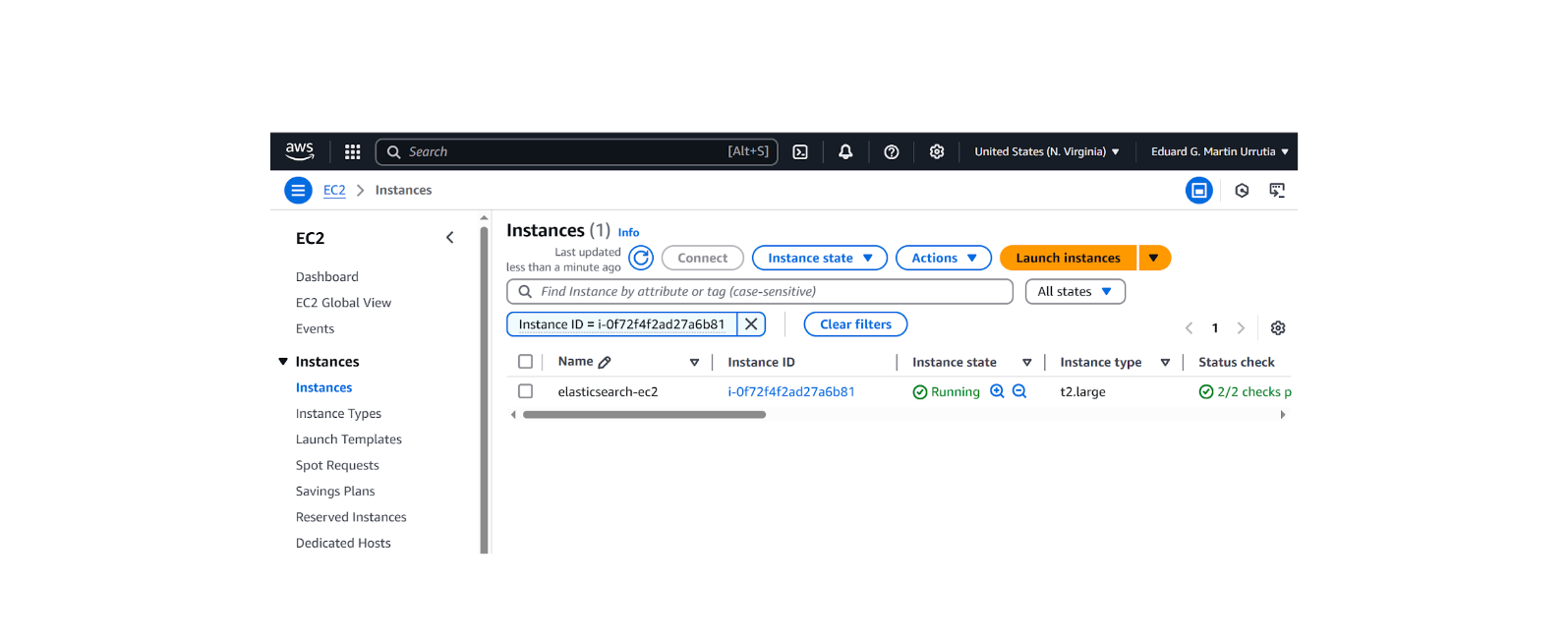

How to install and configure Elasticsearch on AWS EC2

Learn how to deploy Elasticsearch and Kibana on an Amazon EC2 instance in this step-by-step guide.

October 29, 2025

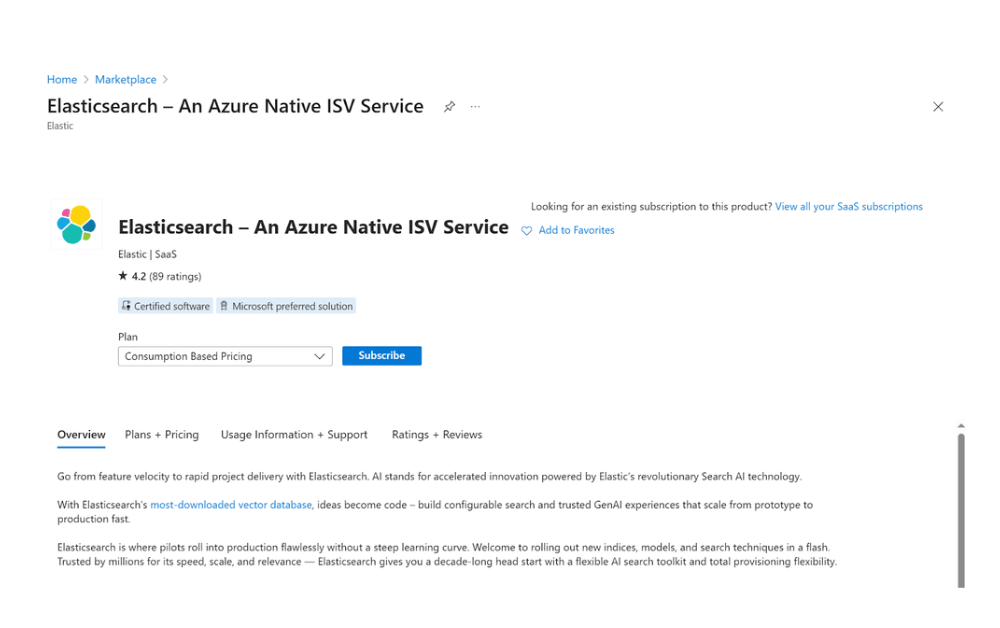

How to deploy Elasticsearch on an Azure Virtual Machine

Learn how to deploy Elasticsearch on Azure VM with Kibana for full control over your Elasticsearch setup configuration.

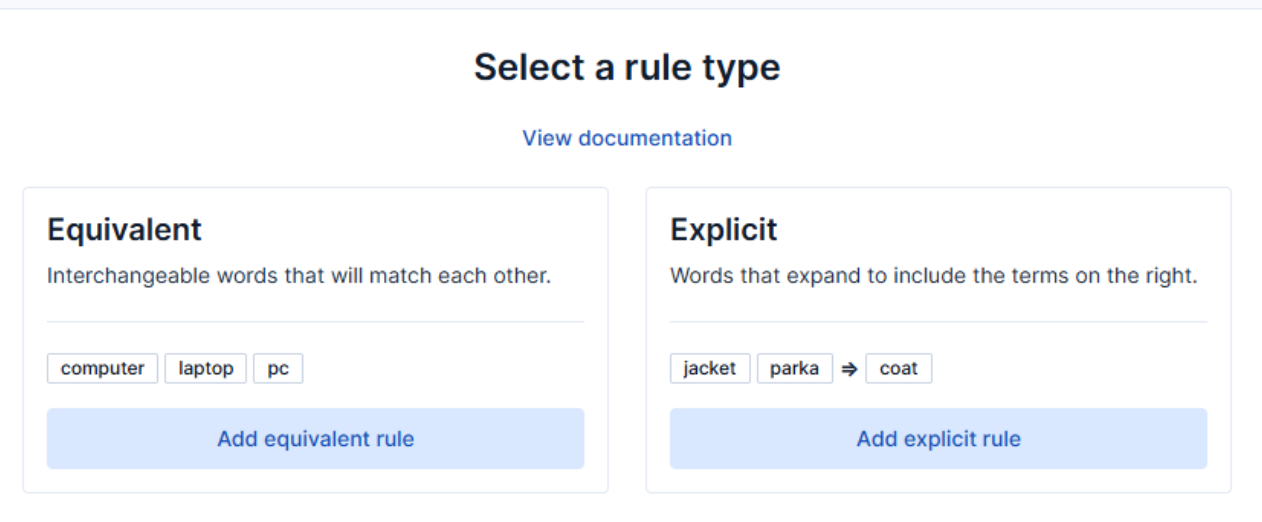

How to use the Synonyms UI to upload and manage Elasticsearch synonyms

Learn how to use the Synonyms UI in Kibana to create synonym sets and assign them to indices.

October 8, 2025

How to reduce the number of shards in an Elasticsearch Cluster

Learn how Elasticsearch shards affect cluster performance in this comprehensive guide, including how to get the shard count, change it from default, and reduce it if needed.

October 3, 2025

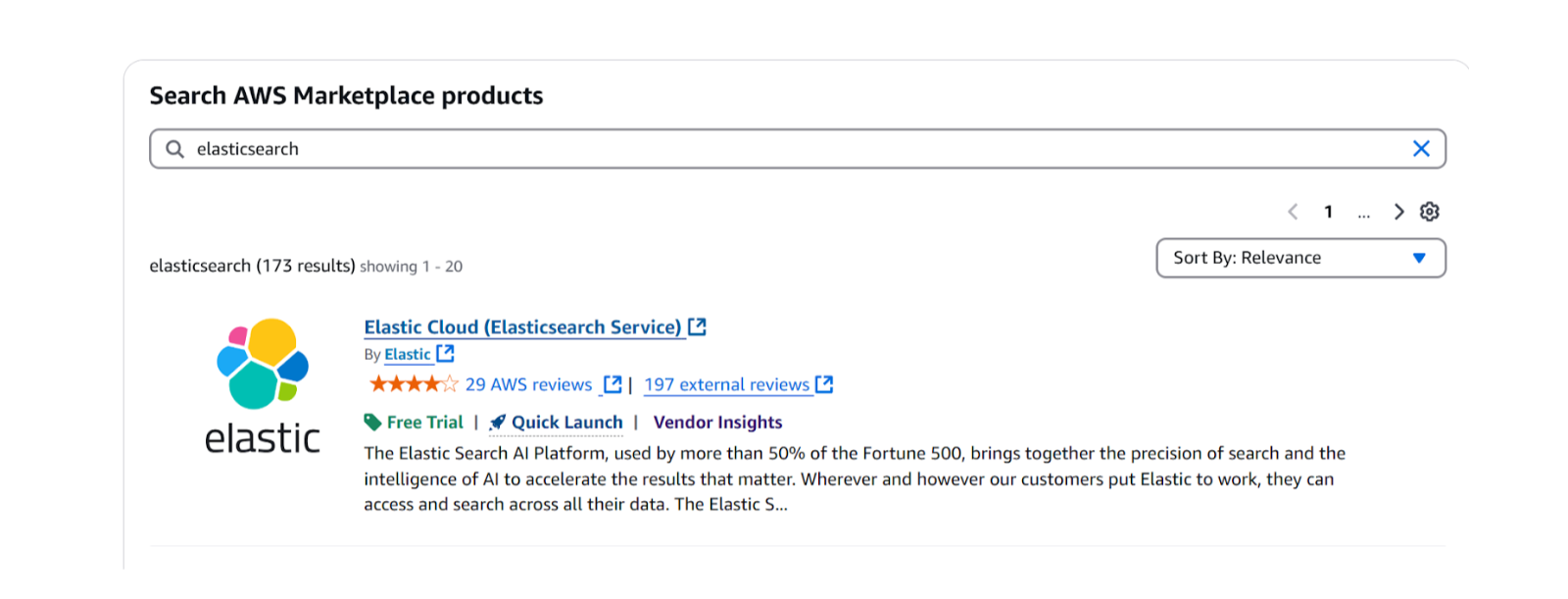

How to deploy Elasticsearch on AWS Marketplace

Learn how to set up and run Elasticsearch using Elastic Cloud Service on AWS Marketplace in this step-by-step guide.